
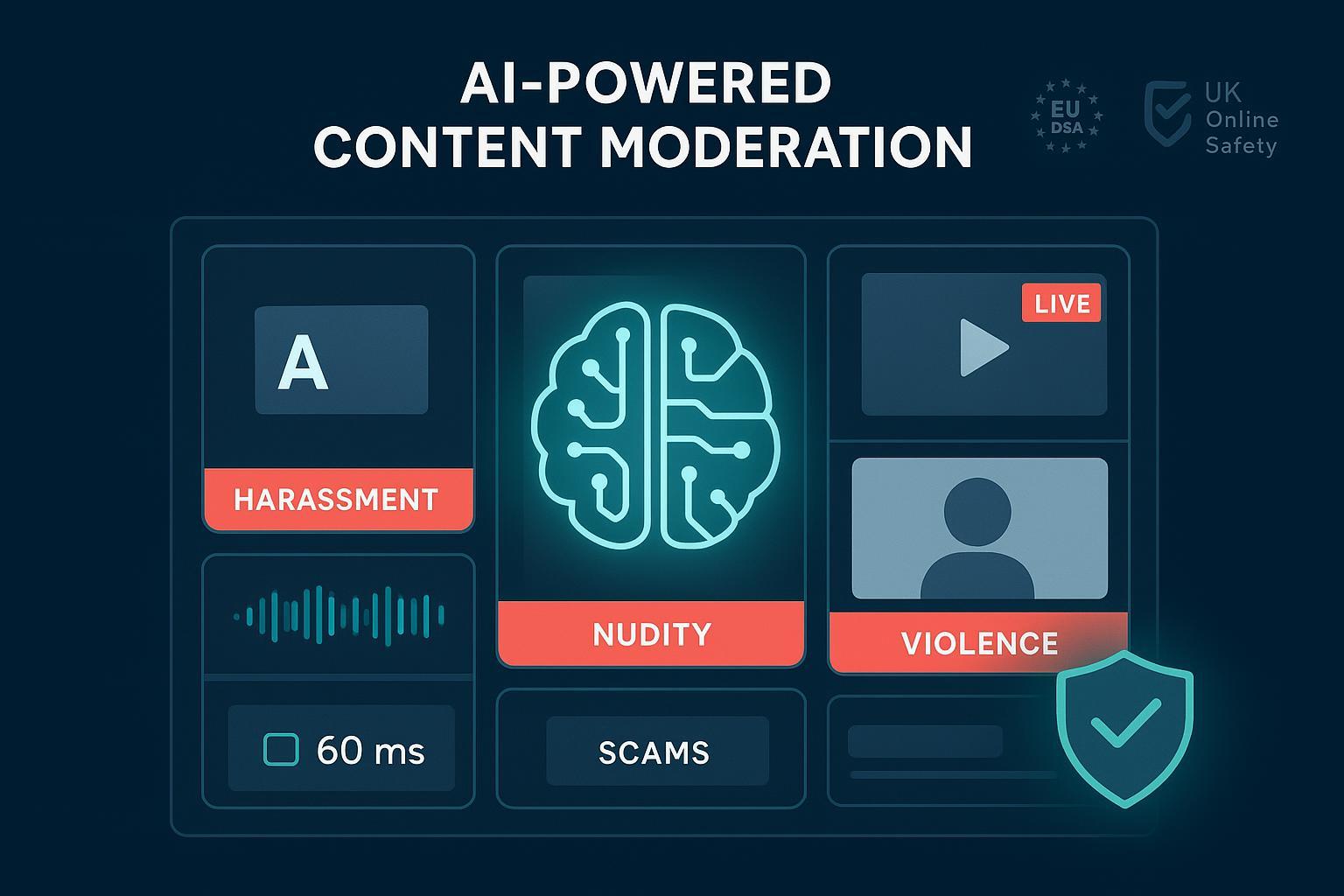

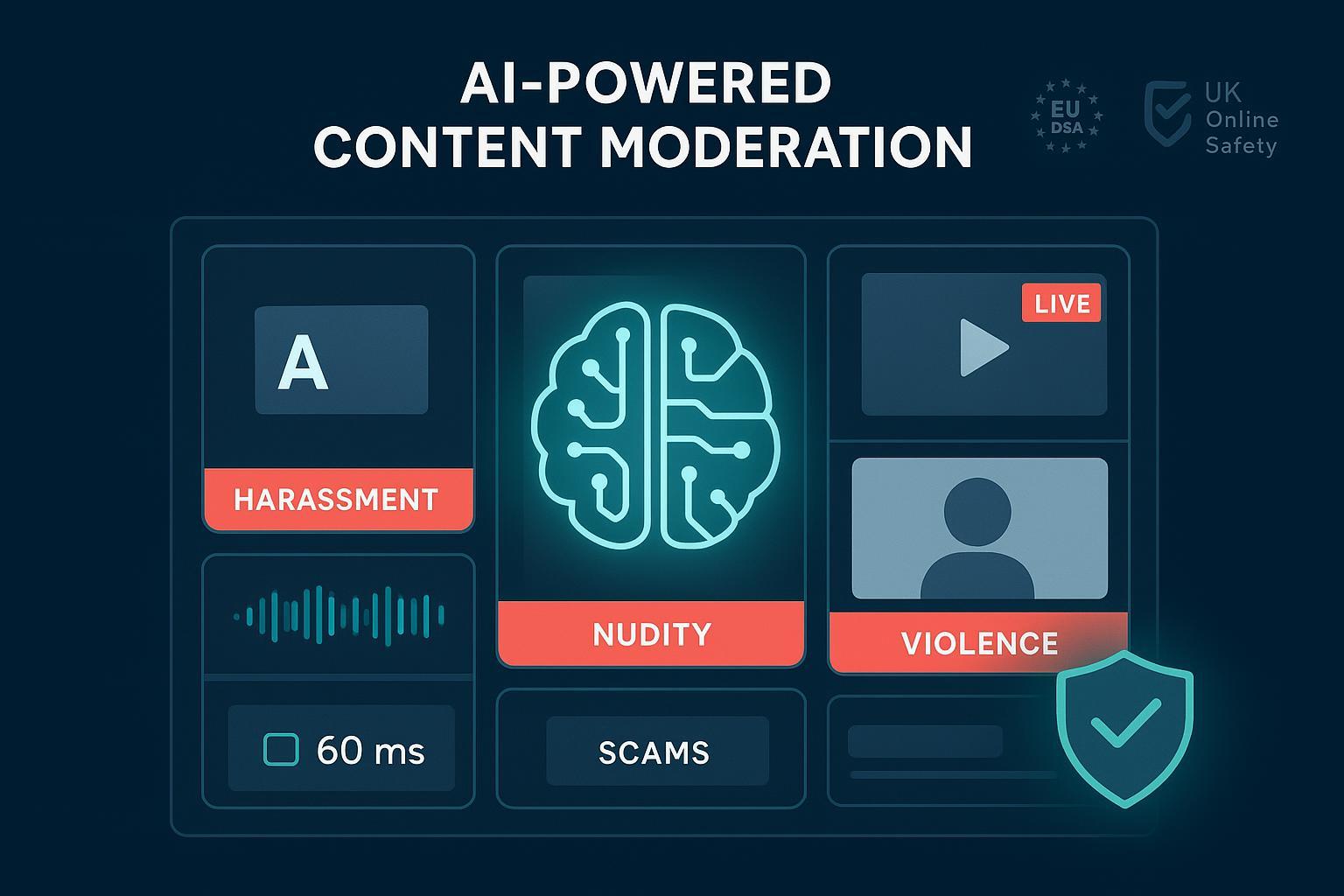

Content Moderation Made Easy with AI Automation (2025)

Image Source: statics.mylandingpages.co

Content moderation in 2025 isn’t just about blocking bad posts. It’s a continuous, risk-managed system that protects users, revenue, and brand reputation across text, images, audio, video, and live streams—often in multiple languages and at massive scale. Below is a practitioner’s playbook drawn from real deployments: how to implement AI automation that’s fast, fair, auditable, and economical.

Principles that don’t age

These are the foundations I’ve seen hold up across industries and scale.

- Risk-based first. Map your highest-impact harms (e.g., child safety, violent threats, fraud) to the strictest controls and lowest latency. Lower-risk nuisances (spam, low-grade profanity) can tolerate slower paths.

- Policy → signal mapping. Convert policy text into detectable signals and thresholds (keywords, embeddings, image/video models, speech-to-text). Each rule should tie to a measurable signal.

- Multi-modal and multi-lingual by design. Real harm crosses formats and languages; design ingestion, enrichment (OCR, ASR, translation), and decisioning for cross-signal correlation.

- Human-in-the-loop where it counts. Use AI for triage and automation, but maintain escalation for ambiguous, sensitive, or high-risk cases. Measure reviewer impact.

- Measurement-first. Define precision, recall, latency SLOs, and cost per decision before you ship. What you don’t measure will drift.

- Privacy and auditability baked in. Minimize data retention, control access, and keep an immutable decision log for regulators and appeals.
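
The policy-to-signal mapping above can be sketched as a small rule table. This is a minimal illustration, not a production design: the policy IDs, signal names, thresholds, and actions below are all hypothetical placeholders, and a real system would load them from versioned policy configuration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    policy_id: str    # hypothetical policy reference
    signal: str       # hypothetical name of a model score or detector output
    threshold: float  # the rule fires when the signal is at or above this value
    action: str       # "remove", "restrict", or "deprioritize"
    severity: int     # higher means more severe

# Illustrative values only; real thresholds come from evaluation on gold data.
RULES = [
    Rule("CS-1", "minor_safety_score", 0.50, "remove", 3),
    Rule("VT-2", "violent_threat_score", 0.80, "remove", 3),
    Rule("SP-7", "spam_score", 0.90, "deprioritize", 1),
]

def decide(signals: dict) -> tuple:
    """Return (action, fired policy IDs); the most severe fired rule wins."""
    fired = [r for r in RULES if signals.get(r.signal, 0.0) >= r.threshold]
    if not fired:
        return "allow", []
    top = max(fired, key=lambda r: r.severity)
    return top.action, [r.policy_id for r in fired]
```

Returning the fired policy IDs alongside the action keeps each decision traceable to a measurable signal, which is what the decision log and appeals flow need.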

According to the European Commission’s 2024–2025 guidance, the Digital Services Act expects platforms to run ongoing risk assessments and mitigation for systemic risks. See the concise overview in the European Commission’s own page: EU DSA risk management duties. The UK’s Ofcom is phasing in Online Safety Act duties through 2025; their guidance clarifies proportionate measures and risk assessments: Ofcom Online Safety duties overview. For governance, the NIST AI Risk Management Framework (1.0, 2023) remains a solid anchor for AI system controls, documentation, and monitoring: NIST AI RMF 1.0.

Implementation playbook: from pilot to scale

The fastest path to reliable automation is incremental. Here’s a sequence that works in practice.

Phase 0 — Prepare (2–4 weeks)

- Build a risk register. Rank harms by severity and likelihood. Tie each to policy references and legal exposure.

- Inventory policies and gaps. Normalize them into machine-detectable rules; define “must remove,” “restrict/age-gate,” “deprioritize,” and “allow.”

- Define a taxonomy. Use consistent labels (e.g., sexual content → nudity: explicit/partial/suggestive; violent content → weapon presence/use, gore, threats; integrity → scams, impersonation, coordinated spam).

- Create gold data. Sample 1,000–5,000 items from recent traffic, label with dual-reviewers, compute inter-rater agreement, and capture edge cases.

- Set target metrics. Example starting targets: precision ≥ 0.95 on high-severity; recall ≥ 0.90; p95 latency ≤ 150 ms for streaming checks; cost ≤ $1 per 1,000 decisions for low-risk spam.
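
For the gold-data step, one common way to quantify inter-rater agreement between two reviewers is Cohen's kappa. The sketch below is one possible choice (the text does not prescribe a specific statistic) and assumes each reviewer's labels arrive as equal-length lists.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two reviewers labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both reviewers chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement by chance, from each reviewer's label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both reviewers used a single identical label throughout
    return (observed - expected) / (1 - expected)
```

Low kappa on a class usually signals an ambiguous policy definition rather than careless reviewers, so it is worth checking per class before using the labels as ground truth.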

Phase 1 — Pilot (2–6 weeks)

- Narrow scope. Pick one high-impact category (e.g., explicit nudity) on one content type (e.g., images) and one locale.

- Shadow mode first. Run models without enforcing; compare decisions with human ground truth to establish baseline precision/recall.

- Sampling protocol. Auto-clear obvious safe content but send 2–5% to review for drift detection; 100% of auto-blocks above a severity threshold should be sampled initially.

- Threshold discovery. A/B different confidence thresholds to find the cost/quality sweet spot; record ROC-AUC with your gold data.

- Reviewer tooling. Ensure single-key dispositions, hotkeys, evidence panes (OCR/ASR snippets), and policy snippets inline. Measure reviewer time per item.
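
Threshold discovery in shadow mode can be sketched as a sweep over candidate thresholds against the gold labels. This is an illustrative helper under simple assumptions: `scores` are model confidences, `labels` are human ground truth (truthy means violating), and the candidate list is chosen by you.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when items scoring >= threshold are flagged."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall

def lowest_threshold_meeting_precision(scores, labels, target_precision, candidates):
    """Return (threshold, precision, recall) for the lowest candidate that
    meets the precision target, or None if no candidate does.

    Recall never increases as the threshold rises, so the lowest qualifying
    threshold also has the best recall among the qualifying candidates.
    """
    for t in sorted(candidates):
        p, r = precision_recall(scores, labels, t)
        if p >= target_precision:
            return t, p, r
    return None
```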

Phase 2 — Scale (4–12 weeks)

- Expand coverage. Add additional classes (weapons, self-harm, scams), modalities (text, audio, video), and geographies.

- Queue design. Split queues by risk/latency: ultra-low latency for livestream pre- and mid-stream checks, near-real-time for uploads, batch for retroactive sweeps.

- SLA/SLOs. Define p50/p95/p99 latency targets by queue. For livestreams, enforce p95 ≤ 150 ms checks with sliding window analysis; for uploads, 1–2 s end-to-end is acceptable.

- Appeals & transparency. Build an appeals flow with timestamps, decision rationale, and the ability to review model evidence. Track appeal sustain rate as a quality signal.
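
A per-queue latency check against these targets might look like the following sketch. The queue names and the `SLO_P95_MS` table are assumptions derived from the figures above (150 ms for livestreams, 2 s for uploads), and the percentile uses the simple nearest-rank method.

```python
import math

# Assumed p95 targets in milliseconds, based on the figures in the text.
SLO_P95_MS = {"livestream": 150, "upload": 2000}

def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]

def slo_report(latencies_ms_by_queue):
    """Summarize p50/p95/p99 per queue and flag whether the p95 target is met."""
    report = {}
    for queue, samples in latencies_ms_by_queue.items():
        p95 = percentile(samples, 95)
        report[queue] = {
            "p50": percentile(samples, 50),
            "p95": p95,
            "p99": percentile(samples, 99),
            "meets_slo": p95 <= SLO_P95_MS[queue],
        }
    return report
```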

Phase 3 — Optimize (ongoing)

- Active learning. Re-label disagreements and high-uncertainty items to continuously refresh training/eval sets.

- Drift detection. Monitor concept drift (new slang, visual trends) with canary models and anomaly alerts on false positives/negatives.

- Threshold tuning. Revisit thresholds monthly; revisit taxonomy quarterly based on incident reviews.

- Ergonomics. Reduce reviewer cognitive load: batch similar items, pre-highlight risky regions in frames, provide quick policy macros.
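
The active-learning step can be sketched as a relabel queue that puts model/human disagreements first and then fills the remaining budget with the items closest to the decision threshold. The item fields (`id`, `score`, `human`) are hypothetical, and distance to threshold is only one of several possible uncertainty measures.

```python
def relabel_queue(items, threshold, budget):
    """Pick item IDs to re-label: disagreements first, then most uncertain.

    Each item is a dict with "id", "score" (model confidence), and "human"
    (True/False for a reviewer verdict, or None if never reviewed).
    """
    def disagrees(item):
        model_flag = item["score"] >= threshold
        return item["human"] is not None and item["human"] != model_flag

    disagreements = [i for i in items if disagrees(i)]
    rest = [i for i in items if not disagrees(i)]
    # Scores nearest the threshold are where the model is least certain.
    rest.sort(key=lambda i: abs(i["score"] - threshold))
    return [i["id"] for i in (disagreements + rest)[:budget]]
```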

A 2024–2025 pattern worth emphasizing: livestreams and short-form video create bursty, high-volume risk. Ofcom’s staged duties and the DSA’s systemic risk lens both push for fast mitigation plus strong audit trails, not merely accuracy.

Multi-modal and real-time workflows

Different content requires different enrichment and decision paths. Below are battle-tested flows.

Text

- Hybrid stack: a lightweight profanity/keyword filter for cheap triage; an LLM-guided classifier for nuanced harassment, threats, and self-harm; and pattern detectors for links and phone numbers.

- Context windowing: include a few preceding messages or post history to reduce misclassification of sarcasm/quotes.

- Code-switching and slang: maintain locale-specific lexicons and update monthly.
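
The cheap first tier of the hybrid text stack could look roughly like this. It is a sketch only: the blocklist entry is a placeholder, and the link and phone patterns are deliberately loose assumptions that will over-match (for example, on date ranges), so their output is best treated as a signal for the downstream classifier rather than a verdict.

```python
import re

BLOCKLIST = {"examplebadword"}  # placeholder; real lexicons are locale-specific
URL_RE = re.compile(r"https?://\S+|www\.\S+", re.IGNORECASE)
# Loose phone pattern: a digit, 7+ digits/separators, then a closing digit.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def triage_signals(text):
    """Return cheap boolean signals for a single message."""
    tokens = set(re.findall(r"[a-z0-9']+", text.lower()))
    return {
        "blocklist_hit": bool(tokens & BLOCKLIST),
        "has_link": bool(URL_RE.search(text)),
        "has_phone": bool(PHONE_RE.search(text)),
    }
```

Messages with no cheap signals still need the nuanced classifier for harassment, threats, and self-harm; the triage tier mainly decides priority and adds features.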

Images

- Core detectors: nudity (explicit/partial), content that sexualizes minors, weapons, violence/gore, drugs, hate symbols, self-harm cues.

- OCR pass: extract text from images/memes and re-run text policies; flag obfuscated contact info and scam hooks.

- Adversarial robustness: augment with crops, blurs, and color shifts; use ensemble models to reduce single-point failures.

Audio

- ASR first: transcribe with diarization; re-run text classifiers on transcripts.

- Prosody and cues: detect screams, aggressive tone, and minors’ voices with caution; use these as secondary signals, not sole grounds for removal.

- Stream handling: chunk into 5–10 s windows; maintain rolling context for continuity.
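
Chunking a stream into fixed windows while carrying rolling context can be sketched as below. The 5 s window matches the range in the text; the 2 s context overlap is an assumed value, and the function only computes time bounds (slicing the actual audio is left to your ASR pipeline).

```python
def window_bounds(duration_s, window_s=5.0, context_s=2.0):
    """Yield (context_start, start, end) in seconds for each window.

    The span context_start..start is earlier audio replayed as rolling
    context; start..end is the new audio to classify.
    """
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    start = 0.0
    while start < duration_s:
        end = min(start + window_s, duration_s)
        yield (max(0.0, start - context_s), start, end)
        start = end
```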

Video

- Keyframe + segment sampling: analyze keyframes plus motion-aware segments; fuse with audio transcript results.

- Object/scene models: weapons, blood, adult nudity, drug paraphernalia; use temporal consistency to lower false positives.

- Deepfake risk: run face-manipulation detectors for high-reach or verified accounts; require higher reviewer sampling.

Livestreams

- Sliding window. Maintain a 3–5 s sliding window inspection with sub-150 ms p95 inference budget.

- Pre-moderation. Run pre-stream device checks, title/thumbnail analysis, and early chat rate limiting.

- Kill-switch & graduated responses. Shadow-ban, blur, age-gate, or pause; reserve hard shutdown for imminent harm.
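
The sliding window and graduated responses can be combined in a small sketch. The 4 s window sits inside the 3–5 s range above; the score cut-offs and the ordering of responses are illustrative assumptions, not recommended values, and `imminent_harm` stands in for whatever high-severity signal your policy defines.

```python
from collections import deque

class SlidingWindow:
    """Keeps per-frame risk scores from the last window_s seconds."""

    def __init__(self, window_s=4.0):
        self.window_s = window_s
        self.samples = deque()  # (timestamp_s, score) pairs, oldest first

    def add(self, timestamp_s, score):
        self.samples.append((timestamp_s, score))
        # Evict samples that have fallen out of the window.
        while self.samples and self.samples[0][0] < timestamp_s - self.window_s:
            self.samples.popleft()

    def mean_score(self):
        if not self.samples:
            return 0.0
        return sum(s for _, s in self.samples) / len(self.samples)

def graduated_response(mean_score, imminent_harm=False):
    """Map a windowed score to an action; hard shutdown only on imminent harm."""
    if imminent_harm:
        return "shutdown"
    if mean_score >= 0.9:   # assumed cut-offs, tune on your own data
        return "pause"
    if mean_score >= 0.7:
        return "blur"
    if mean_score >= 0.5:
        return "age_gate"
    return "none"
```

Averaging over the window trades a little reaction time for fewer single-frame false positives; a max or a count of frames above threshold are reasonable alternatives.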

For synthetic media and manipulated content, the Partnership on AI’s 2023 guidance lays out disclosure and responsibility patterns that remain useful: PAI Responsible Practices for Synthetic Media. On deepfake detection capabilities and limits, a thorough 2024 survey is a solid technical primer: A 2024 deepfake detection survey.

Vertical playbooks

Because risk profiles vary, tailor thresholds, sampling, and escalation per vertical.

Marketplaces

- Counterfeit and prohibited items: image similarity against brand catalogs; OCR + NLP for prohibited claims (e.g., “Replica,” “FDA-approved” when false).

- Seller risk: account age, chargeback history, velocity of new listings, shipping geography mismatches.

- Review integrity: detect coordinated review spikes and sentiment anomalies.

Social and live platforms

- Harassment and brigading: detect mass-mention attacks, report floods, and cross-post coordination.

- Minor safety: stricter thresholds for nudity/sexual content when minors may be present; aggressive age-gating.

- Link spam and scams: reputation checks for domains, shorteners, and contact handles; quarantine first-time links.

Gaming

- Chat toxicity: symbol/leet normalization, context memory per player, escalating mutes.

- Voice comms: profanity and threats with short latency budgets; sample 5–10% of auto-clears.

- UGC skins/mods: image and file scans for IP violations, hate symbols, and malware links.
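
Symbol/leet normalization for chat can be sketched as a preprocessing step that runs before any lexicon or classifier. The substitution table below is a small assumed sample, not a complete mapping, and the approach is English-centric: it strips every character outside a–z, so other scripts need their own normalizer.

```python
import re

# Partial, illustrative mapping of common leet substitutions.
LEET = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a",
                      "5": "s", "7": "t", "@": "a", "$": "s"})

def normalize(text):
    """Lowercase, undo leet substitutions, and strip obfuscating separators."""
    text = text.lower().translate(LEET)
    text = re.sub(r"[^a-z\s]", "", text)        # drop dots, asterisks, etc.
    text = re.sub(r"(.)\1{2,}", r"\1\1", text)  # collapse runs of 3+ to 2
    return re.sub(r"\s+", " ", text).strip()
```

Keep the original message alongside the normalized form: reviewers and appeals need to see what the player actually typed.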

Fintech and financial communities

- Scam and impersonation detection: entity resolution for names/handles, plus detection of phone/WhatsApp numbers in bios and inbound DMs.

- Transaction-adjacent content: stricter checks near P2P transfer prompts or off-platform payment nudges.

- Compliance holds: queue suspicious content for enhanced review with audit logs preserved for 7+ years per policy.
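
One way to make preserved audit logs tamper-evident is a hash chain, where each entry commits to the one before it. This is a sketch under stated assumptions: the entry fields are hypothetical, the log lives in memory for illustration, and a hash chain only detects edits (it does not prevent them), so real deployments typically pair it with write-once storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor

def _entry_hash(prev_hash, decision):
    payload = json.dumps(decision, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

def append_entry(log, decision):
    """Append a decision dict, chaining it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"decision": decision, "prev_hash": prev_hash,
                "hash": _entry_hash(prev_hash, decision)})

def verify(log):
    """Return True if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["decision"]):
            return False
        prev_hash = entry["hash"]
    return True
```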

Measurement and governance that regulators expect

Define and monitor these metrics from day one:

- Quality: precision, recall, FPR/FNR per class and modality; appeal sustain rate; reviewer agreement.

- Speed: p50/p95/p99 latency by queue; time-to-action for incidents; backlog age.

- Cost: cost per decision, cost per 1,000 items, reviewer minutes per decision; automation coverage %.

- Fairness and bias: performance by language/locale and user cohort; disparity flags.
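
The quality and fairness metrics above reduce to a few ratios over confusion-matrix counts. The sketch below assumes you already have per-class or per-locale counts; the 1.5x disparity ratio and the choice of a reference locale are illustrative assumptions, not regulatory thresholds.

```python
def rates(tp, fp, tn, fn):
    """Precision, recall, FPR, and FNR from confusion-matrix counts."""
    def ratio(num, den):
        return num / den if den else 0.0
    return {
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "fpr": ratio(fp, fp + tn),
        "fnr": ratio(fn, fn + tp),
    }

def disparity_flags(fpr_by_locale, reference, max_ratio=1.5):
    """Locales whose false positive rate exceeds max_ratio x the reference's."""
    limit = fpr_by_locale[reference] * max_ratio
    return sorted(loc for loc, fpr in fpr_by_locale.items() if fpr > limit)
```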

Regulatory anchors:

- The European Commission’s DSA overview (2024–2025) highlights risk assessments, mitigation, and transparency reports. See: EU DSA risk management duties.

- Ofcom’s Online Safety Act duties (2024–2025 guidance) emphasize proportionate systems and children’s safety: Ofcom Online Safety duties overview.

- NIST’s AI RMF 1.0 (2023) offers a shared language for governance functions like Map, Measure, Manage: NIST AI RMF 1.0.

- The U.S. FTC’s 2024–2025 materials warn against overstated AI claims and deceptive ad practices; align your communications and appeals UX accordingly: FTC business guidance on AI claims.

Add the documents above to your internal compliance wiki; you will cite them in policy pages and transparency reports.

Failure modes and how to fix them

- Over-removal (false positives). Countermeasure: conservative thresholds; post-enforcement sampling; easy appeals; precision-weighted training.

- Under-removal (false negatives). Countermeasure: active learning on near-misses; red-team adversarial content; raise recall on high-severity classes.

- Bias across languages/locales. Countermeasure: per-locale eval sets; human review in-language; ensure comparable error rates.

- Adversarial attacks. Countermeasure: ensemble models; input randomization; hash lists for known bad assets; rate limits.

- Novelty and drift. Countermeasure: weekly taxonomy review; trend mining from user reports; canary deployment for new models.

- Reviewer burnout. Countermeasure: rotate categories, show policy snippets, provide counseling resources, and blur or grayscale images by default with click-to-reveal.

In practice, human-in-the-loop systems tend to outperform fully automated pipelines on contested content. Research and industry experience point the same way: human-AI collaboration reduces extreme errors and stabilizes outcomes at scale, so structure your queues to exploit that.

Build vs. buy: decision framework and checklist

When choosing between building your own stack and working with vendors, evaluate the following:

- Latency and throughput. Can you meet p95 ≤ 150 ms for livestream checks, and handle burst scaling during events?

- Modality coverage. Text, images, audio, video, live streams—with OCR/ASR and cross-signal fusion.

- Language coverage. Do you cover your top 10 locales with comparable accuracy? How do you handle code-switching?

- Data gravity and privacy. Can you keep data in-region (EU/US/APAC), support deletion requests, and minimize retention?

- Tooling for reviewers. Evidence panes, hotkeys, inter-rater analytics, and appeal workflows built-in.

- Governance. Audit logs, versioned policies, risk assessments, transparency reporting.

- Total cost of ownership. Model training/inference, infra, MLOps, reviewer tooling, staffing, 24/7 coverage.

If you evaluate vendors, include a short list with clear fit criteria. For multi-modal, low-latency, globally deployable platforms, one example to consider is DeepCleer. Place vendors under the same scrutiny as your internal build: benchmark on your gold data, measure p95 latency, and run a 4–6 week shadow pilot before going live.

Quick-start configurations that work

- New social app (English-first, images + short video):

  - Phase 0–1 in 4 weeks: explicit nudity and weapons first; OCR on thumbnails; auto-block high-confidence detections and sample 10% of other cases.

  - Add a harassment text model in week 5; add live pre-stream checks in week 6.

  - Targets: precision ≥ 0.96 on explicit nudity; p95 ≤ 120 ms on stream checks.

- Marketplace:

  - Start with prohibited items and counterfeit detection; set per-country lists.

  - OCR + NLP for claims; image similarity against brand references.

  - Targets: recall ≥ 0.92 on prohibited items; false positive rate ≤ 1.5% to avoid seller frustration.

- Gaming platform (voice + chat):

  - Low-latency ASR + toxicity detection with escalating mutes.

  - Sample 5% of auto-clears; review appeals within 24 hours.

  - Targets: p95 latency ≤ 150 ms for voice; reduce repeat offender rate by 30% in 90 days.

30/60/90-day rollout plan

- Day 0–30: Risk register, gold data, pilot one high-severity class in one modality and one locale; shadow mode; baseline metrics.

- Day 31–60: Expand to 3–5 classes and two modalities; implement appeals; publish a plain-language policy page and user notices.

- Day 61–90: Optimize thresholds, add livestream checks, ship transparency metrics, and conduct your first formal risk assessment.

What “easy” really means in 2025

“Easy” isn’t zero effort—it’s removing ambiguity with proven patterns. Start small, measure relentlessly, and scale what works. With a risk-based taxonomy, multi-modal pipelines, human-in-the-loop escalation, and strong governance, AI automation turns moderation from perpetual fire-fighting into an operational system you can trust.

And remember: your best safety system is the one you actually run every day. Start with one class, one modality, and ship the pilot this month.