
AI Content Moderation Software Checklist for 2025

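When you choose AI content moderation software, focus on these essentials: detection accuracy, coverage of your content types and languages, customization, integration, scalability, security and compliance, ease of use, human oversight, and vendor support and pricing.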

Human-in-the-Loop (HITL) approaches help you balance AI automation with careful human oversight, keeping moderation fair and ethical. Experts note that AI works fast, but humans need to step in for difficult decisions to protect user rights.

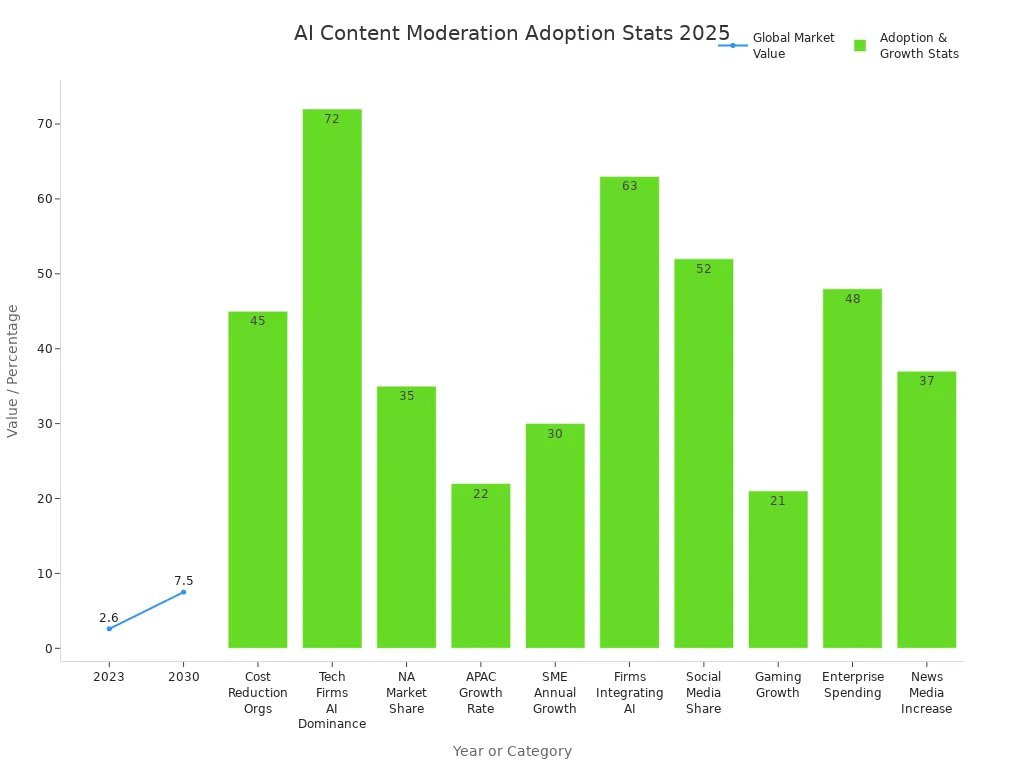

AI Content Moderation Adoption Stats 2025

Content moderation services now see rapid adoption. The software market is projected to reach $7.5 billion by 2030 as more companies seek advanced features.

When you choose AI content moderation, you want high accuracy. Good content moderation tools use AI and machine learning to spot harmful or inappropriate content such as hate speech, violence, or spam. Their performance is measured with metrics such as precision, recall, and F1 score. Precision is the share of flagged items that are actually harmful, so high precision means few false alarms. Recall is the share of all harmful items that the system catches. The F1 score is the harmonic mean of precision and recall, giving you one balanced number.

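For example, Facebook reported that its AI systems, helped by its RIO (Reinforcement Integrity Optimizer) model, proactively detected 94.7% of the hate speech it removed. That figure is a proactive detection rate (the share of removed content that AI found before users reported it), not recall over all hate speech on the platform. Numbers like this show that leading AI-driven moderation can catch most harmful content, but you need to check exactly what each figure measures when you compare automated content moderation options. High accuracy keeps your platform safe and helps users trust your service.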

Tip: Always compare the precision, recall, and F1 score of different AI content moderation tools. These numbers show how well each system works.
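To see how these metrics relate, here is a minimal Python sketch that computes them from a labelled test sample. It assumes you have already compared a tool's decisions against human labels and counted true positives, false positives, and false negatives; the counts in the example are made up.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts from comparing a tool's decisions with human-labelled ground truth."""
    true_positives: int   # harmful items the tool flagged
    false_positives: int  # safe items the tool flagged by mistake
    false_negatives: int  # harmful items the tool missed


def precision(c: ConfusionCounts) -> float:
    """Share of flagged items that are actually harmful."""
    flagged = c.true_positives + c.false_positives
    return c.true_positives / flagged if flagged else 0.0


def recall(c: ConfusionCounts) -> float:
    """Share of harmful items that the tool caught."""
    harmful = c.true_positives + c.false_negatives
    return c.true_positives / harmful if harmful else 0.0


def f1_score(c: ConfusionCounts) -> float:
    """Harmonic mean of precision and recall."""
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if (p + r) else 0.0


if __name__ == "__main__":
    # Hypothetical evaluation: 120 harmful items in a labelled sample;
    # the tool flagged 100 items, 90 of them correctly.
    counts = ConfusionCounts(true_positives=90, false_positives=10, false_negatives=30)
    print(f"precision={precision(counts):.2f} recall={recall(counts):.2f} f1={f1_score(counts):.2f}")
    # prints: precision=0.90 recall=0.75 f1=0.82
```

Here the tool looks precise (few false alarms) but misses a quarter of the harmful items, which the F1 score of 0.82 reflects.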

To keep up with new threats, AI and machine learning models must improve continuously. Platforms update their moderation models and policies regularly, and human feedback helps the AI learn from mistakes and adapt to new types of content.

You want your content moderation to avoid mistakes. A false positive happens when the system flags safe content as harmful. A high false positive rate can frustrate users and block good content. Common causes include:

You should also watch the false negative rate. This measures how often harmful content slips through. Balancing both rates is key for effective moderation. Regular updates and human review help lower both the false positive rate and the false negative rate, making your content moderation more reliable.
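The trade-off between the two rates usually comes from the score threshold at which a tool flags content. The Python sketch below, using made-up scores and labels, shows that raising the threshold lowers the false positive rate but lets more harmful content through. The function name and data are illustrative, not any vendor's API.

```python
from typing import Sequence, Tuple


def error_rates(scores: Sequence[float], labels: Sequence[bool], threshold: float) -> Tuple[float, float]:
    """Return (false_positive_rate, false_negative_rate) when items scoring >= threshold are flagged.

    labels[i] is True if item i is actually harmful, e.g. as confirmed by a human reviewer.
    """
    false_pos = sum(1 for s, harmful in zip(scores, labels) if s >= threshold and not harmful)
    false_neg = sum(1 for s, harmful in zip(scores, labels) if s < threshold and harmful)
    safe_total = sum(1 for harmful in labels if not harmful)
    harmful_total = sum(1 for harmful in labels if harmful)
    fpr = false_pos / safe_total if safe_total else 0.0
    fnr = false_neg / harmful_total if harmful_total else 0.0
    return fpr, fnr


if __name__ == "__main__":
    # Hypothetical model scores and human labels for six items.
    scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
    labels = [True, True, False, True, False, False]
    for t in (0.3, 0.5, 0.7):
        fpr, fnr = error_rates(scores, labels, t)
        print(f"threshold={t} FPR={fpr:.2f} FNR={fnr:.2f}")
```

Running it shows the false positive rate falling from 0.67 to 0.00 as the threshold rises from 0.3 to 0.7, while the false negative rate rises from 0.00 to 0.33.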

When you choose content moderation software, you need to check if it covers all types of user-generated content. Most platforms today handle text, images, and videos. Each type brings its own challenges for moderation.

Note: Video content is the hardest to moderate because it mixes audio, visuals, and text. This makes it easy for harmful content to slip through if the software is not advanced.

You want content moderation software that adapts to new threats and keeps up with the growing volume of user-generated content. Some vendors report very high accuracy; DeepCleer, for example, claims up to 99.9% accuracy and says its models learn from human feedback.

User-generated content comes from all over the world, so your content moderation software must support many languages to keep your platform safe and fair. Many modern tools can detect and moderate content in more than 50 languages, which helps you manage user-generated content in global communities, online learning, and social media.

| Aspect | Details |
|---|---|
| Multilingual Support | Over 61% of new content moderation products include support for multiple languages. |
| Regional Growth | Asia-Pacific leads in user-generated content moderation, with a focus on local languages. |
| Hybrid Models | 70% of platforms use both AI and human review for better results. |

Platforms like TraceGPT and Clarifai offer strong multilingual support. They help you moderate user-generated content in rare languages and dialects. This is important as more users join from different regions. Your software should also handle cultural differences and local rules. This keeps your content moderation fair and effective everywhere.
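One way to handle languages that your models do not cover well is to route by language and send unsupported languages to human reviewers who read them. This is a minimal sketch under stated assumptions: the language code comes from a separate detection step (not shown), the per-language classifiers are stand-ins, and the 0.8 flag threshold is arbitrary.

```python
from typing import Callable, Dict

# A classifier takes text and returns a harm score between 0 and 1.
Classifier = Callable[[str], float]


def route_by_language(
    text: str,
    lang: str,
    classifiers: Dict[str, Classifier],
    flag_at: float = 0.8,
) -> str:
    """Score text with a model for its language, or queue it for a human who reads that language.

    `lang` is assumed to come from an upstream language-detection step.
    """
    model = classifiers.get(lang)
    if model is None:
        # No model for this language or dialect yet: fall back to regional human review.
        return f"human_review:{lang}"
    return "flag" if model(text) >= flag_at else "allow"


if __name__ == "__main__":
    # Stand-in models for illustration only; real ones would be trained per language.
    models = {
        "en": lambda text: 0.9 if "scam" in text.lower() else 0.1,
        "es": lambda text: 0.9 if "estafa" in text.lower() else 0.1,
    }
    print(route_by_language("Total scam, avoid", "en", models))  # flag
    print(route_by_language("Buen producto", "es", models))      # allow
    print(route_by_language("Habari za asubuhi", "sw", models))  # human_review:sw
```

The fallback matters more than the happy path: content in rare languages and dialects still gets reviewed instead of being silently allowed.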

You need content moderation software that adapts to your platform’s unique needs. AI-powered content moderation tools let you set your own rules for what is allowed or blocked. This customization helps you match your moderation to your community’s values. Many top software options, like DeepCleer Intelligent Audit Platform, offer dashboards where you can create custom rule sequences. You can decide when the system should flag content or send it to a human moderator. These AI-powered content moderation solutions keep learning and adjusting, so your rules stay effective as new types of content appear. This fine-grained control means your team can respond quickly to new challenges.
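A custom rule sequence can be as simple as an ordered list of thresholds on the AI model's category scores. The sketch below is hypothetical: the `Rule` class, category names, and thresholds are illustrative and do not reflect DeepCleer's or any other vendor's rule format.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Rule:
    category: str     # label from the AI model, e.g. "hate_speech"
    min_score: float  # the rule fires when the model's score is at least this
    action: str       # e.g. "block" or "human_review"


def apply_rules(scores: Dict[str, float], rules: List[Rule], default: str = "allow") -> str:
    """Evaluate rules in order; the first rule whose threshold is met decides the action."""
    for rule in rules:
        if scores.get(rule.category, 0.0) >= rule.min_score:
            return rule.action
    return default


# A hypothetical community's rule sequence; stricter rules come first.
COMMUNITY_RULES = [
    Rule("child_safety", 0.30, "block"),
    Rule("hate_speech", 0.90, "block"),
    Rule("hate_speech", 0.60, "human_review"),
    Rule("spam", 0.95, "block"),
]

if __name__ == "__main__":
    print(apply_rules({"hate_speech": 0.72}, COMMUNITY_RULES))               # human_review
    print(apply_rules({"hate_speech": 0.95, "spam": 0.10}, COMMUNITY_RULES))  # block
    print(apply_rules({"spam": 0.50}, COMMUNITY_RULES))                      # allow
```

Because order decides the outcome, a low threshold on a sensitive category such as child safety can block content that other rules would only send for review.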

Tip: Customization in AI-powered content moderation lets you enforce your own policies, not just generic rules.

AI-powered content moderation works best when you can adjust how tasks flow. Flexible workflows let you split moderation into separate tasks, such as checking for violence, child safety, or harassment, and assign each task to moderators who feel comfortable with it. When moderators can opt out of certain workflows, they feel more supported and less stressed, which reduces burnout and builds stronger teams. As a result, your moderation stays efficient and reliable. With software that supports these flexible workflows, you can handle all types of content and keep your platform safe while protecting your team's mental health.
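Opt-in workflows can be modeled by having each moderator list the categories they are willing to review. This minimal sketch, with hypothetical names and categories, assigns each task to the least-busy moderator who opted in and leaves it unassigned if nobody did.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Moderator:
    name: str
    opted_in: Set[str]  # workflows this person has chosen to handle
    queue: List[str] = field(default_factory=list)


def assign(task_id: str, category: str, team: List[Moderator]) -> Optional[Moderator]:
    """Give a task to the least-busy moderator who opted into its workflow."""
    eligible = [m for m in team if category in m.opted_in]
    if not eligible:
        return None  # nobody opted in: escalate to a team lead instead of forcing it on someone
    chosen = min(eligible, key=lambda m: len(m.queue))  # ties go to the first in the list
    chosen.queue.append(task_id)
    return chosen


if __name__ == "__main__":
    team = [
        Moderator("ana", {"violence", "harassment"}),
        Moderator("ben", {"harassment"}),
        Moderator("chen", {"child_safety"}),
    ]
    for task, category in [("t1", "harassment"), ("t2", "harassment"), ("t3", "violence"), ("t4", "self_harm")]:
        who = assign(task, category, team)
        print(task, who.name if who else "unassigned")
    # prints: t1 ana, t2 ben, t3 ana, t4 unassigned (one per line)
```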

AI-powered content moderation gives you the tools to adjust both rules and workflows. You can keep up with new content trends and protect your users with software that grows with your needs.

You want your content moderation software to connect easily with your current systems. Good API connections help you automate tasks and save time. Leading vendors offer different API approaches. Some focus on seamless, developer-friendly APIs that support text, image, video, and audio. Others provide APIs as an extra feature, with more focus on human moderation. You should check if the API lets you create custom rules or models. This helps you match the moderation process to your needs.

| Vendor Type | API Integration Approach | Supported Content Types | Customization Options |
|---|---|---|---|
| API-first | Seamless, developer-friendly | Text, image, video, audio | Custom rules, custom models |
| Human-centric | API available, human-focused | Text, images | Limited customization |
| Enterprise-focused | Flexible API, advanced features | Text, image, audio | Advanced AI, custom models |
| Performance-oriented | Developer API, no dashboard | Diverse moderation classes | Custom rule development |
| Hybrid with control center | API plus dashboard | Text, image, video | Custom rules, AI scoring |
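To give a sense of what an API-first integration involves, here is a minimal Python client built only on the standard library. The endpoint URL, request fields (`content_type`, `content`, `rules_id`), and response fields (`decision`, `scores`) are all assumptions for illustration; every vendor defines its own, so check the vendor's API documentation.

```python
import json
import urllib.request
from typing import Dict, Tuple

# Hypothetical endpoint; replace with the URL from your vendor's documentation.
API_URL = "https://moderation.example.com/v1/check"


def build_request(text: str, api_key: str, rules_id: str = "default") -> urllib.request.Request:
    """Build a POST request asking the (hypothetical) API to moderate one text item."""
    payload = {"content_type": "text", "content": text, "rules_id": rules_id}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def parse_response(body: bytes) -> Tuple[str, Dict[str, float]]:
    """Extract the decision and per-category scores from the (hypothetical) JSON response."""
    data = json.loads(body)
    return data["decision"], data.get("scores", {})


def moderate(text: str, api_key: str) -> Tuple[str, Dict[str, float]]:
    """Send one item and return (decision, scores); raises on network or HTTP errors."""
    with urllib.request.urlopen(build_request(text, api_key), timeout=10) as resp:
        return parse_response(resp.read())
```

Keeping request building and response parsing separate from the network call makes it easy to test your integration without calling the vendor.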

Tip: Choose an API that matches your workflow. Look for clear documentation, strong security, and support for the content types you need.

Your content moderation software should work with the platforms you use most. Social media sites like Facebook, Instagram, LinkedIn, and Twitter are the main focus for many tools. Some solutions also support online communities, e-commerce, gaming, and even AR environments. You need to check if the software fits your platform and can handle your content volume.

Integration can be complex. You may face challenges like algorithmic bias, context understanding, and security risks. You must combine AI with human moderation for best results. Always review how the software handles updates, audits, and privacy. Strong integration keeps your content moderation reliable and secure.

You need content moderation software that can handle millions of posts, images, and videos every day. Some AI systems screen content at upload time, so likely violations can be caught before they are published. This keeps your platform safer and improves efficiency and speed. Many platforms use a hybrid model: AI scans most content quickly, then sends tricky cases to human moderators. This balance gives you both speed and accuracy.
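The hybrid model also lets you estimate staffing. If AI handles most items and escalates only a fraction, the number of human reviewers you need scales with that fraction. The figures in this sketch (daily volume, escalation rate, and reviews per moderator per day) are hypothetical.

```python
import math


def reviewers_needed(daily_items: int, escalation_rate: float, reviews_per_person_per_day: int) -> int:
    """Estimate how many human moderators a hybrid AI + human setup needs per day."""
    escalated = daily_items * escalation_rate  # items the AI sends to humans
    return math.ceil(escalated / reviews_per_person_per_day)


if __name__ == "__main__":
    # Hypothetical: 2 million items a day, 2% escalated, 800 reviews per moderator per day.
    print(reviewers_needed(2_000_000, 0.02, 800))  # 50
```

With these inputs, 40,000 items a day reach humans, which needs 50 moderators; halving the escalation rate roughly halves that number.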

You face challenges like algorithmic bias and the need to understand different types of content. Not every moderation task fits automation, but core workflows benefit from these new tools.

You must choose where your content moderation runs: in the cloud or on your own servers. Cloud-based solutions give you fast setup and easy scaling. You avoid high upfront costs and get updates, security, and maintenance from your provider. Cloud systems let you access moderation tools from anywhere, which helps global teams work together. However, you depend on third-party providers for data security and may have less control over how AI models work.

On-premises solutions give you more control over your data and AI models. You keep sensitive data local, which is important for privacy and regulated industries. You can customize your moderation system to fit your needs. But you face higher costs for hardware and software. Scaling up takes more time and money, and you must handle updates and security yourself.

Some companies use a hybrid approach. They keep sensitive data on-premises but use the cloud for extra speed and scalability. This gives you the best of both worlds.
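A hybrid deployment often comes down to a routing decision based on where content comes from. This sketch assumes you can tag each item with its source and that you run one model on-premises and another in the cloud; the class, field, and source names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Tuple

Scorer = Callable[[str], float]  # returns a harm score between 0 and 1


@dataclass(frozen=True)
class HybridDeployment:
    on_prem: Scorer                    # model running on your own servers
    cloud: Scorer                      # model hosted by a cloud provider
    sensitive_sources: FrozenSet[str]  # sources whose data must stay in-house

    def score(self, text: str, source: str) -> Tuple[str, float]:
        """Return which deployment handled the item and the score it produced."""
        if source in self.sensitive_sources:
            return "on_prem", self.on_prem(text)
        return "cloud", self.cloud(text)


if __name__ == "__main__":
    deployment = HybridDeployment(
        on_prem=lambda text: 0.2,  # stand-in models for illustration
        cloud=lambda text: 0.7,
        sensitive_sources=frozenset({"private_messages", "health_forum"}),
    )
    print(deployment.score("hello", "private_messages"))  # ('on_prem', 0.2)
    print(deployment.score("hello", "public_feed"))       # ('cloud', 0.7)
```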

You need strong security to protect your users and your platform. Encryption keeps your data safe both in transit between systems and at rest on servers, so attackers who intercept or steal it cannot read private information. Some tools go further with techniques such as homomorphic encryption, which allows computation on encrypted data, and secure enclaves, which isolate data while it is being processed. These methods help protect data even if part of the system is compromised.

Here are some common security principles and techniques you should look for in content moderation software:

These features help you prevent leaks and keep your content moderation process safe. You also need to make sure your software follows privacy laws like GDPR and CCPA. This focus on data security helps you build trust with your users.

You must respect user privacy during every step of content moderation. Leading AI moderation tools process data responsibly and follow privacy laws. Some use Edge AI and federated learning to analyze content on users' own devices, so raw data does not always travel to a central server, which lowers privacy risks.
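Federated learning is commonly implemented with federated averaging (FedAvg): each device trains on its own data and sends back only model weights, which a server combines in a weighted average. The sketch below shows only that aggregation step, with weights as plain lists of floats; whether and how a given moderation vendor uses federated learning is something to confirm with them.

```python
from typing import List, Tuple


def federated_average(client_updates: List[Tuple[List[float], int]]) -> List[float]:
    """Combine locally trained weights, weighting each client by its number of examples.

    Each update is (weights, num_examples). Only weights leave the device, not user content.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples in any update")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


if __name__ == "__main__":
    # Two hypothetical devices: one trained on 100 examples, the other on 300.
    print(federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)]))  # [2.5, 3.5]
```

The device with more data pulls the global model further toward its own weights, which is the point of weighting by example count.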

You should look for software that:

When you use these practices, you show your users that you care about their privacy. You also help your team avoid unnecessary moderation and keep your platform fair. Good data security and privacy practices are key to successful content moderation.

You must make sure your content moderation software follows the latest rules. In the EU, the AI Act sets rules for how AI systems, including those used in content moderation, are built and used. The law covers both AI systems with a specific purpose and general-purpose AI. Here are some key points you should know:

Note: For AI systems classified as high-risk, the AI Act also requires record-keeping and a conformity (safety) assessment before the system is put into use.

You need clear reporting tools to show how your content moderation works. Many platforms now publish transparency reports, but these often lack detail. Most reports only give high-level numbers or general categories. They do not always explain why content was removed or how moderation decisions were made.
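More detailed reports start from logging each decision with the specific policy it cites and whether a human was involved. This hypothetical sketch aggregates such a log into summary figures; the field names and policy references are illustrative, not a regulatory format.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Decision:
    category: str    # policy area, e.g. "spam"
    action: str      # e.g. "removed", "restricted", "no_action"
    automated: bool  # True if no human reviewed the decision
    policy_ref: str  # the specific rule cited in the notice to the user


def transparency_summary(decisions: List[Decision]) -> Dict[str, object]:
    """Aggregate individual decisions into the figures a transparency report needs."""
    actioned = [d for d in decisions if d.action != "no_action"]
    return {
        "total_reviewed": len(decisions),
        "by_category_and_action": dict(Counter((d.category, d.action) for d in decisions)),
        "actions_by_policy": dict(Counter(d.policy_ref for d in actioned)),
        "automated_share": sum(d.automated for d in decisions) / len(decisions) if decisions else 0.0,
    }


if __name__ == "__main__":
    log = [
        Decision("spam", "removed", True, "spam-1.2"),
        Decision("spam", "removed", True, "spam-1.2"),
        Decision("harassment", "restricted", False, "harass-3.1"),
        Decision("harassment", "no_action", False, "harass-3.1"),
    ]
    print(transparency_summary(log))
```

Recording the cited rule and the human/automated split per decision is what lets a report explain why content was removed, not just how much.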

Tip: Good reporting tools help you build trust. They let users and regulators see how you keep your platform safe and fair.

You want content moderation software that makes your job easier. A good dashboard gives you clear information about user-generated content. You can see what the system flagged, check accuracy, and track trends. An intuitive analytics dashboard helps you measure efficiency and speed. You get quick access to important data, so you can make smart decisions fast.

Here is what organizations value most in user experience:

| Feature | Description |
|---|---|
| Accuracy | Tools have over 90% accuracy to minimize false positives and negatives, ensuring reliable content filtering. |
| Customization | Ability to tailor moderation algorithms to specific community guidelines and legal standards. |
| Integration | Seamless compatibility with existing marketing and social media technology stacks. |
| Scalability | Capacity to scale moderation efforts as the organization grows. |
| Data Analytics | Robust dashboards to monitor flagged content volume and accuracy, supporting effectiveness measurement. |
| Multilingual Support | Advanced NLP and machine learning enable moderation across multiple languages for global reach. |
| Hybrid Moderation | Combination of AI with human oversight to handle complex or nuanced cases effectively. |

You need ease of use in every part of the dashboard. Simple menus and clear alerts help you manage user-generated content without confusion. You can filter, search, and review flagged posts quickly. This saves time and keeps your platform safe for everyone.

You need strong training resources to get the most from your content moderation tools. Good training helps you and your team understand how to use the dashboard and review user-generated content. Many platforms offer step-by-step guides, video tutorials, and live support. These resources make it easy for new moderators to learn the system.

Ease of use grows when you have access to helpful training. You can handle user-generated content faster and with fewer mistakes. Training also teaches you how to spot tricky cases and use the software’s features for better results. When you know how to use every tool, you boost efficiency and speed. This keeps your content moderation process strong as your platform grows.

You need a strong escalation process to keep your platform safe and fair. AI-driven content moderation services use advanced tools to scan and flag content quickly. These systems handle most low-risk cases on their own. When the AI finds content that is unclear or high-risk, it sends these cases to human moderators. This approach lets you act fast on clear violations and use human judgment for complex or sensitive issues.

Traditional content moderation relied on manual reviews and simple filters. These methods often missed context and worked slowly. Today, AI-driven systems use natural language processing and speech recognition. They understand intent and context in real time. This means you can set up multi-level escalation. Low-risk content gets handled automatically. High-confidence violations are removed right away. Ambiguous cases move to human review. You get better scalability and more consistent decisions.
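The multi-level escalation described above can be sketched as a function of the model's confidence score and the content category. The thresholds and the set of high-risk categories here are assumptions you would tune to your own policies and data.

```python
# Hypothetical categories that always get human eyes once they look suspicious.
HIGH_RISK = {"child_safety", "self_harm"}


def escalate(category: str, score: float, remove_at: float = 0.95, review_at: float = 0.50) -> str:
    """Map a model score to an action: auto-approve, auto-remove, or human review."""
    if category in HIGH_RISK and score >= review_at:
        return "human_review_priority"  # high-risk content goes to humans first
    if score >= remove_at:
        return "auto_remove"            # high-confidence violation
    if score >= review_at:
        return "human_review"           # ambiguous case
    return "auto_approve"               # low-risk content


if __name__ == "__main__":
    print(escalate("spam", 0.99))       # auto_remove
    print(escalate("spam", 0.70))       # human_review
    print(escalate("spam", 0.20))       # auto_approve
    print(escalate("self_harm", 0.99))  # human_review_priority
```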

Tip: Structured escalation and appeal processes improve the quality and transparency of your content moderation process.

You must support your moderators to keep them healthy and effective. AI content moderation services help by taking care of routine tasks. This lets your team focus on cases that need careful judgment. AI also reduces how much harmful or disturbing content your moderators see. This protects their mental health.

Human moderators play a key role. They handle complex cases that AI cannot solve and bring ethical standards and context to every decision. You also need feedback and appeal systems, which let moderators correct mistakes and improve the system over time. Legal rules matter here too: Article 22 of the GDPR gives people the right not to be subject to decisions based solely on automated processing that significantly affect them, and where such decisions are allowed, a right to obtain human intervention. Human oversight keeps your content moderation fair and accountable.
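Appeal outcomes are also useful data. This sketch, with hypothetical field names, computes how often humans reverse AI decisions in each category and turns those outcomes into corrected labels you could feed back into model training.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Appeal:
    content_id: str
    category: str
    original_action: str  # what the AI decided: "removed" or "allowed"
    overturned: bool      # True if a human reviewer reversed that decision


def overturn_rates(appeals: List[Appeal]) -> Dict[str, float]:
    """Share of appealed decisions that humans reversed, per category."""
    totals: Dict[str, int] = defaultdict(int)
    reversed_count: Dict[str, int] = defaultdict(int)
    for a in appeals:
        totals[a.category] += 1
        reversed_count[a.category] += a.overturned
    return {cat: reversed_count[cat] / totals[cat] for cat in totals}


def corrected_labels(appeals: List[Appeal]) -> List[Tuple[str, str, bool]]:
    """Turn human appeal outcomes into (content_id, category, is_violation) training labels."""
    labels = []
    for a in appeals:
        ai_said_violation = a.original_action == "removed"
        # A reversal flips the AI's call; an upheld decision confirms it.
        labels.append((a.content_id, a.category, ai_said_violation != a.overturned))
    return labels
```

A category with a high overturn rate is a good candidate for retraining or for a stricter human-review threshold.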

When you select a vendor for content moderation, you should look closely at their customer service. Good support helps you solve problems quickly and keeps your platform running smoothly. Many users report some common challenges with AI moderation tools:

You should check if the vendor offers 24/7 support, fast response times, and clear help resources. Some vendors provide dedicated account managers or live chat. Others may only offer email support or online documentation. Strong customer service helps you handle both technical issues and urgent moderation needs.

Vendors use different pricing models for their AI moderation services. You will see a range of options based on your needs and usage. Here is a table to help you compare common pricing features:

| Pricing Model Aspect | Description |
|---|---|
| Pricing Model Type | |
| Content Types | Image, Text, Voice, Video |
| Billing Units | Per 1,000 images, per 1,000 text tasks, per 1,000 minutes (voice/video) |
| Volume Tiers | Tiered pricing based on daily usage volumes; higher volumes receive lower per-unit costs |
| Scenario-Based Pricing | Charges based on business or risk scenarios; pricing can change if multiple scenarios are used |
| Pricing Differences by Upload | Different prices for cutting frames versus full video uploads |
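Tiered billing is easier to compare across vendors once you turn it into a cost estimate. The tiers and prices below are invented for illustration, and the sketch assumes graduated tiers, where each band of usage is billed at its own rate; some vendors instead apply a single lower rate to all units once you pass a threshold, so confirm which model a contract uses.

```python
import math
from typing import List, Tuple

# Hypothetical tiers: (upper bound of daily units in the tier, price per 1,000 units in USD).
TIERS: List[Tuple[float, float]] = [(100_000, 1.00), (1_000_000, 0.70), (math.inf, 0.50)]


def daily_cost(units: int, tiers: List[Tuple[float, float]] = TIERS) -> float:
    """Graduated pricing: each tier's units are billed at that tier's rate."""
    cost, lower = 0.0, 0
    for upper, price_per_thousand in tiers:
        if units <= lower:
            break
        units_in_tier = min(units, upper) - lower
        cost += units_in_tier / 1000 * price_per_thousand
        lower = upper
    return round(cost, 2)


if __name__ == "__main__":
    print(daily_cost(250_000))  # 205.0
```

With these example tiers, 250,000 units a day cost 100 USD for the first 100,000 units plus 105 USD for the next 150,000.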

You should ask vendors for clear pricing details. Some charge only for what you use, while others offer discounts for high volumes. Pricing may also change depending on the type of content you moderate. Always review the contract for hidden fees or extra charges.

Tip: Choose a vendor that matches your budget and offers flexible pricing as your needs grow.

You should match AI content moderation software to your business goals and future growth. Use the checklist to guide your team and compare vendors. Start by listing your content types and moderation needs. Next, research tools that fit your requirements. Request demos and test user interfaces for ease of use. Involve key stakeholders in every step.

Recommended steps for evaluation:

Careful planning helps you choose the right solution for safe and effective content moderation.

AI content moderation software uses machine learning to scan and review user content. You can use it to detect harmful text, images, or videos. The software helps you keep your platform safe and follow community guidelines.

You can lower false positives by updating your AI models often. Combine AI with human review for tricky cases. Adjust your moderation rules to fit your community. Regular feedback helps the system learn and improve accuracy.

Your users may speak many languages. Multilingual support lets you moderate content from around the world. This helps you protect all users and meet local laws. You also build trust with a global audience.

Yes, most AI moderation tools offer APIs. You can connect these APIs to your website, app, or social media platform. This makes it easy to automate moderation and keep your workflow smooth.