
Emerging Trends in Content Moderation Powered by AI

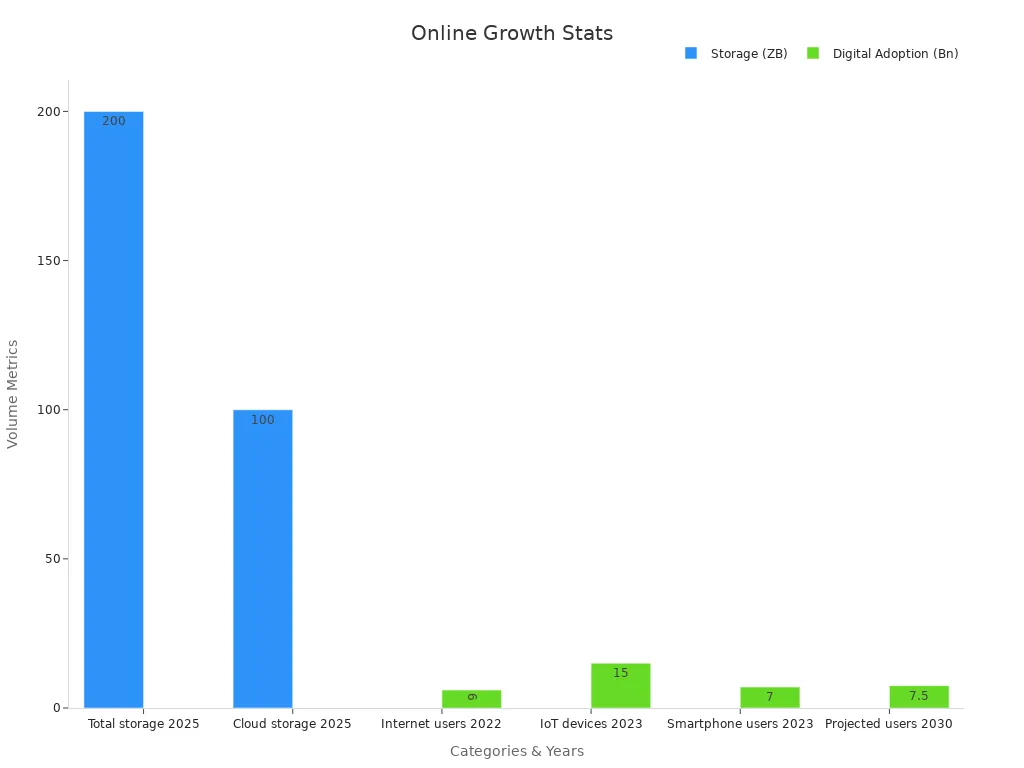

By 2025, the world is expected to store over 200 zettabytes of data, and about half of it will be kept in the cloud. This shows how fast online spaces are growing and how complex they are becoming.

Several important trends will shape online media in the coming years:

These new approaches help counter emerging threats and make online spaces safer.

Online platforms now receive far more content from users than before. People share millions of photos, videos, and posts every day. This fast growth makes it harder to keep people safe online, so content moderation is more important than ever.

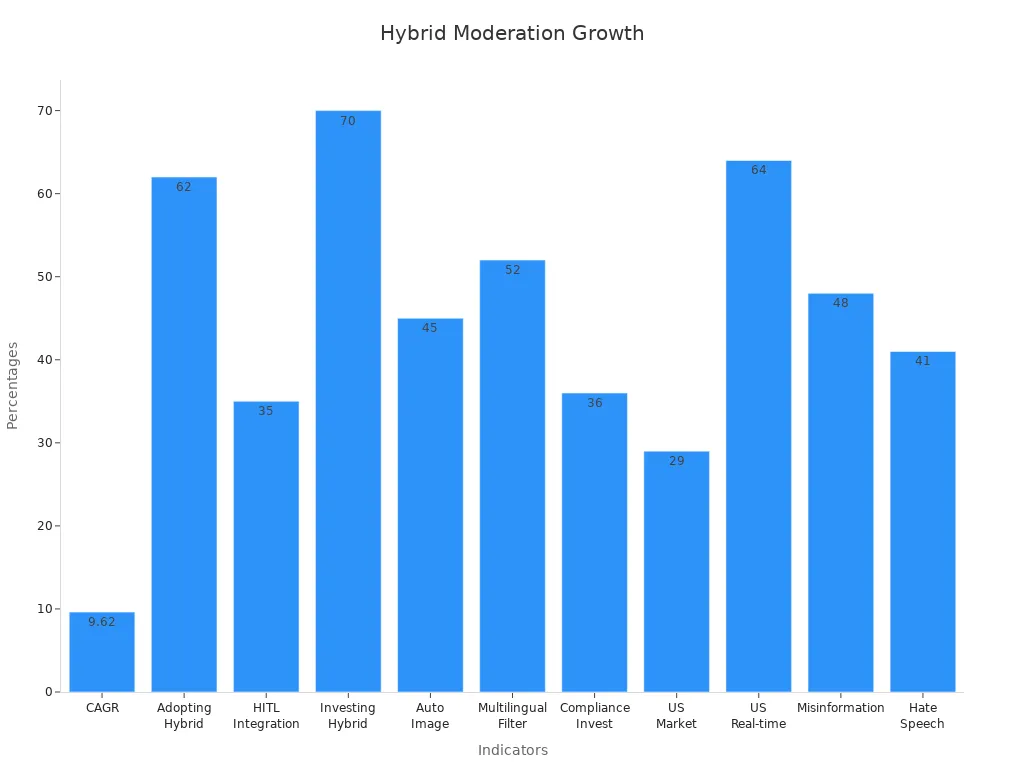

Social media sites, shopping sites, and forums all need good content moderation to protect users from harm. AI and machine learning can find and flag harmful content such as hate speech or scams. Human moderators handle tough cases and bring an understanding of culture and context. Working together, they help keep online spaces safe and fair.

AI-generated content brings new problems. Generative AI can create deepfakes, fake accounts, and fake images that are hard to spot. Platforms must also deal with bullying, catfishing, and grooming carried out with these tools. The FBI has warned that fake explicit content made with AI is being used to harm both children and adults. Misinformation also spreads faster when AI is used to make fake stories or pictures.

Companies like Meta delete millions of fake accounts to stop lies and scams. Twitch uses account verification to block hate raids and fake views.

Generative AI makes it easier for bad actors to trick others. Now, almost anyone can create scams or fake content without much skill. Studies show that people often cannot tell real content from AI-made content, which makes them easier to fool. Content moderation must adapt quickly to keep everyone safe online.

In 2025, AI is changing how websites handle content. Automated systems now do the first check on each post. These tools scan millions of posts, pictures, and videos every day. They use machine learning, natural language processing, and image recognition to find harmful or unsafe content. Social media sites need these tools because users share so much.

This makes moderation faster and more consistent. It also reduces the workload for human moderators, so they can focus on harder problems.

AI now handles 45% of image checks and supports 52% of filtering across different languages.

Human moderators are still needed for tricky decisions. They review content that AI marks as a possible problem. Automated tools sometimes miss jokes or hidden messages, and humans help catch these and keep decisions fair.
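To make this split of work concrete, here is a minimal Python sketch of confidence-based routing. It assumes some classifier (not shown) gives each post a harm score between 0 and 1. The thresholds, the `Post` class, and the `route` function are hypothetical and not taken from any specific platform.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real platforms tune these per policy and per language.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60

@dataclass
class Post:
    post_id: str
    text: str
    harm_score: float  # assumed output of a classifier, between 0 and 1

def route(post: Post) -> str:
    """Decide what happens to a post based on the model's confidence."""
    if post.harm_score >= REMOVE_THRESHOLD:
        return "remove"        # model is very confident: act automatically
    if post.harm_score >= REVIEW_THRESHOLD:
        return "human_review"  # uncertain: send to a moderator queue
    return "approve"           # low risk: publish without review

posts = [
    Post("p1", "Buy cheap followers now!!!", 0.97),
    Post("p2", "That joke absolutely killed me", 0.71),
    Post("p3", "Great photo from the trip", 0.05),
]
for p in posts:
    print(p.post_id, route(p))
```

The middle band is where humans add the most value: the second post contains a violent word used as a joke, so it goes to a person instead of being removed automatically.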

Studies show that people trust moderation more when humans are involved, especially in unclear cases. Combining AI and human review makes decisions fairer and helps everyone trust the system.

Image Source: unsplash


AI-driven tools have changed how websites review content. These tools use deep learning and natural language processing to scan text, pictures, and videos in real time. They can find harmful posts, hate speech, and fake news before people see them. Computer vision analyzes photos and videos, while natural language processing helps the system understand what words mean.

Multi-modal AI systems now analyze text, pictures, and even sound together. For example, speech recognition and video analysis can work together to spot unsafe content in live streams. Deep learning networks help find harmful content across all of these formats. These tools help keep users safe and make online spaces better.
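One simple way to combine signals is "late fusion": score each modality separately, then merge the scores. The sketch below assumes upstream models already produce a transcript score (from speech recognition plus a text classifier) and a frame score (from an image classifier) for each 30-second slice of a live stream. The weights, thresholds, and names are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    start_s: int             # segment start time in seconds
    transcript_score: float  # assumed score from speech-to-text + text classifier
    frame_score: float       # assumed score from an image classifier on video frames

# Hypothetical weights and thresholds for combining modalities.
WEIGHTS = {"transcript": 0.5, "frame": 0.5}
FLAG_THRESHOLD = 0.7
SINGLE_MODALITY_MAX = 0.9  # one very strong signal is enough on its own

def should_flag(seg: Segment) -> bool:
    """Flag a segment if the weighted average or any single modality is high."""
    combined = (WEIGHTS["transcript"] * seg.transcript_score
                + WEIGHTS["frame"] * seg.frame_score)
    strongest = max(seg.transcript_score, seg.frame_score)
    return combined >= FLAG_THRESHOLD or strongest >= SINGLE_MODALITY_MAX

stream = [Segment(0, 0.2, 0.1), Segment(30, 0.8, 0.75), Segment(60, 0.1, 0.95)]
flagged = [s.start_s for s in stream if should_flag(s)]
print(flagged)  # [30, 60]
```

The segment at 30 seconds is flagged because both signals are fairly high together, while the one at 60 seconds is flagged because the video alone is clearly unsafe even though the audio is not.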

Note: Websites must keep improving their tools. AI-made content is getting better at slipping past simple checks, so detection tools must improve quickly too.

Generative AI creates new problems for content moderation. AI-made content can look and sound as if real people made it, which makes it hard for both people and machines to tell what is real. Fake news spreads faster when bad actors use generative AI to make fake stories, pictures, or videos.

AI-powered moderation is like an arms race: as generative AI gets smarter, moderation tools must get better too. Websites now rely more on user feedback and appeals to fix mistakes and build trust.
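A simple way to use appeals as feedback is to track how often each rule's decisions are overturned. The sketch below uses made-up appeal records and a hypothetical 50% cutoff; a high overturn rate is only one possible signal that a model or rule needs retraining or a closer look.

```python
from collections import defaultdict

# Each record: (rule that triggered removal, whether the appeal overturned it).
# The data is made up for illustration.
appeals = [
    ("hate_speech", False),
    ("hate_speech", True),
    ("spam", False),
    ("spam", False),
    ("spam", False),
    ("nudity", True),
    ("nudity", True),
    ("nudity", False),
]

def overturn_rates(records):
    """Share of appealed decisions that were reversed, per rule."""
    totals = defaultdict(int)
    overturned = defaultdict(int)
    for rule, was_overturned in records:
        totals[rule] += 1
        if was_overturned:
            overturned[rule] += 1
    return {rule: overturned[rule] / totals[rule] for rule in totals}

rates = overturn_rates(appeals)
# Rules whose decisions are reversed more than half the time (hypothetical cutoff).
needs_attention = sorted(r for r, rate in rates.items() if rate > 0.5)
print(rates)
print(needs_attention)  # ['nudity']
```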

Context matters when reviewing content. AI tools now use context to understand what posts mean. They look at the words, the pictures, and even when something was posted to decide whether it breaks the rules. This helps prevent safe posts from being blocked by mistake.
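The toy sketch below shows how context can change the outcome for the same words. Real systems learn these patterns from data; here the risky-word list, the `community` label, and the `near_violent_event` timing signal are all hypothetical stand-ins for richer context features.

```python
import re
from dataclasses import dataclass

@dataclass
class Context:
    community: str            # e.g. "gaming" or "general" (hypothetical labels)
    near_violent_event: bool  # hypothetical timing signal: posted soon after a real attack

RISKY_TERMS = {"kill", "attack", "shoot"}  # illustrative list only

def decide(text: str, ctx: Context) -> str:
    """Return 'allow', 'review', or 'priority_review' using words plus context."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    if not words & RISKY_TERMS:
        return "allow"
    if ctx.community == "gaming" and not ctx.near_violent_event:
        return "allow"            # "I got a kill" is normal game talk
    if ctx.near_violent_event:
        return "priority_review"  # the same words are more worrying right after an attack
    return "review"               # unclear: let a human look

print(decide("I got a kill last round!", Context("gaming", False)))  # allow
print(decide("I will attack you", Context("general", False)))        # review
print(decide("I will attack you", Context("general", True)))         # priority_review
```

A keyword filter alone would treat all three posts the same way; adding context lets the harmless gaming post through and pushes the most worrying one to the front of the queue.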

Websites also use new tools to find coordinated groups of bots that spread fake news. These bots use AI-made content to trick people and avoid detection. By looking at context, websites can better protect users and keep online communities safe.
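One common signal of a coordinated bot group is many different accounts posting nearly the same message within a short time. The sketch below checks for that pattern. The 10-minute window, the three-account minimum, and the sample posts are assumptions for illustration; real detection combines many more signals, such as account age and network links.

```python
import re
from collections import defaultdict
from datetime import datetime, timedelta

def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse spaces so near-copies match."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())

def find_coordinated_groups(posts, window=timedelta(minutes=10), min_accounts=3):
    """Find texts posted by at least `min_accounts` distinct accounts within `window`."""
    by_text = defaultdict(list)
    for account, text, ts in posts:
        by_text[normalize(text)].append((ts, account))
    suspicious = {}
    for text, items in by_text.items():
        items.sort()  # oldest first
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans at most `window`.
            while items[end][0] - items[start][0] > window:
                start += 1
            accounts = {acct for _, acct in items[start:end + 1]}
            if len(accounts) >= min_accounts:
                suspicious[text] = sorted(accounts)
                break
    return suspicious

t0 = datetime(2025, 1, 1, 12, 0)
posts = [
    ("bot_a", "This miracle cure WORKS, share now!!", t0),
    ("bot_b", "this miracle cure works share now", t0 + timedelta(minutes=1)),
    ("bot_c", "This miracle cure works. Share now!", t0 + timedelta(minutes=3)),
    ("alice", "Lovely weather today", t0 + timedelta(minutes=2)),
    ("bob", "Lovely weather today!", t0 + timedelta(hours=2)),
]
print(find_coordinated_groups(posts))
# {'this miracle cure works share now': ['bot_a', 'bot_b', 'bot_c']}
```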

Tip: The future of content moderation will need smarter AI tools that understand context and adapt to new threats. Websites must keep updating their tools to stay ahead of bad actors.

AI-powered content moderation helps websites check millions of posts every day, far faster than people can. By one widely cited estimate, each person creates about 102 MB of data every minute. AI can analyze this data right away and flag harmful content before it spreads. This quick action keeps online spaces safer.

Websites use cloud services and distributed computing to help AI check more content at once. These tools let AI analyze many types of content together. The table below shows how AI makes checking faster:

With AI, websites can grow without hiring human moderators at the same pace. This helps fast-growing sites stay safe for everyone.

AI takes on repetitive and disturbing tasks, so people are less likely to feel overwhelmed. Studies show that when AI works alongside people, it can offer suggestions and support. This makes it easier for moderators to manage their work and feel better. AI blocks the worst content, so humans only see the hardest cases.

AI helps moderators stay healthy and do their jobs well.

By keeping people away from harmful things, AI lowers the chance of mental health problems for moderation teams.

AI tools make online communities safer and more enjoyable. They remove harmful posts quickly, so people can have better conversations. Studies say AI chatbots can answer customers 80% faster. AI also helps show users content they like, raising engagement by 25%. Sites using AI tools keep 33% more users.

Sites like Twitter use AI to find and remove harmful posts. This helps users feel safer and happier, so they are more likely to stay and take part.

AI systems can sometimes be unfair when reviewing posts. They learn from data that may not represent every group, so they might flag posts from minority users more often, or miss harmful posts aimed at certain groups. Toolkits like Aequitas and Fairlearn help find these problems, and developers use fairness-aware algorithms and audits to fix them. But fairness is not just about numbers; people’s choices matter too. Experts warn that biased data can make social problems worse. Diverse teams and clear rules help make AI fairer, but it is still hard.
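A basic fairness audit compares error rates across groups. Fairlearn's `MetricFrame` can compute metrics like this per group; the sketch below does the same calculation in plain Python so it runs without extra packages. The labels and the group attribute are made up for illustration. Here the false positive rate is the share of harmless posts that were wrongly flagged.

```python
from collections import defaultdict

def false_positive_rate_by_group(y_true, y_pred, groups):
    """FPR per group: share of harmless posts (label 0) wrongly flagged (prediction 1)."""
    negatives = defaultdict(int)
    false_pos = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:
            negatives[group] += 1
            if pred == 1:
                false_pos[group] += 1
    return {g: false_pos[g] / negatives[g] for g in negatives}

# Made-up data: 1 = harmful, 0 = harmless. "A" and "B" are hypothetical user groups.
y_true = [0, 0, 0, 0, 1, 0, 0, 0, 0, 1]
y_pred = [0, 0, 1, 0, 1, 1, 1, 0, 1, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = false_positive_rate_by_group(y_true, y_pred, groups)
print(rates)  # {'A': 0.25, 'B': 0.75}
gap = max(rates.values()) - min(rates.values())
print(gap)    # 0.5
```

In this example, harmless posts from group B are wrongly flagged three times as often as those from group A, which is exactly the kind of gap an audit is meant to surface.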

AI has trouble understanding jokes or hidden meanings. It often misses sarcasm or cultural references, which can lead to mistakes like removing safe posts or missing harmful ones. One study found that standard AI tools missed 40% of harmful posts. AI also works less well in languages other than English, where it is 30% less accurate. These problems show up on social media and in online games.

Using both AI and people works better. Human moderators can spot hidden meanings and make fair choices.

It is still hard to see how AI makes moderation decisions. Many users and groups do not understand how AI decides what to remove. Projects like Mozilla’s YouTube Regrets show that even experts struggle to learn how platforms work. Surveys suggest there are no good ways yet to explain AI decisions to everyone. Companies usually share details only when the law requires it, and some worry that sharing too much could let people game the system. Still, clear information helps people trust the platform and fix mistakes, so platforms should explain their decisions in ways everyone can understand.

Being more open helps people trust online platforms and keeps them honest.

Image Source: pexels


Brands need a good reputation to succeed online. Content moderation protects that reputation by blocking harmful posts, hate speech, and spam. This keeps users safe and helps the brand look good. Without good moderation, a brand's reputation can suffer quickly. For example, McDonald's ran into trouble with its #McDStories campaign in 2012: negative stories spread quickly because there was not enough moderation, and the brand drew a lot of negative attention.

Many top brands use both in-house teams and outside partners to review large volumes of user posts. The table below shows how some companies protect their reputation with content moderation:

Trust is very important for social media sites. Users want brands to follow rules and keep everyone safe. When brands explain how they moderate, users know what is okay to post. When users can appeal decisions, they see the brand as fair and honest.

When brands work hard on moderation, people trust them more. Users feel safe, and brands do not get into legal trouble. Social media sites that care about rules and fairness become leaders online.

Online communities need strong moderation to do well. Studies show that moderation changes how people act online. Good moderation stops harmful content and helps people behave better. It also lets people share their ideas safely. Both official moderators and volunteers are important. They do things like teach members, connect people, and set rules. In health groups, moderators and mentors work together to give correct information and help others. They act fast if someone breaks the rules, like muting or banning users who give unsafe advice.

Community moderation uses both automated tools and humans. Automated systems can block offensive words, hide spam, and blur explicit images. Behavior analysis tools spot accounts that act unusually, so moderators can step in early. These actions keep conversations safe and respectful. Transparency reports and clear rules help members trust each other. When everyone knows the rules, the community gets stronger.
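A plain word filter and a simple behavior check can be sketched in a few lines. The blocked-word list, the z-score cutoff, and the function names below are assumptions for illustration; production systems use curated lists, handle misspellings and look-alike characters, and rely on richer behavior signals than posting rate alone.

```python
import re
from statistics import mean, pstdev

BLOCKED_WORDS = {"badword1", "badword2"}  # placeholder list; real lists are curated per community

def mask_blocked_words(text: str) -> str:
    """Replace whole-word matches of blocked terms with asterisks."""
    def repl(match):
        word = match.group(0)
        return "*" * len(word) if word.lower() in BLOCKED_WORDS else word
    return re.sub(r"\b\w+\b", repl, text)

def unusual_accounts(posts_per_hour: dict[str, int], z_cutoff: float = 2.0) -> list[str]:
    """Flag accounts whose posting rate is far above the community average (z-score)."""
    rates = list(posts_per_hour.values())
    avg, spread = mean(rates), pstdev(rates)
    if spread == 0:
        return []  # everyone posts at the same rate: nothing stands out
    return sorted(a for a, r in posts_per_hour.items() if (r - avg) / spread > z_cutoff)

print(mask_blocked_words("This is badword1 text"))  # This is ******** text

accounts = {f"user{i}": 3 for i in range(10)}
accounts["spam_bot"] = 60
print(unusual_accounts(accounts))  # ['spam_bot']
```

Flagged accounts are best treated as leads for a moderator to check, not as automatic bans, since busy but legitimate members can also post a lot.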

Good communities follow best practices to stay safe and friendly. A dedicated moderation team means someone is always watching. Clear guidelines tell members what is expected. Moderators check the group several times a day and use set rules to decide when to delete posts or ban users. Tools help them work faster and keep things positive.
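"Set rules" for enforcement are often written as an escalation ladder, where repeat violations lead to stronger actions. The sketch below assumes a hypothetical three-step ladder (warn, 24-hour mute, ban) plus an immediate ban for severe violations, such as the unsafe health advice mentioned earlier; the step names and the in-memory counter are illustrative only.

```python
from collections import Counter

# Hypothetical escalation ladder: action taken on the Nth confirmed violation.
LADDER = {1: "warn", 2: "mute_24h"}
FINAL_ACTION = "ban"

strikes = Counter()  # in-memory strike count per user; a real system would persist this

def enforce(user: str, severe: bool = False) -> str:
    """Record a confirmed violation and return the action for this user."""
    strikes[user] += 1
    if severe:
        return FINAL_ACTION  # severe violations skip the ladder
    return LADDER.get(strikes[user], FINAL_ACTION)

actions = [enforce("dana") for _ in range(3)]
print(actions)  # ['warn', 'mute_24h', 'ban']
```

Writing the ladder down as data makes the rules easy to publish to members and applies them the same way every time.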

Big platforms like YouTube and Facebook remove lots of harmful content every day. This shows how important moderation is. As online communities grow, these best practices will help keep them safe, fair, and welcoming for all.

The future of content moderation will combine AI tools and people. This mix helps websites handle a growing volume of increasingly complex content. Brands and platforms need to change how they work. They should:

A fair and careful plan will help make online spaces safe and trusted.

AI-powered content moderation uses machine learning and automation to check posts, pictures, and videos online. These systems find harmful content very quickly, and human moderators review hard cases to make sure decisions are fair and correct.

AI learns from new data to handle new risks, such as deepfakes or scams. Teams update AI models often to keep up with new threats. This helps keep users safe and makes online spaces better.

Yes, AI can both check content and suggest things users might like. It looks at what users do and what they like. This helps make the site more fun and keeps people interested.

AI struggles with bias, context, and accuracy. Machines might not understand jokes or cultural hints, so people still need to review decisions to make sure rules are followed and trust is kept.

Strong moderation helps brands earn trust and protect their reputation. It keeps users safe, helps brands avoid legal trouble, and makes online communities better.