< BACK TO ALL BLOGS

Audio Moderation: Detect Toxic Speech in Seconds

Image Source: statics.mylandingpages.co

Real-time voice is now the most difficult surface to keep healthy. The margin for error is measured in milliseconds: if you can detect toxic speech and act in under a second, you prevent harassment from taking root and you protect users without disrupting normal conversation. This best-practice guide distills what consistently works in production: an end-to-end, low-latency pipeline, concrete latency budgets, accuracy and fairness guardrails, compliance-ready logging, and battle-tested troubleshooting.

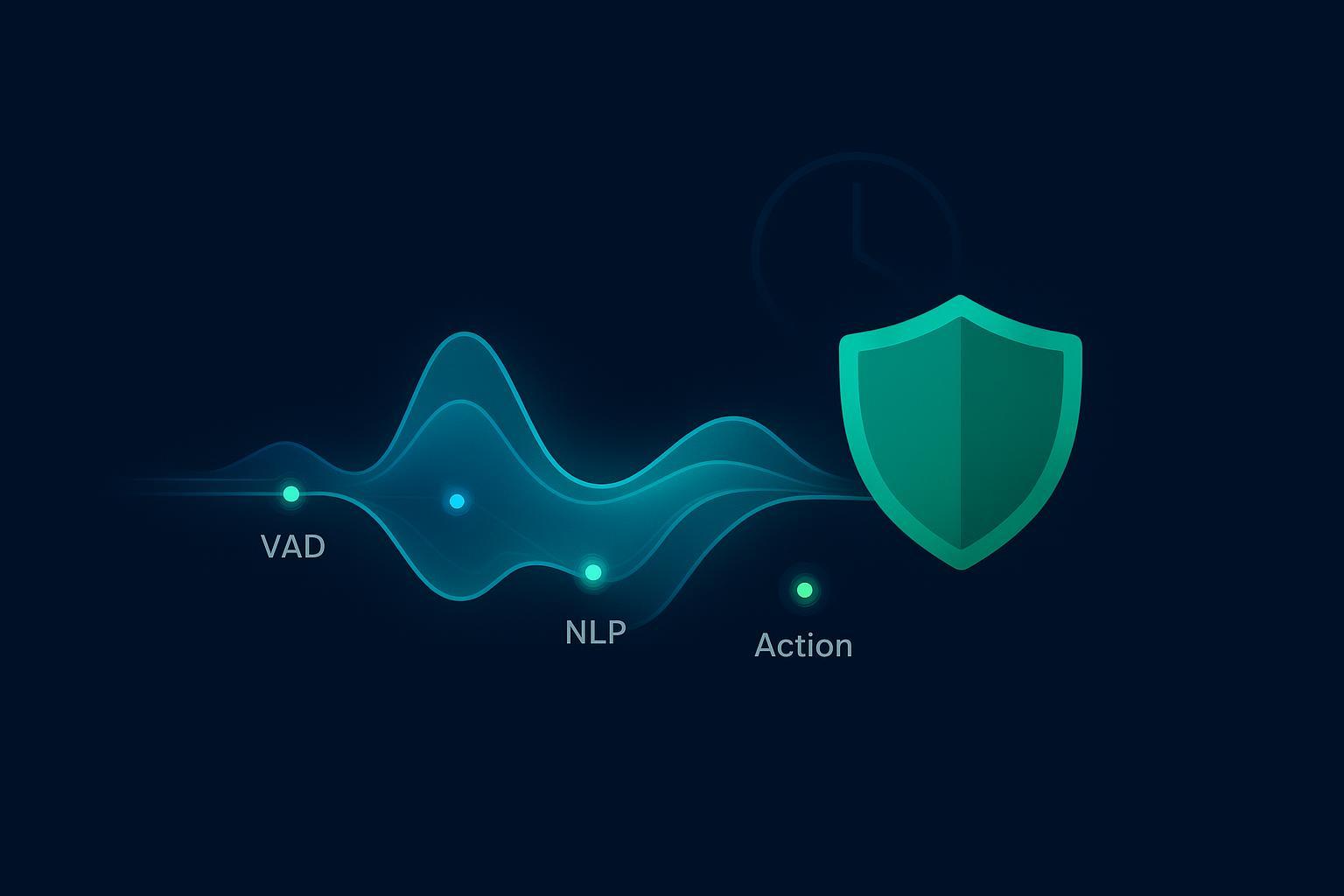

The end-to-end pipeline for sub‑second detection

What to build (modular, streaming-first): Capture → Voice Activity Detection (VAD) → Speaker Diarization → Streaming ASR (transcription) → Toxicity NLP/LLM → Severity Scoring → Action (mute/warn/escalate) → Logging & Appeals.

Latency budgets you can actually hit at scale (production targets):

- Capture → VAD: under 50 ms

- VAD → Diarization: under 50 ms (can overlap with ASR)

- Diarization → Streaming ASR: 200–400 ms chunking; emit partial tokens as confidence stabilizes

- ASR → Toxicity NLP/LLM: under 100 ms (token-streamed)

- Toxicity → Scoring: under 100 ms (lightweight classifier or efficient prompt)

- Scoring → Action: under 50 ms (policy engine + enforcement)

- End-to-end: aim for 300–500 ms on optimized stacks; treat sub-1s as the upper bound for global deployments

Why these numbers: tightly pipelined stacks in industry show sub-1s loops are realistic when you stream partial transcripts and overlap stages. For architectural context and practical ranges, see the GetStream engineering overview in A Complete Guide to Audio and Voice Moderation (2025), which describes streaming pipelines and real-time constraints, and the AWS case on Modulate’s ToxMod, which details event-driven queues and autoscaling for near real-time processing at scale. Evidence: GetStream’s 2025 audio moderation guide and AWS GameTech’s 2024 Modulate/ToxMod architecture blog.

Design moves that keep latency down without gutting accuracy:

- Stream everything. Start toxicity analysis on partial transcripts; don’t wait for full sentences.

- Push VAD and first-pass ASR to the edge to reduce round-trip time; quantize models (8/4-bit) where feasible.

- Use autoscaled GPU pools for NLP/LLM/scoring; cache hot prompts and policies to reduce time-to-first-token.

- Defer automated action when attribution is uncertain (e.g., diarization confidence < 90%); route to human.

Internal note for deeper scaling patterns across modalities: see this overview of real-time moderation APIs in DeepCleer’s blog index to understand cross-modal throttling, backpressure, and observability patterns.

Choose components with streaming metrics, not just accuracy slogans

Many teams over-index on batch accuracy benchmarks and are surprised by slow, brittle live behavior. Evaluate components with live streaming metrics and in-domain noise.

ASR (streaming transcription)

- Selection: Compare models on in-domain audio (gaming VOIP, livestreams, workplace calls). Track word error rate (WER), latency to first stable tokens, real-time factor (RTF), and robustness to accents/noise.

- Benchmarks: The Open ASR Leaderboard consolidates 60+ models with standardized WER and speed trade-offs; use it to shortlist and then validate on your audio. See the Hugging Face Open ASR Leaderboard (2025) and the Open ASR Leaderboard paper (2025) for methodology.

- Optimization: NVIDIA’s NeMo/Riva docs show how to accelerate streaming ASR profiles on GPUs and cut latency 10x with architectural changes; helpful when pushing sub-second loops. Reference the NVIDIA developer blog on accelerating ASR (2024).

- Vendors: AssemblyAI’s Universal-1 (2024) reports multilingual robustness; as with all vendor claims, validate in your domain: AssemblyAI Universal‑1 research brief (2024).

Practical targets: sub-1.0 real-time factor; first stable tokens within 300–700 ms depending on language/noise; explicit handling for code-switching and slang.

Speaker diarization (who spoke when)

- Goal: Correct attribution is critical for enforcing policy fairly. In noisy, overlapping speech, prioritize streaming-capable diarization with embeddings and attention tracking.

- What to watch: diarization error rate (DER) and Jaccard error rate (JER) on domain audio. AssemblyAI reports progress on short segments and noisy far-field, but validate these numbers on your own data: AssemblyAI diarization update (2025). For streaming profiles and deployment configs, see NVIDIA Riva’s ASR NIM support matrix (Sortformer, latest).

Policy tip: if diarization attribution confidence drops below a threshold (e.g., 0.9), avoid automatic punitive actions; warn the channel and escalate to human review.

VAD (speech vs. non-speech)

Robust VAD prevents flooding ASR with noise and saves compute.

- Lightweight, real-time candidates: MagicNet VAD (2024) shows strong real-time characteristics; SincQDR‑VAD (2025) reports noise-robust gains (AUROC/F2) in low SNR conditions. As always, test on your streams.

Implementation checklist:

- Benchmark all three—ASR, diarization, VAD—on your actual traffic. Log DER/JER, WER, and end-to-end latency.

- Add language ID for code-switching and route segments to language-specific toxicity models.

- Instrument per-stage time budgets and alerts when any percentile breaches the target (e.g., P95 ASR latency > 500 ms).

Toxicity detection: transcripts first, with audio cues for severity and explainability

What works in production today:

- Transcribe to text and run proven text toxicity classifiers; they’re mature and fast. Then enrich your decision with audio-side cues like prosody (shouting), aggressive acoustic events, and speaker context for severity.

- For regulators and appeals, explainable audio hate-speech methods that localize toxic frames can help moderators review faster and understand model rationale. See the 2024 research on frame-level rationales: Explainable audio hate speech (2024) and its SIGDIAL version: Audio hate speech explainability at SIGDIAL 2024.

- Prepare for synthetic voice abuse. A 2025 dataset, SynHate, targets hate in deepfake audio across 37 languages—useful for red-teaming and resilience: SynHate multilingual synthetic hate dataset (2025).

Practical guideposts:

- Keep the primary decisioning path fast and deterministic; attach audio cues as severity modifiers and reviewer aids.

- Localize clips to the exact frames for evidence bundles and appeals.

- Maintain per-language thresholds; slang and dialects can change toxicity expression.

Bias and fairness: calibrate, audit, and red‑team continuously

Bias doesn’t fix itself with more data; you need explicit controls.

- Threshold calibration with uncertainty control: Conformal prediction is a robust way to maintain error-rate guarantees across languages/accents—rejecting or escalating uncertain outputs instead of forcing a shaky decision. See the overview in TACL’s Conformal Prediction for NLP (2024).

- Active learning with expert multilingual QA: Prioritize labeling for edge cases (code-switching, dialects). Practical lessons are summarized in Toloka’s multilingual toxicity data practices (2025).

- Adversarial red-teaming: Generate accent/dialect perturbations, slang, and implicit toxicity using knowledge-graph prompts (e.g., MetaTox). Survey work in 2025 outlines effective multimodal and text strategies—see PeerJ Computer Science’s multimodal hate speech survey (2025).

- Transparent metrics: Disaggregate precision/recall by language and accent; publish uncertainty ranges; log all interventions and final outcomes for audits.

Policy reality: When unsure, prefer soft interventions (e.g., warning overlays, temporary mute) and human review pathways over hard bans; appeals data should inform threshold re-tuning.

Compliance 2025: DSA, GDPR, COPPA—build with audits in mind

You’ll be asked to demonstrate not just what you removed, but how your systems make decisions.

- EU Digital Services Act (DSA): For large platforms, transparency reporting is annual (biannual for VLOPs/VLOSEs). Disclose use of automated tools, provide Statements of Reasons (SOR) for each action, and enable user appeals. See the European Commission’s overview of harmonized transparency rules and the DSA transparency portal for SOR practices: EC’s DSA transparency overview (2024–2025) and EC news on harmonized reporting rules (2025).

- GDPR (audio as personal data): Establish a lawful basis (consent, contract, legitimate interests with a balancing test), minimize data, and define retention windows. Watch EDPB guidance on automated decision-making and potential biometric issues when voice is used for identification: EDPB official portal for GDPR guidance.

- COPPA (US, under‑13): Voice recordings are personal information. Obtain verifiable parental consent, provide parental access/deletion, and clear notices. See the FTC’s COPPA FAQs for voice recordings (current).

Implementation checklist for compliance readiness:

- Map data flows for raw audio, transcripts, embeddings; tag by jurisdiction.

- Render SORs with fields: action, rule violated, automated/human roles, evidence clips, and appeal path.

- Provide in-product appeal flows with SLAs; log reviewer and model actions.

- Define retention/rotation by risk class and region; document deletion processes.

For a concrete view of how a moderation provider frames service and privacy practices, review the relevant sections in DeepCleer’s service and privacy items and adapt to your policies and locales.

Troubleshooting: what fails in production and how to fix it

- Background noise and low SNR: Introduce adaptive noise suppression before ASR; deploy robust neural VAD; monitor false positive rises under noisy conditions. If P95 WER jumps by >5 points when SNR drops, consider noise-specific ASR profiles.

- Overlapping speakers and crosstalk: Use embedding-based streaming diarization; when attribution confidence is < 0.9, warn rather than punish and escalate for human review.

- Sarcasm, euphemisms, and coded language: Pair fast toxicity classifiers with an LLM-assisted context pass only on low-confidence segments; maintain a living slang lexicon refreshed from appeals and red-team output.

- Code-switching: Split by language ID and route to language-specific thresholds; track performance gaps in low-resource languages and prioritize labeling there.

- Synthetic/TTS voice abuse: Add spoofing/synthetic detection; verify against opt-in speaker profiles; escalate ambiguous cases.

- Latency regressions under load: Continuously profile each stage; auto-scale inference pools; reject or degrade gracefully when queues breach SLOs (e.g., downgrade to text-only severity when ASR or LLM backends are saturated).

Workflow example: real-time toxic speech detection with an off‑the‑shelf stack

Disclosure: The following example uses DeepCleer to illustrate an implementation we’ve deployed; vendor named to ensure transparency.

- Objective: Sub-second warnings and mutes in voice rooms, with audit-ready evidence clips and appeals.

- Stack sketch: Edge VAD + diarization; streaming ASR; text toxicity + audio cues; policy engine; action bus; logging.

- Steps you can replicate:

- Ingest RTP/WS streams; run edge VAD and emit 320 ms frames; attach speaker embeddings.

- Feed frames to streaming ASR; emit partial transcripts every ~250–400 ms with token confidences.

- Run a fast toxicity classifier as tokens arrive; if risk ≥ medium and diarization ≥ 0.9, page the policy engine.

- Policy engine computes severity with audio cues (e.g., high volume spike) and user history; decide warn vs. temp mute; clip 3–5 s around the segment for evidence.

- Log SOR fields and open an appeal ticket pre-filled with transcript and clip; surface to moderators.

In one implementation, we used DeepCleer for the toxicity and policy stages while keeping ASR/diarization pluggable. The key enablers were partial-token streaming, per-language thresholds, and a strict defer-to-human rule under attribution uncertainty.

Measuring impact and ROI: make interventions measurable

You won’t find many public, audited ROI figures for live audio moderation, so design your own measurement from day one.

Track these KPIs:

- Incident rate per hour (by severity)

- Time-to-intervention (P50/P95 from audio-in to action)

- False positive/negative rates by language/accent and by context (solo, group, stream)

- Share of sessions with warnings vs. mutes vs. bans

- Appeal uphold rate (what percentage of actions get reversed)

- User retention and session length deltas before/after policy changes

For scale patterns under load and near-real-time behavior, the AWS case materials on Modulate’s deployment provide concrete infrastructure ideas such as event-driven queues, autoscaling GPU workers, and batching strategies; see the AWS Modulate case study (2025). Use those patterns as a reference, but validate your own latency and throughput under synthetic and production traffic.

Operational playbook: rollout and ongoing governance

- Shadow mode first: run for 30 days with no user-facing actions; compare human vs. machine decisions and tune thresholds.

- Controlled launch: start with soft warnings and limited rooms; gate hard actions behind higher confidence.

- Quarterly bias audits: disaggregate by language/accent; publish ranges internally; re-run conformal calibration.

- Red-team refresh: add new slang, dialects, and implicit toxicity cases; inject code-switch and deepfake samples.

- Moderator tooling: fast evidence review with frame-level localization and one-click SOR rendering.

Appendix: selection checklist you can copy

- Business goals clarified: what is unacceptable? What are warn/mute/ban thresholds per context?

- Latency budgets set: per stage targets and SLOs; alerts on P95 breaches.

- ASR chosen for your domain: measured WER on your audio; first-token latency within target.

- Diarization and attribution policy: confidence thresholds and human escalation rules.

- Toxicity model calibrated per language; audio cues integrated as severity modifiers.

- Bias controls in place: conformal rejection, disaggregated metrics, active learning.

- Compliance assets: SOR templates, appeals, retention policy, deletion process.

- Observability: per-stage timing, error budgets, synthetic monitors, red-team suite.

Next steps

- Start with the pipeline and latency targets above; run a two-week shadow pilot on a single surface (e.g., gaming voice chat) and measure P50/P95.

- If you need a production-ready toxicity and policy layer that plugs into your existing ASR/diarization stack, consider requesting a demo from DeepCleer. We can walk your team through sub‑second streaming patterns, calibration methods, and audit‑ready logging.

References called out inline include: GetStream’s 2025 audio moderation overview; AWS’s 2024/2025 Modulate case materials; Open ASR Leaderboard (2025); NVIDIA ASR optimization (2024); AssemblyAI Universal‑1 (2024); MagicNet VAD (2024); SincQDR‑VAD (2025); explainable audio hate speech (2024); EC DSA transparency (2024–2025); EDPB portal; FTC COPPA FAQs (current); TACL conformal prediction (2024); Toloka multilingual labeling guidance (2025); PeerJ CS multimodal hate speech survey (2025).