
Tackling AI Image Risks: A 2025 Guide to Compliance & Automated Detection

Updated on 2025-11-06

In 2025, proactive, automated image detection has shifted from a nice-to-have to a compliance and trust obligation. Enforcement of the EU Digital Services Act (DSA) and the UK Online Safety Act (OSA) now carries material penalties, while explicit deepfakes and AI-generated child sexual abuse material (CSAM) are surging. Platform teams across policy, engineering, and operations must translate these pressures into concrete controls: low-latency screening at upload, evidentiary logging, escalation playbooks, and transparency artifacts that withstand regulator scrutiny.

Under the DSA, the European Commission can impose fines of up to 6% of worldwide annual turnover and has wielded extensive investigative powers since February 2024; it set out its enforcement toolkit in the DSA enforcement framework (Feb 2025). Transparency and statement-of-reasons obligations continue to tighten through 2025, as outlined in the Commission’s September explainer, “Digital Services Act: keeping us safe online” (Sep 2025).

In the UK, the OSA gives Ofcom robust powers: companies can face fines of up to £18 million or 10% of qualifying worldwide revenue, whichever is greater, with duties phasing in across 2025. The government’s official Online Safety Act explainer (Apr 2025) clarifies that Ofcom will issue codes of practice and transparency requirements that platforms must be ready to meet.
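To put the headline caps in perspective, they reduce to simple arithmetic. The sketch below assumes the caps exactly as quoted above; the turnover figures are hypothetical, and actual fines are set case by case, so treat this as an upper-bound illustration rather than a liability estimate.

```python
# Upper bounds on fines using only the headline caps quoted in this article.
# Integer math avoids floating-point rounding on large currency amounts.


def dsa_fine_cap(worldwide_turnover_eur: int) -> int:
    """DSA: up to 6% of worldwide annual turnover."""
    return worldwide_turnover_eur * 6 // 100


def osa_fine_cap(qualifying_revenue_gbp: int) -> int:
    """OSA: up to £18 million or 10% of qualifying worldwide revenue, whichever is greater."""
    return max(18_000_000, qualifying_revenue_gbp * 10 // 100)


if __name__ == "__main__":
    # Hypothetical platform figures, for illustration only.
    print(dsa_fine_cap(2_000_000_000))  # 120000000
    print(osa_fine_cap(50_000_000))     # 18000000 (the fixed amount is greater)
    print(osa_fine_cap(2_000_000_000))  # 200000000
```

For smaller services, the fixed £18 million figure dominates, which is why the OSA cap matters even when revenue is modest.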

Bottom line: regulators expect proactive, systemic risk mitigation, not merely reactive takedowns. For image-based harms, that means automated detection embedded into the product flow, auditable decisions, and timely user-facing notices.
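One way to make automated decisions auditable is a tamper-evident log, where each record stores the hash of the previous one so that any later edit breaks the chain. This is a minimal sketch using only the Python standard library; the record fields (`label`, `action`, `model_version`) are hypothetical, and a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _hash(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class DecisionLog:
    """Append-only moderation log; each entry embeds the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, content_sha256: str, label: str, score: float,
               action: str, model_version: str) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "content_sha256": content_sha256,
            "label": label,
            "score": score,
            "action": action,
            "model_version": model_version,
            "prev_hash": self.entries[-1]["entry_hash"] if self.entries else GENESIS,
        }
        body["entry_hash"] = _hash(body)
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash; False means some entry was altered or reordered."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev_hash"] != prev or entry["entry_hash"] != _hash(body):
                return False
            prev = entry["entry_hash"]
        return True


if __name__ == "__main__":
    log = DecisionLog()
    digest = hashlib.sha256(b"example image bytes").hexdigest()
    log.append(digest, "sexual_content", 0.97, "blocked", "clf-v1")
    log.append(digest, "sexual_content", 0.97, "appeal_upheld", "clf-v1")
    print(log.verify())  # True
    log.entries[0]["score"] = 0.10  # simulate tampering with an old record
    print(log.verify())  # False
```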

Policy has moved because the threat landscape changed. The UK Government announced plans to make creating sexually explicit deepfake images a criminal offense, with potential prison terms, underscoring the urgency of proactive detection—see Government crackdown on explicit deepfakes (Jan 2025).

Credible NGO data shows a sharp rise in synthetic CSAM. In 2024, the Internet Watch Foundation (IWF) confirmed 245 reports of AI-generated child sexual abuse imagery versus 51 in 2023—an approximate 380% increase—and noted that most were realistic enough to be treated like real photographic abuse under UK law, per IWF: new AI CSAM laws announced following campaign (Feb 2025). IWF has repeatedly urged proactive detection obligations within EU law to curb synthetic CSAM proliferation.
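The percentage follows directly from the two IWF report counts:

```latex
\text{increase} = \frac{245 - 51}{51} = \frac{194}{51} \approx 3.80 \;\Rightarrow\; \approx 380\%
```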

For platforms, this means detection must cover not only traditional nudity/sexual content but also generative artifacts, face/body swaps, and signals that indicate synthetic manipulation—paired with rapid escalation for matches involving minors.
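Combining these signals into an escalation decision can be as simple as a few ordered rules. The sketch below is illustrative only: the detector score names, queue names, and thresholds are hypothetical, and the lower threshold for the minor-likelihood signal reflects a deliberate choice to favor recall for the highest-severity harm.

```python
from dataclasses import dataclass
from enum import Enum


class Queue(Enum):
    """Hypothetical review queues, ordered by urgency."""
    CHILD_SAFETY_URGENT = 0  # possible minor involved: immediate specialist review
    SYNTHETIC_SEXUAL = 1     # sexual content with manipulation signals
    STANDARD = 2
    NONE = 3                 # no escalation needed


@dataclass
class Signals:
    """Per-image detector scores in [0, 1]; all field names are hypothetical."""
    sexual: float = 0.0
    minor_likelihood: float = 0.0
    synthetic: float = 0.0   # generative artifacts
    face_swap: float = 0.0   # face/body swap indicators


def route(s: Signals, threshold: float = 0.5, minor_threshold: float = 0.3) -> Queue:
    # Sexual content plus any meaningful minor signal goes straight to the most
    # urgent queue, whether or not the image looks synthetic. The minor threshold
    # is intentionally lower; tune both values on your own labeled data.
    if s.sexual >= threshold and s.minor_likelihood >= minor_threshold:
        return Queue.CHILD_SAFETY_URGENT
    manipulated = max(s.synthetic, s.face_swap) >= threshold
    if s.sexual >= threshold and manipulated:
        return Queue.SYNTHETIC_SEXUAL
    if s.sexual >= threshold or manipulated:
        return Queue.STANDARD
    return Queue.NONE


if __name__ == "__main__":
    print(route(Signals(sexual=0.9, minor_likelihood=0.4)))  # Queue.CHILD_SAFETY_URGENT
    print(route(Signals(sexual=0.9, synthetic=0.8)))         # Queue.SYNTHETIC_SEXUAL
```

Checking the minor rule first ensures a synthetic-looking image never lands in a lower-priority queue than a photographic one.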

To operationalize compliance, treat “content risk control” as a cross-functional program that translates legal duties into detection, decisioning, and documentation. If your team needs a primer on terminology and scope, see What is Content Risk Control?

Core controls to implement now:

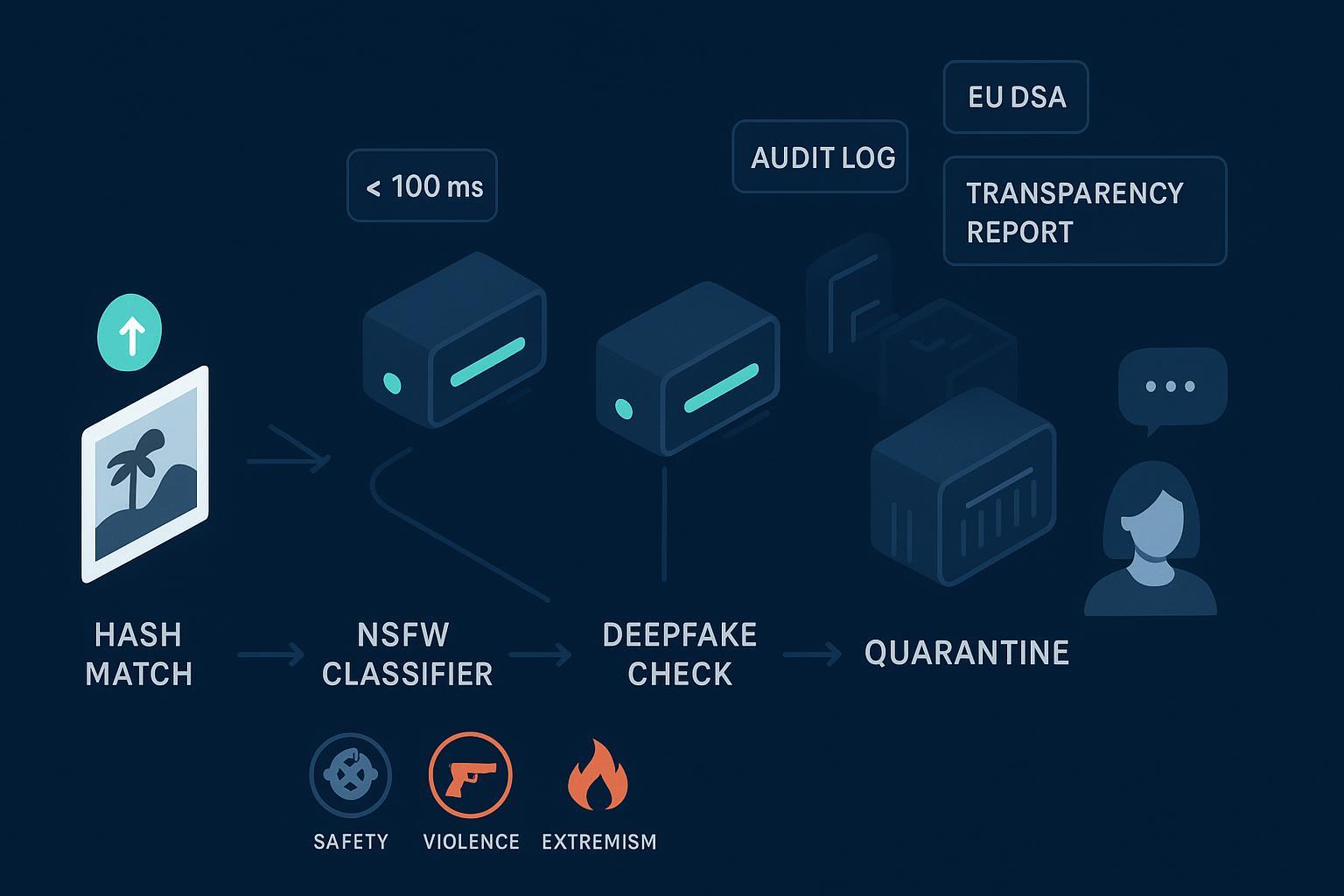

A robust pipeline typically follows staged detection and escalation:

For multi-modal coordination and label hierarchies, review a practical outline in Generative AI Moderation Solution.
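The staged detection-and-escalation pattern can be sketched as a cascade: a cheap hash lookup first, a classifier second, and score thresholds that route uncertain cases to human review. This is a minimal sketch under stated assumptions: `classify` stands in for whatever model or vendor API you use, the thresholds are hypothetical, and exact SHA-256 matching only catches byte-identical files, whereas production hash matching typically relies on perceptual hashes such as PhotoDNA or PDQ that survive resizing and re-encoding.

```python
import hashlib
from typing import Callable

# Hypothetical thresholds; calibrate them on your own labeled data.
BLOCK_AT = 0.95
REVIEW_AT = 0.60


def staged_decision(image_bytes: bytes,
                    known_bad_sha256: set[str],
                    classify: Callable[[bytes], float]) -> str:
    """Return 'block', 'human_review', or 'allow' for one image.

    Stage 1: exact hash match against a known-bad list (cheap, no model call).
    Stage 2: classifier score from a stand-in model or API.
    Stage 3: thresholds separate confident blocks from cases needing a human.
    """
    if hashlib.sha256(image_bytes).hexdigest() in known_bad_sha256:
        return "block"
    score = classify(image_bytes)
    if score >= BLOCK_AT:
        return "block"
    if score >= REVIEW_AT:
        return "human_review"
    return "allow"


if __name__ == "__main__":
    bad_list = {hashlib.sha256(b"known bad file").hexdigest()}
    print(staged_decision(b"known bad file", bad_list, lambda b: 0.0))  # block
    print(staged_decision(b"new upload", bad_list, lambda b: 0.7))      # human_review
```

Running the hash check first keeps model calls (and their latency and cost) off the path for content that is already known.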

Caution on benchmarks: peer-reviewed work reports high accuracy for pornographic-content classification under lab conditions (one 2024 study reported ~97% accuracy), but benchmark datasets rarely reflect the diversity of content seen at platform scale. Treat published metrics as directional, and validate them on your own real-world content.
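Accuracy alone can mislead when violating content is rare: on a sample where 1% of images violate policy, a model that flags nothing is still 99% accurate. A minimal sketch for checking precision and recall on your own human-labeled sample (the sample below is made up for illustration):

```python
def evaluate(labels: list[bool], scores: list[float], threshold: float) -> dict[str, float]:
    """Accuracy, precision, and recall of 'score >= threshold' against ground truth.

    labels[i] is True if item i actually violates policy, per your own human review.
    """
    tp = fp = fn = tn = 0
    for is_violating, score in zip(labels, scores):
        predicted = score >= threshold
        if predicted and is_violating:
            tp += 1
        elif predicted:
            fp += 1
        elif is_violating:
            fn += 1
        else:
            tn += 1
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


if __name__ == "__main__":
    labels = [True] * 10 + [False] * 990  # 1% prevalence
    scores = [0.0] * 1000                 # a "model" that never flags anything
    print(evaluate(labels, scores, threshold=0.5))
    # {'accuracy': 0.99, 'precision': 0.0, 'recall': 0.0}
```

In practice, compute these per content slice (for example by region, format, or upload source) as well as overall, since a strong aggregate can hide weak spots.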

Here’s how an upload-to-delivery image flow can work in practice:

Tools like DeepCleer can be integrated for multi-modal detection within such pipelines. Disclosure: DeepCleer is our product.
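Low-latency screening at upload needs an explicit answer to "what if detection is slow?" The sketch below enforces a latency budget with `asyncio.wait_for`; the 300 ms budget, the `detect` coroutine, and the status strings are hypothetical. Whether to fail open (publish) or fail closed (hold) on timeout is a policy decision; this sketch holds unscreened content for asynchronous review.

```python
import asyncio
from typing import Awaitable, Callable

LATENCY_BUDGET_S = 0.3  # hypothetical upload-time budget


async def handle_upload(image_bytes: bytes,
                        detect: Callable[[bytes], Awaitable[float]],
                        block_at: float = 0.9) -> str:
    """Screen an upload before delivery; returns 'published', 'rejected', or 'held'."""
    try:
        score = await asyncio.wait_for(detect(image_bytes), timeout=LATENCY_BUDGET_S)
    except asyncio.TimeoutError:
        # Fail closed: do not deliver unscreened content; queue it for async review.
        return "held"
    return "rejected" if score >= block_at else "published"


async def fast_detector(data: bytes) -> float:
    await asyncio.sleep(0.01)  # stand-in for a quick model call
    return 0.1


async def slow_detector(data: bytes) -> float:
    await asyncio.sleep(1.0)  # stand-in for an overloaded model call
    return 0.1


if __name__ == "__main__":
    print(asyncio.run(handle_upload(b"img", fast_detector)))  # published
    print(asyncio.run(handle_upload(b"img", slow_detector)))  # held
```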

Define and track:

Governance practices:

DSA requires providers to issue statements of reasons and maintain transparency reporting that regulators can examine. The Commission’s 2025 explainer highlights ongoing obligations—see Digital Services Act: keeping us safe online. Prepare:
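One artifact worth preparing is a structured statement-of-reasons record. DSA Article 17(3) lists what a statement of reasons must contain: the restriction imposed (with territorial scope and duration), the facts and circumstances relied on, information on the use of automated means, the legal or contractual ground, and the available redress options. The sketch below mirrors those elements; the field names and restriction types are a hypothetical, illustrative subset, not the schema of any official database or API.

```python
import json
from dataclasses import asdict, dataclass
from enum import Enum
from typing import Optional


class Restriction(Enum):
    """Illustrative subset of restriction types."""
    REMOVAL = "removal"
    DISABLED_ACCESS = "disabled_access"
    DEMOTION = "demotion"
    ACCOUNT_SUSPENSION = "account_suspension"


@dataclass
class StatementOfReasons:
    """Hypothetical record mirroring the elements of DSA Art. 17(3)."""
    content_id: str
    restriction: Restriction
    territorial_scope: str            # e.g. "EU" or specific member states
    duration: str                     # e.g. "permanent"
    facts_and_circumstances: str
    taken_following_notice: bool
    automated_detection: bool         # was the content detected by automated means?
    automated_decision: bool          # was the decision itself automated?
    legal_ground: Optional[str]       # if the content is allegedly illegal
    contractual_ground: Optional[str] # if it breaches terms of service
    redress_options: list[str]

    def validate(self) -> None:
        if not (self.legal_ground or self.contractual_ground):
            raise ValueError("cite a legal or contractual ground")
        if not self.redress_options:
            raise ValueError("list at least one redress option")

    def to_json(self) -> str:
        self.validate()
        data = asdict(self)
        data["restriction"] = self.restriction.value  # Enum is not JSON-serializable
        return json.dumps(data, sort_keys=True)


if __name__ == "__main__":
    sor = StatementOfReasons(
        content_id="img-123", restriction=Restriction.REMOVAL,
        territorial_scope="EU", duration="permanent",
        facts_and_circumstances="Flagged as synthetic sexual imagery; confirmed by human review.",
        taken_following_notice=False, automated_detection=True, automated_decision=False,
        legal_ground=None, contractual_ground="Community Guidelines: synthetic sexual content",
        redress_options=["internal complaint-handling", "out-of-court dispute settlement",
                         "judicial redress"],
    )
    print(sor.to_json())
```

Validating at serialization time means an incomplete notice fails loudly inside your pipeline rather than reaching the user or a regulator.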

When evaluating automated image detection solutions:

If you want to explore a hands-on workflow, try our Online Content Risk Detection Demo. And for foundational reading, dive into our blog hub.

Legal note: This article provides operational guidance, not legal advice. Enforcement is evolving; consult counsel for jurisdiction-specific requirements.