
Best Practices for Content Moderation Buying Decisions

Effective content moderation buying decisions protect your platform from harmful content and reputational damage. When you rely only on automated content moderation, you may miss the subtle cues that only human moderators can catch. A structured approach gives you scalability, real-time support, and fairness for all users. With clear guidelines and ongoing training, your team can enforce policies and maintain user trust.

You build trust and safety on your platform by setting clear community guidelines and enforcing them with strong content moderation. When you manage user-generated content well, you protect users from harmful material and misinformation. During the COVID-19 pandemic, unchecked false information spread quickly and hurt public health efforts. You can avoid similar risks by combining automated tools with human review. This approach helps you catch subtle threats like child grooming or extortion that automated systems might miss. When you use both technology and human oversight, you create a fair and safe space for everyone. Transparent policies and regular updates to your content moderation policy show users that you care about their well-being. This commitment improves trust and safety metrics and keeps your community strong.

Your business reputation depends on how you handle user-generated content. Strong content moderation filters out offensive material, abusive comments, and fake reviews. This protects your brand and keeps your platform professional. The table below shows how different industries benefit from effective moderation:

| Aspect | Example | Impact on Reputation |
|---|---|---|
| Brand Protection | Filters inappropriate content | Safeguards brand image |
| Gaming Platforms | Pre-moderates chats | Ensures user safety and positive perception |
| Marketplaces | Monitors reviews | Protects product and brand reputation |
| Knowledge Platforms | Verifies articles | Builds trust in platform accuracy |
| Consumer Trust | Moderates UGC and reviews | Increases conversion rates and user loyalty |

When you use a hybrid approach, combining AI and manual review, you improve detection and maintain a positive online presence. Clear community guidelines help users understand what is acceptable, which further strengthens your reputation.

You face serious legal and compliance risks if you ignore content moderation. Many countries have strict laws about harmful or illegal content. For example, Germany's Network Enforcement Act (NetzDG) requires large platforms to remove manifestly illegal posts within 24 hours of a complaint. If you fail to act, you risk fines, lawsuits, and government scrutiny.

You must update your content moderation policy regularly to keep up with changing laws and protect your business. By investing in robust trust and safety practices, you avoid costly legal problems and maintain user confidence.

You must first understand what types of user-generated content appear on your platform. Each type brings unique risks and challenges. For example, real-time unmoderated content can broadcast offensive language or threats during live events. Two-way interactions, such as those on dating apps or gig economy platforms, often involve hate speech, nudity, or violence. Social media moderation faces issues like fake news, harassment, and inappropriate posts. Online marketplaces deal with fake reviews and misleading information. Gaming platforms see harassment and grooming in chat features. Educational platforms must prevent distractions and disrespectful content to maintain a positive learning environment. Content-sharing platforms, including those with AI-generated content, face copyright infringement and explicit material.

| Type of User-Generated Content | Moderation Challenges and Risks |
|---|---|
| Real-time unmoderated content | Offensive content, misalignment with brand values, risk of broadcasting profanity or threats during live events |
| Two-way interactions (e.g., dating apps, gig economy platforms) | Hate speech, nudity, drug use, violence, offensive photos or messages |
| Social media posts | Hate speech, fake news, harassment, inappropriate or low-quality content |
| Online marketplaces | Fake reviews, offensive language, misleading or commercially sensitive information |
| Discussion forums and chat platforms | Spam, trolling, disrespectful or off-topic discussions |
| Gaming platforms | In-game harassment, grooming, inappropriate content in chats or forums |
| Educational platforms | Distraction, disrespectful or irrelevant content, maintaining positive learning environment |
| Content-sharing platforms (e.g., YouTube, TikTok) | Copyright infringement, explicit content, community guideline violations |

Users often try to bypass moderation processes by using creative misspellings, character swaps, or splitting words with spaces. These tactics make content moderation more complex and require both advanced filters and human oversight to maintain content quality.
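To make those evasion tactics concrete, here is a minimal Python sketch of a text normalizer that undoes misspellings, character swaps, and split words before a plain word filter runs. The swap map, the blocklist, and the function names are all hypothetical placeholders, not part of any real moderation product.

```python
import re
import unicodedata

# Hypothetical swap map and blocklist, for illustration only.
SWAPS = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a",
                       "5": "s", "7": "t", "@": "a", "$": "s"})
BLOCKLIST = {"scam", "spam"}

# A run of single letters split by spaces or punctuation, e.g. "s p a m" or "s.p.a.m".
SPLIT_WORD = re.compile(r"\b(?:[a-z][\s.\-_*]+){2,}[a-z]\b")


def normalize(text: str) -> str:
    """Undo common evasion tricks so a plain word filter can match again."""
    # Strip accents: "spám" -> "spam".
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    # Lowercase and reverse character swaps: "5c4m" -> "scam".
    text = text.lower().translate(SWAPS)
    # Collapse letters repeated three or more times: "spaaaam" -> "spam".
    text = re.sub(r"([a-z])\1{2,}", r"\1", text)
    # Rejoin words that were split into single letters: "s p a m" -> "spam".
    return SPLIT_WORD.sub(lambda m: re.sub(r"[^a-z]", "", m.group()), text)


def find_blocked_terms(text: str) -> set:
    """Return the blocklisted words found in the normalized text."""
    tokens = set(re.findall(r"[a-z]+", normalize(text)))
    return tokens & BLOCKLIST
```

A filter like this only matches whole words, so "this camera" does not trip the "scam" rule. It still misses plenty of tricks, which is why you would typically send its hits to human reviewers or a context-aware model rather than block automatically.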

You need clear goals for your content moderation strategy. Start by identifying how moderation impacts users and external stakeholders. Set objectives that guide your policy and process design. For example, you may want to improve user experience, reduce harmful content, or increase trust in your platform.

Tip: Define key metrics such as processing rates, accuracy of human reviews, and the balance between automated and manual checks. These metrics help you measure success and adjust your approach.

Organizations often use these goals to select the right tools and workflows. Strategic metrics answer questions about user reports and policy violations. Operational metrics focus on throughput, accuracy, and scalability. By aligning your goals with these measures, you ensure your moderation processes remain effective and responsive. This approach helps you avoid quick fixes and supports continuous improvement in content moderation.
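As a rough illustration of the operational metrics mentioned above, the Python sketch below computes throughput, the share of automated decisions, and human review accuracy from a decision log. The `Decision` record and its field names are assumptions made for this example; your own logs will look different.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Decision:
    handled_by: str              # "ai" or "human" (hypothetical labels)
    action: str                  # "remove" or "keep"
    audit_action: Optional[str]  # what a QA audit concluded, if the item was audited


def moderation_metrics(decisions: List[Decision], window_hours: float) -> dict:
    """Summarize throughput, automation share, and audited human accuracy."""
    if not decisions or window_hours <= 0:
        raise ValueError("need at least one decision and a positive time window")
    human = [d for d in decisions if d.handled_by == "human"]
    audited = [d for d in human if d.audit_action is not None]
    correct = sum(d.action == d.audit_action for d in audited)
    return {
        "items_per_hour": len(decisions) / window_hours,
        "automation_rate": 1 - len(human) / len(decisions),
        "human_review_accuracy": correct / len(audited) if audited else None,
    }


# Made-up sample log covering a two-hour window.
log = [
    Decision("ai", "remove", None),
    Decision("ai", "keep", None),
    Decision("ai", "keep", None),
    Decision("ai", "keep", None),
    Decision("human", "remove", "remove"),
    Decision("human", "keep", "remove"),
    Decision("human", "remove", "remove"),
    Decision("human", "keep", "keep"),
]
print(moderation_metrics(log, window_hours=2))
```

Tracking these numbers over time, rather than as a one-off snapshot, is what lets you see whether a new tool or workflow actually moved them.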

Choosing between building your own content moderation solutions and buying an off-the-shelf platform shapes your platform’s future. You must weigh control, speed, cost, and scalability before making a decision.

Building a custom solution gives you full control over features and workflows. You can design the system to fit your exact needs and scale it as your platform grows. This approach often leads to a more intuitive experience for your moderators, reducing training time and improving efficiency. You also gain a competitive edge by offering unique features that set your platform apart.

However, custom development comes with challenges. You face a high initial investment and a longer timeline—sometimes up to ten months—before you can launch. Small businesses may find the complexity overwhelming. You must also commit to ongoing maintenance and updates, which require dedicated resources.

Tip: Custom solutions work best for large platforms with unique requirements or high content volumes.

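Pros of Custom Development:

- Full control over features and workflows
- A system tailored to your exact needs that scales with your platform
- A more intuitive moderator experience, with less training time
- Unique features that set your platform apart

Cons of Custom Development:

- High initial investment
- Longer timeline, sometimes up to ten months
- Complexity that can overwhelm small businesses
- Ongoing maintenance and updates that require dedicated resources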

Off-the-shelf platforms offer a fast and cost-effective way to deploy content moderation. You can start using these solutions within weeks, not months. Many platforms support multiple data types, such as text, images, video, and audio. They often include no-code workflow automation, compliance tools, and integration with third-party services. You benefit from proven reliability, strong community support, and regular updates.

Despite these advantages, off-the-shelf platforms may not fit your unique needs perfectly. Customization options are often limited. You may face ongoing subscription fees and depend on the vendor for updates and support. Integration with your existing systems can sometimes be challenging.

| Aspect | Custom Content Moderation Solutions | Off-the-Shelf Platforms |
|---|---|---|
| Advantages | Tailored to specific needs, scalable, unique features, full control | Quick deployment, lower upfront cost, proven reliability, strong support |
| Disadvantages | High initial cost, long development, ongoing maintenance | Generic fit, limited customization, recurring fees, vendor dependence |

Common Features in Off-the-Shelf Platforms:

Many trust and safety leaders praise these platforms for their efficiency, ease of use, and support for moderator well-being.

Cost and scalability play a major role in your decision. Off-the-shelf solutions have lower initial costs (around $15,000) and can be deployed in three to four weeks. However, monthly costs rise as your usage grows, with fees scaling linearly based on the number of queries or users.

Custom-built solutions require a much higher upfront investment (often $150,000 to $250,000) and take longer to develop. Once deployed, monthly costs remain stable, even as your platform grows. This makes custom solutions more cost-effective for high-volume or complex needs, especially after the two-year mark.
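To see how the break-even point depends on your own numbers, you can run a quick calculation like the Python sketch below. The two upfront figures come from the paragraphs above. The usage price, content volume, growth, and custom running cost are made-up assumptions, so swap in your own quotes before drawing conclusions.

```python
from typing import Optional

OFF_SHELF_UPFRONT = 15_000   # from the article
CUSTOM_UPFRONT = 150_000     # low end of the article's range

# Everything below is a made-up assumption for illustration.
PRICE_PER_1K_ITEMS = 6       # off-the-shelf usage fee in USD
START_VOLUME = 1_000_000     # items moderated in month 1
VOLUME_GROWTH = 50_000       # extra items added each month
CUSTOM_MONTHLY = 5_000       # flat hosting and maintenance in USD


def off_shelf_fee(month: int) -> int:
    """Usage-based fee for a given month (1-based), scaling linearly with volume."""
    volume = START_VOLUME + VOLUME_GROWTH * (month - 1)
    return volume // 1000 * PRICE_PER_1K_ITEMS


def break_even_month(horizon: int = 60) -> Optional[int]:
    """First month where the custom build's cumulative cost is no higher, or None."""
    off_shelf_total = OFF_SHELF_UPFRONT
    custom_total = CUSTOM_UPFRONT
    for month in range(1, horizon + 1):
        off_shelf_total += off_shelf_fee(month)
        custom_total += CUSTOM_MONTHLY
        if custom_total <= off_shelf_total:
            return month
    return None


print(break_even_month())
```

With these illustrative inputs the custom build catches up in month 28, a little past the two-year mark. A slower growth rate or a higher custom running cost pushes that point out, and the function returns `None` if it never arrives within the horizon.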

| Criteria | Custom-Built Solutions | Off-the-Shelf Solutions |
|---|---|---|
| Initial Cost | High upfront investment | Moderate initial cost, often a one-time fee |
| Long-Term Cost | Lower over time, no recurring fees | Recurring subscription and add-on fees |
| Scalability | Highly scalable, designed for growth | Limited scalability, may require costly upgrades |
| Flexibility | High control over updates and maintenance | Limited control, vendor-dependent |

As your platform grows, you must also consider costs for technology upgrades, compliance, employee training, and customization. Investing in AI and hybrid moderation models can reduce human costs by up to 30% and improve efficiency by 15-20%. Operational streamlining and automation help maintain profitability as content volume increases.

Note: If your platform expects rapid growth or has complex moderation needs, a custom solution may offer better long-term value. For smaller platforms or those needing quick deployment, off-the-shelf platforms provide a practical starting point.

You must understand that content moderation laws differ across regions. Each country sets its own rules for online platforms. For example, the UK's Online Safety Act requires you to remove harmful content quickly, especially to protect minors. The law can also require you to scan encrypted messages, which raises privacy concerns. In the European Union, the Digital Services Act (DSA) sets strict rules. You must provide clear ways for users to report illegal content and explain your moderation decisions. The DSA also requires you to handle complaints and to deal carefully with reports submitted in bad faith.

Many countries in Latin America, such as Brazil and Colombia, have their own rules. Brazil's proposed Fake News Law (Bill 2630/2020) would ask you to keep records of mass forwarded messages and publish transparency reports. Colombia's Law 1450 protects freedom of expression and net neutrality. Courts in Colombia may hold you responsible for defamatory content if you do not have proper moderation procedures.

Here is a summary of key regional frameworks:

| Regional Law / Framework | Key Content Moderation Requirements and Provisions |
|---|---|
| EU Digital Services Act (DSA) | Clear reporting channels, statements for moderation actions, complaint mechanisms, careful handling of bad-faith reports |
| UK Online Safety Act (OSA) | Protects minors, requires removal of harmful and illegal content, extra platform responsibilities |
| EU AI Act | Human oversight and transparency in AI moderation systems |
| Brazil's Fake News Law | Transparency, record-keeping, user rights to contest moderation, accountability |
| Colombia's Law 1450 | Freedom of expression, net neutrality, limits on ISP censorship, liability for lack of moderation |
| Australia and ASEAN | Transparency and user rights in content moderation |

You need to stay updated on these laws to avoid fines and legal trouble.

Data privacy laws shape how you collect, store, and process user information during moderation. The European Union's DSA requires you to remove illegal content quickly and publish transparency reports. You must also report certain types of harmful content, such as child sexual abuse material, to special agencies. California's Assembly Bill 587 makes you disclose your moderation policies to the public. In Germany and Austria, you must remove manifestly illegal hate speech and violent content within 24 hours of a report.

The General Data Protection Regulation (GDPR) increases your responsibility for data privacy and security. This law can raise your compliance costs, especially if you run a small business. In the United States, Section 230 of the Communications Decency Act generally shields you from liability for most user-generated content. However, changes to this law could force you to change your moderation approach.

Always choose moderation solutions that help you meet these privacy and reporting requirements. This keeps your platform safe and builds trust with your users.

Modern platforms rely on a mix of content moderation tools to keep communities safe and compliant. You need to understand the strengths and weaknesses of each approach to choose the right solution for your needs. The main types of content moderation include AI automation, human review, and hybrid models. Each method plays a unique role in protecting your platform and users.

Automated content moderation uses advanced algorithms and machine learning to scan and filter user-generated content at scale. You can process thousands of posts, images, or videos every minute with these systems. AI moderation tools excel at detecting clear policy violations, such as explicit language, spam, or graphic imagery. They deliver fast and consistent results, which helps you manage high volumes of content efficiently.

However, automated content moderation has important limitations. AI models often struggle with context and nuance. For example, OpenAI’s GPT-4 shows high accuracy in many moderation tasks, but studies reveal that AI models like GPT-3.5 produce more false negatives than false positives. This means the system may miss harmful content, especially in niche communities or when users use creative workarounds. AI tools also face challenges with language disparity, performing better in high-resource languages and less accurately in others. You may see over-enforcement, such as the removal of educational images, or under-enforcement, where harmful content slips through.

Note: Automated content moderation can amplify human errors and biases found in training data. This can disproportionately affect marginalized groups and lead to wrongful removals.

You should also consider transparency. Many AI moderation systems do not explain why they remove content, making it hard for users to understand or appeal decisions. To maintain trust, you need to audit these systems regularly and ensure they align with your platform’s values.

| Aspect | Human Moderators | AI Moderators |
|---|---|---|
| Context understanding | High | Low |
| Nuance detection | Excellent | Poor |
| Consistency | Variable | High |
| Speed | Slower | Very fast |
| Accuracy | Better with nuanced cases | Good with clear violations |

Automated content moderation works best for routine, high-volume filtering. You can use it to block obvious violations quickly, but you should not rely on it alone for complex or sensitive cases.

Human content moderation remains essential for handling nuanced or context-dependent violations. You benefit from human moderators’ ethical reasoning and common-sense knowledge. They can interpret tone, intent, and cultural context, which AI often misses. When you face ambiguous or high-risk content, human review provides the judgment needed to make fair decisions.

Early automated systems based on rules or keyword matching could not handle the complexity of human language. Even with advances in natural language processing, real-world evaluation shows that human oversight is still necessary. You can use human moderators to review flagged content, provide feedback to AI systems, and help refine automated processes.

You should design your content moderation tools to support human reviewers. Features like content blurring, tiered review systems, and task rotation help reduce fatigue and improve accuracy. Diverse review teams also help identify biases and blind spots, making your moderation process more fair and inclusive.

Tip: Strategic use of human review—such as risk-based routing or confidence thresholds—ensures that you focus human effort where it matters most.

While human content moderation offers high accuracy for complex cases, it can be slow and costly to scale. You need to balance quality with efficiency by integrating human review where it adds the most value.

Hybrid models combine the speed of automated content moderation with the nuanced judgment of human moderators. You can use AI to filter routine content and flag uncertain or high-risk cases for human review. This approach gives you the best of both worlds: fast processing for most content and careful oversight for sensitive material.

Recent advances in AI moderation have improved accuracy and customization. Still, you need human input for policy development, regulatory compliance, and ethical oversight. Hybrid models let you leverage AI’s technical strengths while ensuring fairness and safety through human judgment.

Callout: Hybrid content moderation models optimize productivity and quality, balancing efficiency with ethical oversight.

You should design your hybrid workflow to route content based on risk, confidence, or policy complexity. This ensures that you use your resources wisely and maintain a safe, trustworthy platform.
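A routing rule like that can be sketched in a few lines of Python. The thresholds, the category names, and the `Classification` record below are assumptions chosen for illustration. In practice you would tune them against your own policies and pilot data.

```python
from dataclasses import dataclass

# Hypothetical categories that always deserve a human look when flagged.
HIGH_RISK_CATEGORIES = {"child_safety", "extortion"}


@dataclass
class Classification:
    category: str           # label assigned by the AI model
    violation_score: float  # model's estimated probability of a violation, 0 to 1


def route(result: Classification,
          remove_above: float = 0.95,
          allow_below: float = 0.05) -> str:
    """Decide whether AI acts alone or a human reviews the item."""
    # Confidently clean content is published without review.
    if result.violation_score <= allow_below:
        return "auto_allow"
    # Risk-based routing: sensitive categories go to people, however confident the model is.
    if result.category in HIGH_RISK_CATEGORIES:
        return "human_review"
    # Confident, routine violations are removed automatically.
    if result.violation_score >= remove_above:
        return "auto_remove"
    # Everything in the uncertain middle band is escalated.
    return "human_review"


print(route(Classification("spam", 0.99)))       # auto_remove
print(route(Classification("spam", 0.50)))       # human_review
print(route(Classification("extortion", 0.99)))  # human_review
```

Widening the gap between the two thresholds sends more content to your reviewers and raises cost. Narrowing it saves reviewer time but lets more AI mistakes through, so the gap is worth revisiting whenever your accuracy metrics shift.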

You need content moderation software that delivers real-time moderation to keep your platform safe and responsive. Real-time filtering lets you flag or block harmful content within milliseconds, often before users even see it. This speed is essential for livestreaming, chat, and short-form video. Modern systems use modular AI pipelines, stacking models like CNNs for quick checks and vision transformers for deeper analysis. You can also use OCR to scan text in images. These tools help you catch threats fast and reduce the risk of harmful content spreading.

Tip: Intelligent queue management and threshold tuning help you balance speed and accuracy. You can set strict latency targets and use circuit breakers to keep your system stable during traffic spikes.

Hybrid architectures route urgent content for instant review while sending less risky material for deeper checks. This approach ensures your real-time moderation remains both fast and reliable, even as your platform grows.
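The circuit breaker and latency target mentioned in the tip above can be sketched as follows. This is a simplified Python illustration, not a production pattern. The class, the `moderate` helper, the fallback verdict, and the default limits are all assumptions, and the sketch only measures latency after the call rather than enforcing a hard timeout.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Stops calling a struggling classifier for a cooldown period."""

    def __init__(self, max_failures: int = 3, cooldown_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow_call(self) -> bool:
        if self.opened_at is None:
            return True
        if self.clock() - self.opened_at >= self.cooldown_s:
            # Cooldown elapsed: close the breaker and try the classifier again.
            self.opened_at = None
            self.failures = 0
            return True
        return False

    def record(self, success: bool) -> None:
        if success:
            self.failures = 0
            return
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = self.clock()  # open the breaker


def moderate(item, classify: Callable, breaker: CircuitBreaker,
             latency_budget_s: float = 0.2) -> str:
    """Classify an item, falling back to a review queue when the breaker is open."""
    if not breaker.allow_call():
        return "hold_for_review"
    start = breaker.clock()
    try:
        verdict = classify(item)
    except Exception:
        breaker.record(success=False)
        return "hold_for_review"
    # A reply that misses the latency target counts as a failure for the breaker.
    breaker.record(success=(breaker.clock() - start) <= latency_budget_s)
    return verdict
```

Holding content for review while the breaker is open is only one possible fallback. For a livestream chat you might prefer a cheaper backup filter so messages keep flowing during a traffic spike.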

Your users share more than just text. They upload images, videos, audio, and files. The best content moderation software supports all these content types. You need tools that can scan for profanity, hate speech, harassment, threats, and spam across every format. AI-powered filters and customizable rule engines let you tailor your moderation to your platform’s needs. Contextual understanding through AI improves accuracy, especially when users try to bypass filters with creative tricks.

Here are the most requested features in content moderation software:

You can use these features to handle over 50 moderation categories, from copyright issues to cultural context.

You want content moderation software that fits smoothly into your existing systems. Many platforms face challenges with integration, such as limited API functionality or legacy architectures. Complex implementation can require advanced technical skills. You may also see performance issues during high-traffic periods if your software does not scale well.

Note: Choose solutions that connect easily with your AI services, analytics, user databases, and security tools. This will help you maintain reliability and adapt to new business needs.

Seamless integration ensures your moderation tools work with your business systems, making your workflow more efficient and your platform safer. When you select software with strong integration capabilities, you avoid common barriers and keep your moderation process running smoothly.

When you evaluate content moderation vendors, you should look for a proven track record. Vendors with experience in your industry understand the unique risks of user-generated content. They know how to protect your brand reputation and keep your platform safe. A strong moderation partner brings legal knowledge and stays updated on changing regulations. You benefit from their expertise in handling complex content and their ability to respond quickly to new trends. Reliable vendors offer ongoing support, clear communication, and training for your team. They also provide frameworks and scorecards to help you measure accuracy and consistency. This approach ensures your moderation aligns with your company values and supports responsible growth.

Tip: Always ask for case studies or references from similar projects to verify a vendor’s experience.

Your platform may grow quickly, so you need a vendor who can scale with you. Scalability means the vendor can handle more user-generated content as your audience expands. Look for clear service agreements that define turnaround times, accuracy rates, and data security. The best vendors adapt to new content trends and policy updates. They support multiple content types and languages, making it easier to serve a global audience. Seamless integration with your existing systems is also important. Use the table below to compare capacity models:

| Capacity Model | Description & Scalability Considerations |
|---|---|
| External Vendors | Manage long-term, scaled review with defined SOPs; easy to scale; vendor manages employees and operations. |
| In-house Contractors | Good for temporary or changing workflows; medium control; suitable for testing before scaling. |
| Employees | Experts handle high-risk content; full control; limited scalability for smaller teams. |
| Volunteer Moderators | Volunteers moderate content; low cost; risk of inconsistent decisions and volunteer burnout. |

Outsourcing content moderation offers many benefits. You gain access to advanced technology, specialized expertise, and flexible team sizes. Outsourcing helps you manage large volumes of user-generated content and reduces the workload for your internal team. It can also save costs by avoiding hiring and training expenses. However, outsourcing comes with challenges. You may face quality and consistency issues, especially if the vendor does not fully understand your brand guidelines. Data privacy and security risks increase when third parties handle sensitive information. Communication barriers, cultural differences, and time zones can also affect results. To succeed, you need transparent standards, regular training, and clear communication with your vendor.

| Pros of Outsourcing Content Moderation | Cons of Outsourcing Content Moderation |
|---|---|
| Cost savings and efficiency | Less control over quality |
| Scalability for high content volumes | Data privacy and security risks |
| Access to expertise and technology | Cultural misunderstandings |
| Reduced internal workload | Training and onboarding challenges |
| Wellness programs for moderators | Ethical concerns about well-being |

Note: Conduct a pilot project before making a long-term commitment. This helps you test the vendor’s capabilities and ensures they align with your goals.

Transparency builds trust between you and your users. When you explain moderation actions and give users a voice, you create a safer and more respectful community. Clear communication about your rules and decisions helps users understand what is expected and why certain actions happen.

You need reporting tools that are easy to find and simple to use. These tools let users flag harmful or inappropriate content quickly. When you provide clear guidelines and regular updates about your moderation policies, you help users understand the rules. Publishing transparency reports and notifying users about moderation actions also increases trust.

| Aspect | Explanation |
|---|---|
| Clear policy communication | Regular updates and clear communication of moderation policies help users understand rules, fostering transparency and trust. |
| Transparency reports | Publishing reports on moderation actions and government requests demystifies the process and reinforces user trust. |
| Moderation action notifications | Informing users about moderation decisions and reasons educates users and maintains transparency. |
| Appeals process | Providing a clear appeals process demonstrates fairness and empowers users, enhancing trust. |

When you combine automated tools with human oversight, you create a content review process that is both efficient and fair. Users value transparency about moderation decisions and want to know how both AI and humans are involved.

You can also encourage users to report issues by making the process accessible and user-friendly. When users feel involved, they help maintain community standards and reduce accidental violations.

A fair appeal process gives users a chance to contest moderation decisions. You should make your appeals policy easy to find and simple to understand. False positives can happen, so you need a system that lets users explain their side. This approach shows that you value fairness and accountability.

When you explain the reasons behind your moderation rules and actions, you help users learn and avoid future violations. This practice strengthens trust and supports a positive platform culture.

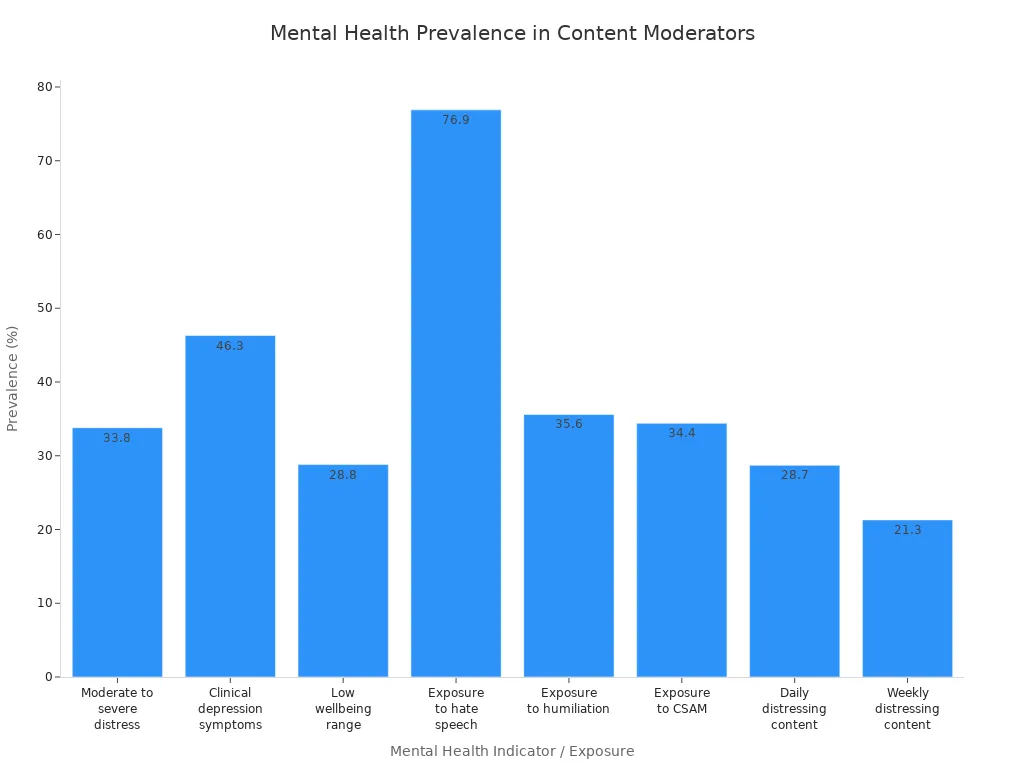

You face unique mental health challenges as a content moderator. The work often exposes you to distressing and traumatic material. Recent research shows that over half of professional content moderators score high on depression scales, a rate much higher than in other high-risk jobs like policing. You may experience anxiety, burnout, or even symptoms of PTSD. These issues often happen together, making your job even harder.

Mental Health Prevalence in Content Moderators

The table below highlights the mental health risks you might encounter:

| Mental Health Indicator | Prevalence / Statistic |
|---|---|
| Moderate to severe psychological distress | 33.8% of content moderators |
| Clinical level depression symptoms | 46.3% scored 13 or greater on CORE-10 |
| Low wellbeing range | 28.8% of participants |
| Exposure to hate speech | 76.9% reported exposure |
| Exposure to humiliation | 35.6% reported exposure |
| Exposure to child sexual abuse material | 34.4% reported exposure |
| Daily exposure to distressing content | 28.7% of moderators |
| Weekly exposure to distressing content | 21.3% of moderators |

You need support systems, such as regular counseling, wellness programs, and clear time-off policies. These resources help you manage stress and reduce the risk of long-term harm.

A strong organizational culture shapes your experience as a moderator. When you work in a positive culture, you feel valued and supported. This environment helps you stay engaged and reduces turnover. Leadership plays a key role by setting clear values and expectations.

You thrive when your values align with your organization’s culture. A supportive environment boosts your engagement, productivity, and well-being.

You need a clear way to compare content moderation solutions. Scorecards help you do this. They let you rate each option based on important factors. You can use a table or checklist to keep your evaluation organized.

| Criteria | Weight | Vendor A | Vendor B | Vendor C |
|---|---|---|---|---|
| Accuracy | 30% | 8 | 9 | 7 |
| Scalability | 20% | 7 | 8 | 6 |
| Integration | 15% | 9 | 7 | 8 |
| Support & Training | 15% | 8 | 8 | 7 |
| Cost | 20% | 7 | 6 | 9 |

You can assign weights to each criterion based on your needs. For example, if accuracy matters most, give it a higher weight. After you score each vendor, multiply each score by its weight and add up the results. The highest weighted total shows the best fit for your platform.
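If you keep the scorecard in a spreadsheet or a script, the weighted total is a one-line calculation. The Python sketch below uses the sample weights and scores from the table above. The function name and data layout are just one possible way to organize it.

```python
# Weights in percent and scores out of 10, copied from the sample scorecard.
WEIGHTS = {"Accuracy": 30, "Scalability": 20, "Integration": 15,
           "Support & Training": 15, "Cost": 20}

SCORES = {
    "Vendor A": {"Accuracy": 8, "Scalability": 7, "Integration": 9,
                 "Support & Training": 8, "Cost": 7},
    "Vendor B": {"Accuracy": 9, "Scalability": 8, "Integration": 7,
                 "Support & Training": 8, "Cost": 6},
    "Vendor C": {"Accuracy": 7, "Scalability": 6, "Integration": 8,
                 "Support & Training": 7, "Cost": 9},
}


def weighted_total(scores: dict, weights: dict) -> float:
    """Multiply each score by its percentage weight and sum the results."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must add up to 100%")
    return sum(weights[c] * scores[c] for c in weights) / 100


totals = {vendor: weighted_total(s, WEIGHTS) for vendor, s in SCORES.items()}
for vendor, total in sorted(totals.items(), key=lambda kv: -kv[1]):
    print(f"{vendor}: {total:.2f}")
```

With the sample numbers, Vendor A and Vendor B tie at 7.75 and Vendor C scores 7.35. A tie like this is a cue to revisit your weights or to let a pilot test decide between the front-runners.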

Tip: Involve your team in the scoring process. Different roles may notice different strengths or weaknesses.

You should always test a solution before making a final decision. Pilot testing lets you see how a tool works in real situations. Start with a small group of users or a limited set of content. Watch how the system handles real posts, images, or videos.

Pilot testing helps you spot problems early. You can see if the tool meets your needs and fits your workflow. If issues come up, you can address them before a full rollout.
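One way to turn a pilot into numbers is to have your own moderators label a sample of content and compare their labels with what the tool flagged. The Python sketch below computes precision (how many flags were correct) and recall (how many real violations were caught). The sample data is invented for illustration.

```python
from typing import List, Optional, Tuple


def pilot_report(flagged: List[bool], violating: List[bool]) -> Tuple[Optional[float], Optional[float]]:
    """Return (precision, recall) for a tool's flags against human labels."""
    if len(flagged) != len(violating):
        raise ValueError("both lists must cover the same items")
    pairs = list(zip(flagged, violating))
    true_pos = sum(f and v for f, v in pairs)        # flagged and really violating
    false_pos = sum(f and not v for f, v in pairs)   # flagged but actually fine
    false_neg = sum(v and not f for f, v in pairs)   # violating but missed
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else None
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else None
    return precision, recall


# Made-up pilot sample: what the tool flagged vs. what reviewers decided.
tool_flags = [True, True, False, False, True, False, False, True]
human_labels = [True, False, False, True, True, False, False, True]
print(pilot_report(tool_flags, human_labels))
```

Low precision means the tool removes too much legitimate content, which shows up later as appeals. Low recall means harmful content slips through. Which of the two matters more depends on your platform's risks.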

Callout: A successful pilot test gives you confidence in your buying decision. It also helps you plan for a smooth launch.

You need to keep your content moderation strategies up to date. Online threats change quickly. New types of misinformation and hate speech appear all the time. You should review your policies and guidelines on a regular schedule. Involve legal experts, data analysts, and your moderation team in these reviews. User feedback also helps you spot problems early.

A good strategy includes regular training for your moderators. You can hold workshops or short sessions to teach new trends and best practices. Both human and AI moderators need updates: people need refresher training, and models need retraining on recent examples. This training helps reduce mistakes and bias. It also keeps your team ready for new challenges.

Tip: Use feedback loops and quality checks to see how well your strategy works. If you find gaps, update your process right away.

You must stay flexible as your platform grows. Combine AI and human review to handle new risks. Set up workflows that let you escalate tough cases to experts. This approach helps you adapt when user behavior shifts or new laws appear.

Keep transparency and ethics at the center of your process. Explain how your AI tools work and why you make certain decisions. This builds trust with your users and keeps your platform safe.

Here are steps you can follow to adapt quickly:

When you review and adapt often, you keep your platform strong and your community safe.

You can make smart content moderation buying decisions by following a clear process. Start by understanding your platform’s needs. Compare solutions using scorecards and pilot tests. Review your strategy often as your platform grows. Balance automation with human review to keep your community safe and compliant.

Remember: Regular updates and a strong mix of tools help you protect your users and your brand for the long term.

You should focus on accuracy and scalability. A solution that handles your content volume and detects harmful material quickly will protect your users and brand.

You can use AI for fast, routine checks. Assign complex or sensitive cases to human moderators. This hybrid approach improves both speed and fairness.

Most modern moderation tools can integrate with your existing systems. They offer APIs or plugins that you can connect to your platform, analytics, or user management systems for seamless operation.