
Content Moderation Outsourcing Software: A Practical Guide

Before you evaluate any content moderation software, identify and categorize your specific needs. Start by listing the types of content your platform handles and the risks you want to mitigate. Align your content moderation strategy with your business goals and user safety requirements. Critical factors—such as reliability, scalability, and data security—should drive your decision. Many companies outsource moderation to save costs, access advanced tools, and keep communities safe. Use a checklist to compare options and ensure your solution fits your unique requirements.

Tip: Clearly defining requirements helps you choose a partner with the right experience, technology, and ethical standards.

Outsourcing content moderation helps you control costs and boost efficiency. You avoid fixed expenses like salaries, benefits, office space, and training. Instead, you pay only for the content moderation services you need. Many companies report up to 50% cost savings when they switch to outsourcing content moderation. You also skip the long process of hiring and training in-house teams. This means you can focus your resources on your core business. Outsourcing content moderation lets you scale up or down quickly, which is especially helpful for startups or fast-growing platforms.

Outsourcing content moderation often leads to faster turnaround times and improved user satisfaction.

When you choose content moderation outsourcing, you gain access to expert moderators and advanced technology. Providers offer deep industry knowledge and specialized skills that are hard to find in-house. They train their teams to follow your platform’s rules and understand cultural context. Many leading companies use a mix of AI and human moderators. For example, providers like Teleperformance and Accenture combine machine learning with human oversight to catch harmful content. This hybrid approach improves accuracy and keeps your platform safe.

Content moderation outsourcing makes it easy to handle sudden spikes in user-generated content. Providers can quickly add trained moderators and use AI tools to manage large volumes. You get 24/7 global coverage, so your platform stays safe at all times. Teams work across time zones and languages, ensuring your users have a consistent experience. Companies like Alorica and Cogito Tech offer multilingual support and cultural sensitivity, which helps you meet international standards.

AI and automation play a major role in modern content moderation. You can use AI to scan massive amounts of user-generated content in seconds. These systems detect spam, hate speech, explicit images, and other harmful material quickly. AI-powered tools excel at repetitive tasks and pattern recognition. They help you keep your platform safe by flagging obvious violations before users see them.

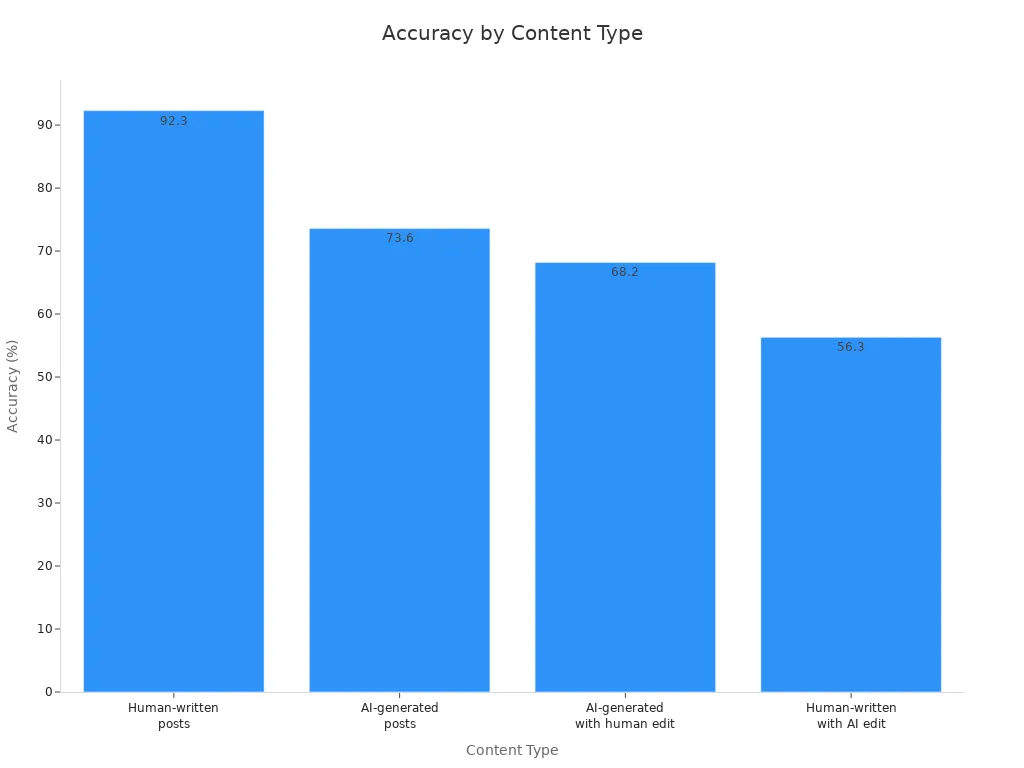

However, AI has limits. It often struggles with context, sarcasm, and cultural nuances. Large language models process content faster than humans, but they do not always match human accuracy in complex cases. On average the two are close: AI tools reach about 82% accuracy, while human moderators reach around 80-83%. The gap appears when you break accuracy down by content type:

| Content Type | Accuracy |
|---|---|
| Human-written posts | ~92.3% |
| AI-generated posts | ~73.6% |
| AI-generated with human edit | ~68.2% |
| Human-written with AI edit | ~56.3% |

Accuracy by Content Type

AI moderation works best for clear-cut cases, such as spam or explicit content. It offers speed, consistency, and cost savings. You can rely on AI for the first layer of defense, but you should not expect it to handle every situation perfectly.

Tip: Use AI for high-volume, repetitive tasks and let human moderators handle complex or sensitive content.

Human moderators remain essential for effective content moderation. You need people who understand context, emotion, and cultural differences. Human moderators excel at reviewing nuanced or ambiguous content, such as satire, harassment, or sensitive topics. They make decisions that require empathy and critical thinking.

Leading outsourcing firms select moderators with strong analytical skills, cultural awareness, and emotional resilience. They provide ongoing training and mental health support. Moderators learn your platform’s rules and stay updated on trends. This approach ensures high-quality moderation and protects your community.

Providers use a hybrid model, combining AI’s speed with human judgment. For example, Facebook uses algorithms for initial screening, then sends complex cases to human reviewers. This method reduces the workload for your team and improves decision quality.
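
The hybrid routing described above can be sketched in a few lines. This is a minimal illustration, not any provider's actual pipeline: the `violation_score` input, the two thresholds, and the `Decision` type are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune them against your own labeled data.
AUTO_REMOVE_THRESHOLD = 0.95
AUTO_APPROVE_THRESHOLD = 0.05


@dataclass
class Decision:
    action: str  # "remove", "approve", or "human_review"
    reason: str


def route(violation_score: float) -> Decision:
    """Route one item based on an AI model's violation score in [0, 1]."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= AUTO_REMOVE_THRESHOLD:
        return Decision("remove", "clear-cut violation flagged by AI")
    if violation_score <= AUTO_APPROVE_THRESHOLD:
        return Decision("approve", "AI found no sign of a violation")
    # Everything in between is ambiguous (sarcasm, satire, cultural context),
    # so it goes to a human reviewer instead of being decided automatically.
    return Decision("human_review", "ambiguous score, needs human judgment")


if __name__ == "__main__":
    for score in (0.99, 0.50, 0.01):
        print(score, route(score).action)
```

Widening the band between the two thresholds sends more items to human reviewers, which typically raises cost but reduces automated mistakes on nuanced content.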

Note: Human moderators receive continuous education and support to maintain accuracy and well-being.

Your platform may attract users from around the world. Multilingual support in content moderation helps you manage global communities. Top providers offer moderation in anywhere from 30 to more than 50 languages. They use native speakers and advanced moderation tools to ensure accuracy.

For example, Teleperformance supports over 50 languages, while Oworkers covers more than 30. Providers like DeepCleer offer services in multiple languages, including English, Spanish, Arabic, Hindi, German, Portuguese, and French. This broad coverage helps you meet international standards and respect cultural differences.

Multilingual teams allow you to deliver consistent content moderation across regions and time zones.

Custom filters let you tailor content moderation to your platform’s unique needs. You can block or allow specific words, phrases, or types of content. Filters help you detect profanity, hate speech, personal information, and more. Many platforms offer configurable filters that you can adjust as your community evolves.

Here are common filter options:

| Filter Category | Examples / Details |
|---|---|
| Profanity Filters | Detect and block offensive language |
| Noise Filters | Filter out irrelevant or disruptive background noise |
| Personally Identifiable Information (PII) Filters | Detect and block sensitive personal data such as emails, phone numbers, URLs |
| Custom Block and Allow Lists | User-defined lists to block or allow specific words, phrases, or content |
| Bullying Filters | Detect content related to harassment or bullying |
| Sexual Content Filters | Detect nudity, sexual acts, or inappropriate sexual content |
| Hate Speech Filters | Detect language or content promoting hate or discrimination |
| Violence Filters | Detect violent content or threats |
| Spam Filters | Detect unsolicited or irrelevant promotional content |
| Inappropriate Visuals | Detect images or videos with nudity, gore, or other harmful content |
| Culturally Sensitive Content | Filters tailored to specific cultural norms or sensitivities |

Common Content Moderation Filters

Custom filters, when combined with human review, give you flexibility and control over your content moderation strategy.
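
To make two of the filter categories concrete, here is a rough sketch of a custom block and allow list combined with a basic PII check. The word lists and regular expressions are illustrative assumptions; production filters are far more thorough, and these simple patterns will miss edge cases.

```python
import re

# Hypothetical lists; a real deployment would load these from configuration.
DEFAULT_BLOCK_LIST = {"kill", "spamcoin"}
# Platform-specific overrides: on a gaming forum, "kill" is normal vocabulary.
ALLOW_LIST = {"kill"}

# Deliberately simple patterns for illustration only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
WORD_RE = re.compile(r"[a-z0-9']+")


def check_text(text: str) -> list[str]:
    """Return the names of the filters that the text triggers."""
    flags = []
    words = set(WORD_RE.findall(text.lower()))
    # The allow list takes precedence over the block list.
    if words & (DEFAULT_BLOCK_LIST - ALLOW_LIST):
        flags.append("block_list")
    if EMAIL_RE.search(text) or PHONE_RE.search(text):
        flags.append("pii")
    return flags


if __name__ == "__main__":
    for sample in ("nice kill", "buy spamcoin now", "email me at a@b.com"):
        print(repr(sample), check_text(sample))
```

Items that trigger a filter can then be blocked outright or queued for human review, depending on how much risk each category carries on your platform.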

Real-time monitoring keeps your platform safe around the clock. You can detect and remove harmful content within seconds of publication. Leading platforms, such as Gcore, achieve average response times of 30 milliseconds worldwide. This speed is possible because of global infrastructure and high-capacity data centers.

Real-time content moderation services help you respond to threats quickly. You protect your users and your brand reputation. Fast response times also improve user trust and satisfaction.

Real-time monitoring is essential for live chats, streaming, and fast-moving online communities.

When you evaluate a provider for content moderation, reputation should be your starting point. A strong reputation shows that the provider delivers reliable and high-quality service. You want a partner who understands the complexities of content moderation and follows strict content moderation guidelines.

Consider these steps to assess reputation:

Tip: A provider with a good reputation often has dedicated teams, established workflows, and a history of meeting moderation policies.

Technology forms the backbone of effective content moderation. You need to know what tools and systems the provider uses. Modern providers combine AI, machine learning, and human review to deliver fast and accurate results.

Key points to evaluate:

A provider with advanced technology and strong quality control ensures your platform stays safe and compliant.

Industry experience makes a big difference in content moderation. Providers with years of experience know how to apply content moderation guidelines and moderation policies to different types of content. They understand the unique challenges of your industry and can make quick, unbiased decisions.

Benefits of choosing an experienced provider:

Experienced providers also use continuous learning and the latest technology to keep up with new trends. They offer multilingual support and can personalize their services to fit your brand.

Customer reviews give you real insight into a provider’s performance. Look for feedback on reliability, communication, and quality control. Reviews often highlight how well the provider follows content moderation guidelines and adapts to changing needs.

You can use this checklist when reading reviews:

Note: Positive reviews and long-term client relationships signal a provider who values transparency and continuous improvement.

A careful review of reputation, technology, industry experience, and customer feedback helps you choose the right partner for content moderation services. This approach ensures your platform meets high standards for safety, compliance, and user trust.

You must protect user data when you outsource content moderation. Leading providers use strict security measures to keep information safe. These steps help prevent data leaks and unauthorized access:

These practices create a strong foundation for safe content moderation. You should always ask your provider about their security protocols and how they align with your content moderation guidelines.

You need to follow all laws that apply to your platform and users. Content moderation outsourcing providers must comply with regulations such as GDPR, CCPA, and COPPA. These laws protect user rights and set rules for handling personal data. Your provider should show clear policies for data retention, breach notification, and user consent. Make sure their content moderation guidelines match legal standards in every country where you operate. This approach helps you avoid fines and keeps your reputation strong.

Tip: Review your provider’s compliance certifications and audit reports before signing any contract.

User privacy matters during every step of the content moderation process. Providers protect confidential user data by following strict privacy regulations and using strong security protocols. These actions keep personal information safe from misuse or exposure. You should confirm that your provider’s privacy policies support your own standards and content moderation guidelines. This step builds trust with your users and shows your commitment to safety.

You want your content moderation solution to work smoothly with your existing systems. Check if the provider supports your platform’s APIs and data formats. Many top providers offer plugins or SDKs for easy setup. Ask about compatibility with your website, mobile app, or social media channels. If you use cloud services, confirm that the moderation software can connect without delays. System compatibility helps you avoid technical issues and keeps your workflow efficient.

Tip: Test the software in a staging environment before you go live. This step helps you spot problems early.

Every platform has unique needs. You should look for content moderation tools that let you set your own rules and filters. Customization lets you decide what types of content to allow or block. Some providers let you create custom workflows for different user groups or regions. You can also adjust review queues and escalation paths. This flexibility helps you protect your brand and meet your community standards.

Customization ensures your moderation process matches your goals.
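
As one possible way to picture custom workflows, review queues, and escalation paths, the sketch below maps a region and content category to a workflow. Every region code, queue name, and team name here is hypothetical; real providers expose this kind of configuration in their own formats.

```python
from dataclasses import dataclass, field


@dataclass
class Workflow:
    review_queue: str
    escalation_path: list[str] = field(default_factory=list)


# Hypothetical fallback used when no specific rule matches.
DEFAULT_WORKFLOW = Workflow("general", ["senior_moderator"])

# Hypothetical per-region, per-category overrides.
WORKFLOWS = {
    ("eu", "hate_speech"): Workflow("eu_priority", ["senior_moderator", "legal_team"]),
    ("us", "minor_safety"): Workflow("us_urgent", ["trust_and_safety_lead"]),
}


def pick_workflow(region: str, category: str) -> Workflow:
    """Return the workflow for a region and category, or the default."""
    return WORKFLOWS.get((region.lower(), category.lower()), DEFAULT_WORKFLOW)


if __name__ == "__main__":
    print(pick_workflow("EU", "hate_speech"))
    print(pick_workflow("jp", "spam"))
```

Keeping the rules in data rather than in code makes it easier to adjust queues and escalation paths as your community standards change.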

A smooth onboarding process helps your team start strong. Good providers offer training, clear documentation, and support during setup. You should ask for a step-by-step guide to connect your systems. Many companies provide live demos or sandbox environments for practice. Onboarding should include a review of your content moderation policies and a test of all features. Fast onboarding means you can launch your solution quickly and with confidence.

Note: Choose a provider that offers ongoing support after onboarding. This support helps you adapt as your needs change.

When you explore content moderation outsourcing, you will find several pricing models. Each model fits different business needs and project sizes. The table below shows the most popular options and how they compare:

| Pricing Model | Description | Cost-Effectiveness & Suitability |
|---|---|---|
| Staffing Model | Hire individuals or teams for specific tasks over a set period. | Cost-effective for short-term projects; scalable; pay only for project staff, not full-time employees. |
| Fixed Price Model | Pay a fixed price for a project based on estimated time and resources. | Good for small or new projects; less flexible; provider assumes risk; best for predictable scope. |
| Time and Materials Model | Charges based on actual time and materials used. | Flexible and scalable; preferred for large, complex projects; cost based on actual effort and resources. |
| Cost-plus Pricing Model | Costs plus a fixed profit margin; adjustable as expenses change. | Adaptable; includes fixed fee or incentive payments; suits changing requirements. |
| Consumption-Based Pricing | Charges based on actual resource usage, like storage or bandwidth. | Flexible and scalable; easier cost management; harder to estimate for large or variable projects. |
| Profit-Sharing Pricing Model | Share a percentage of profits with the provider. | Aligns incentives; good for high-risk projects; fosters close collaboration. |
| Shared Risk-Reward Model | Both parties share risks and rewards, often combining fixed and variable fees. | Motivates performance; ideal for uncertain projects; encourages partnership. |
| Incentive-Based Pricing | Provider receives bonuses for hitting milestones or exceptional results. | Motivates better performance; rewards achievement beyond expectations. |

You should choose a model that matches your project’s complexity and your company’s risk tolerance.
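
A quick break-even calculation can help when comparing two of these models. The sketch below contrasts a fixed monthly fee with consumption-based pricing, assuming usage is billed per moderated item. That billing unit and all the prices are assumptions for illustration, not quotes from any provider.

```python
def consumption_cost(items_per_month: int, price_per_item: float) -> float:
    """Monthly cost when you pay per moderated item."""
    return items_per_month * price_per_item


def break_even_items(fixed_monthly_fee: float, price_per_item: float) -> float:
    """Monthly volume at which both models cost the same."""
    if price_per_item <= 0:
        raise ValueError("price_per_item must be positive")
    return fixed_monthly_fee / price_per_item


def cheaper_model(items_per_month: int, fixed_monthly_fee: float,
                  price_per_item: float) -> str:
    """Return which of the two models costs less at the given volume."""
    usage = consumption_cost(items_per_month, price_per_item)
    return "consumption" if usage < fixed_monthly_fee else "fixed"


if __name__ == "__main__":
    # Hypothetical numbers: a 10,000 flat fee versus 0.02 per item.
    for volume in (100_000, 1_000_000):
        print(volume, cheaper_model(volume, 10_000.0, 0.02))
```

Below the break-even volume, paying per item tends to be cheaper; above it, the fixed fee wins, provided the provider's fixed-price scope actually covers that volume.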

Content moderation outsourcing can bring hidden costs that impact your budget. Watch for these common issues:

These costs can add 15-20% or more to your expected rates. Always review contracts and ask questions to avoid surprises.
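
To budget for that overhead, you can turn a quoted rate into a planning range. This small sketch applies the 15-20% figure from above to a hypothetical monthly quote; the function name and the sample quote are illustrative only.

```python
def budget_range(quoted_monthly_cost: float,
                 low_pct: int = 15, high_pct: int = 20) -> tuple[float, float]:
    """Return (low, high) monthly estimates after hidden-cost overhead."""
    if quoted_monthly_cost < 0 or not 0 <= low_pct <= high_pct:
        raise ValueError("invalid cost or percentage range")
    low = quoted_monthly_cost * (100 + low_pct) / 100
    high = quoted_monthly_cost * (100 + high_pct) / 100
    return low, high


if __name__ == "__main__":
    # Hypothetical quote of 20,000 per month.
    print(budget_range(20_000))
```

Because the overhead can exceed 20%, treat the upper figure as a planning estimate and not as a ceiling.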

To measure the value of content moderation outsourcing, look beyond the price tag. Consider the quality of moderation, response times, and the provider’s ability to scale with your needs. Reliable partners help you protect your brand and keep your users safe. Fast, accurate moderation reduces risks and builds trust. When you assess value, include both direct costs and the long-term benefits of a safer, more engaged community.

Tip: Track your return on investment by monitoring user satisfaction, incident rates, and operational efficiency after you start outsourcing content moderation.

You need clear metrics to measure the success of your content moderation outsourcing. Key performance indicators (KPIs) help you track progress and spot areas for improvement. The right KPIs show if your provider meets your standards and keeps your platform safe.

Here is a table of important KPIs for content moderation outsourcing:

| KPI Category | Key Performance Indicators (KPIs) | Why It Matters |
|---|---|---|
| Quality | Accuracy Rate (error-free tasks) | Shows if content is moderated correctly. |
| Efficiency | First Contact Resolution, Cycle Time, Response Time, Resolution Time | Measures speed and effectiveness of issue handling. |
| Service Level | SLA Compliance | Confirms provider meets agreed standards. |
| Customer Satisfaction | Client Satisfaction Scores, CES, NPS | Reflects satisfaction of clients and users. |
| Cost-Effectiveness | Cost per Transaction, Cost Savings, Profit Margins | Tracks financial efficiency of outsourcing. |
| Risk Management | Vendor Performance, Business Continuity | Checks reliability and ability to handle disruptions. |

You should review these KPIs regularly. High accuracy rates and fast response times mean your users stay safe. Good customer satisfaction scores show your community trusts your platform.

Tip: Set clear targets for each KPI before you start. This helps you and your provider stay aligned.
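
Several of these KPIs are simple ratios you can compute from an audit sample. The sketch below assumes you keep a log of provider decisions alongside your own audited decisions and response times. The `ReviewedItem` fields and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReviewedItem:
    provider_decision: str   # e.g. "remove" or "approve"
    audited_decision: str    # ground truth from your own quality audit
    response_seconds: float  # time from submission to provider decision


def _require_items(items: list[ReviewedItem]) -> None:
    if not items:
        raise ValueError("need at least one reviewed item")


def accuracy_rate(items: list[ReviewedItem]) -> float:
    """Share of items where the provider matched the audited decision."""
    _require_items(items)
    correct = sum(1 for i in items if i.provider_decision == i.audited_decision)
    return correct / len(items)


def sla_compliance(items: list[ReviewedItem], sla_seconds: float) -> float:
    """Share of items decided within the agreed response time."""
    _require_items(items)
    on_time = sum(1 for i in items if i.response_seconds <= sla_seconds)
    return on_time / len(items)


def cost_per_transaction(total_cost: float, items: list[ReviewedItem]) -> float:
    """Average cost per moderated item."""
    _require_items(items)
    return total_cost / len(items)


if __name__ == "__main__":
    sample = [
        ReviewedItem("remove", "remove", 12.0),
        ReviewedItem("approve", "approve", 30.0),
        ReviewedItem("approve", "remove", 45.0),
        ReviewedItem("remove", "remove", 90.0),
    ]
    print(accuracy_rate(sample), sla_compliance(sample, 60.0),
          cost_per_transaction(2.0, sample))
```

Comparing these values against the targets you set for each KPI gives you a concrete agenda for performance reviews with your provider.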

Measuring success does not stop after launch. You need to review your content moderation process often. Schedule regular meetings with your provider to discuss performance. Use KPI reports to guide these talks. Look for trends in accuracy, speed, and user feedback.

Ask your team and users for input. Their feedback can reveal hidden issues or new risks. Update your moderation guidelines as your platform grows. Stay flexible and ready to adjust your approach.

Continuous review helps you catch problems early. You keep your platform safe and your users happy. Strong oversight also builds trust with your provider and supports long-term success.

You face real risks when you share sensitive data with third-party vendors. Many companies lose direct control over data security during content moderation outsourcing. Data breaches and misuse of information can happen if you do not set strict rules. These problems often arise because your data leaves your company’s immediate oversight. To reduce these risks, choose vendors who follow strong data protection laws. Always include confidentiality clauses in your contracts. Use strong data encryption methods to protect information. Schedule regular security audits to check if your vendor follows your data protection policies.

Never overlook security. A single breach can damage your brand and user trust.

Integration problems can slow down your workflow and cause frustration. If your content moderation software does not work well with your existing systems, you may see delays or errors. You might also lose important data or miss harmful content. To avoid these issues, test the software in a safe environment before you go live. Work closely with your vendor to map out the integration process. Make sure your team understands how to use the new tools.

Ignoring user experience can lead to unhappy users and a damaged reputation. If moderation is too slow or inaccurate, users may leave your platform. Poorly designed workflows can frustrate both users and moderators. You need to balance safety with a smooth experience.

Listen to user feedback and adjust your process as needed.

Set up quality control reviews to ensure your moderation meets your standards. Fast, fair, and accurate moderation keeps your community safe and engaged.

To choose the right content moderation outsourcing software, you should:

Use this guide as your checklist for every step.

Start by assessing your current moderation needs. Then begin evaluating providers who match your goals and standards.

You can moderate text, images, videos, audio, and live streams. Most providers support user-generated content on forums, social media, e-commerce, and gaming platforms.

Most providers offer real-time or near real-time moderation. You often see response times under one minute. Fast action helps you protect your users and brand.

Yes. You set your own rules and filters. Providers let you adjust workflows, blocklists, and escalation paths. This flexibility helps you meet your unique needs.

Reputable providers use strong security protocols. They follow data protection laws and run regular audits. Always check your provider’s certifications and ask about their privacy policies.