Content Moderation Solutions 2025: AI Innovations, Trends & Top Providers

You face a digital world where content moderation demands speed, accuracy, and fairness. In 2025, content moderation solutions blend AI with human insight to manage risks and protect users.

AI now drives the most advanced content moderation solutions. You see platforms like DeepCleer, Amazon Rekognition, and Microsoft Content Moderator API leading the way. These systems use machine learning, natural language processing, and computer vision to scan text, images, video, and audio. You benefit from faster, more accurate, and scalable content moderation that keeps your platform safe and compliant.

You need more than simple keyword filters to keep your community safe. Contextual AI brings a new level of understanding to content moderation. It analyzes not just the words, but also the intent, tone, and context behind each post or image. This means you can catch harmful content that older systems might miss, such as sarcasm, coded language, or cultural references.

You see this technology in action across industries. In healthcare, contextual AI uses patient history and demographics to make safer decisions. In finance, it spots fraud by analyzing behavioral patterns. For content moderation, this means fewer mistakes and better protection for your users.

Tip: Contextual AI helps you avoid over-enforcement, so important conversations—like breast cancer awareness—do not get flagged by mistake.
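To make the over-enforcement problem concrete, here is a toy sketch that contrasts a plain keyword filter with a check that looks at surrounding words. It is a rule-based stand-in, not a contextual AI model: the blocklist, the context cues, and both function names are hypothetical and chosen only for illustration. Production systems would use trained language models instead of word lists.

```python
import re

# Hypothetical blocklist entry and context cues, for illustration only.
BLOCKED_TERMS = {"breast"}
HEALTH_CONTEXT = {"cancer", "screening", "awareness", "mammogram"}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def keyword_filter(text):
    """Flag any post that contains a blocked term, regardless of context."""
    return any(tok in BLOCKED_TERMS for tok in tokenize(text))


def context_aware_filter(text):
    """Flag a blocked term only when no health-context cue appears nearby."""
    tokens = set(tokenize(text))
    if not tokens & BLOCKED_TERMS:
        return False
    return not (tokens & HEALTH_CONTEXT)


post = "Join our breast cancer awareness walk this Saturday"
print(keyword_filter(post))        # True: the keyword filter over-enforces
print(context_aware_filter(post))  # False: the health context clears the post
```

The second function still depends on hand-written word lists, so it only hints at what a learned model does when it weighs intent and tone.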

Your users speak many languages and share content in many formats. Modern content moderation tools are built for this diversity. Platforms like TikTok and tools like LionGuard 2 use multilingual, multimodal AI to scan text, images, audio, and video in real time.

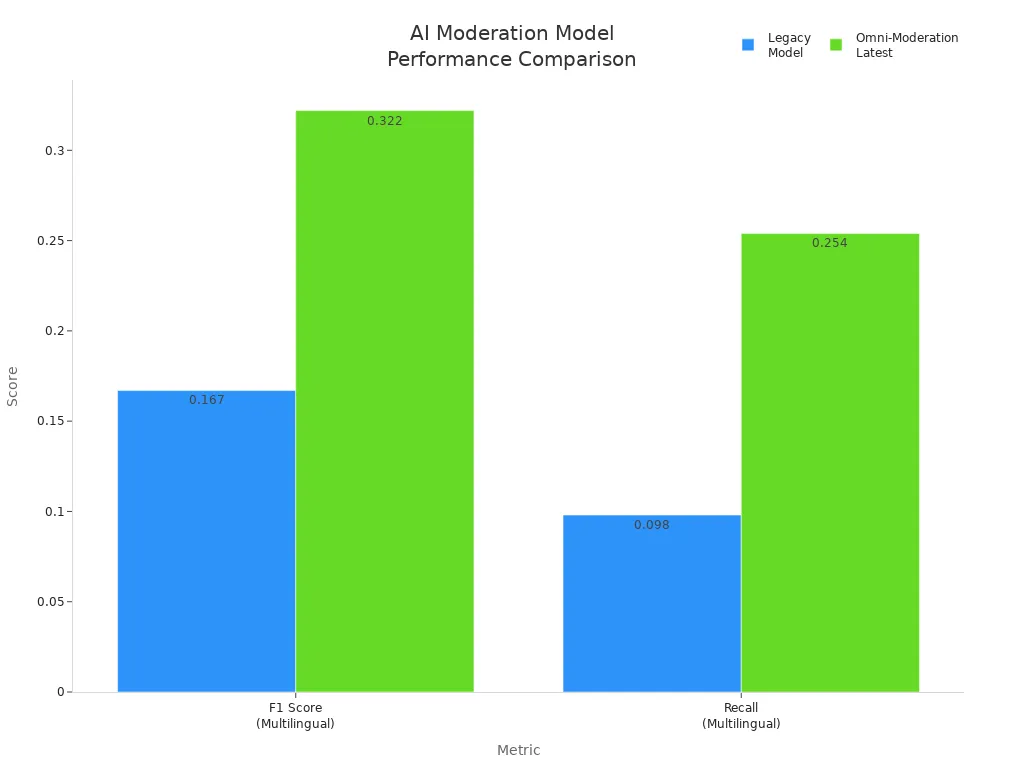

Here’s how the latest models compare:

| Metric | Legacy Model Performance | Omni-Moderation-Latest Performance |
|---|---|---|
| Input Types | Text only | Text and images |
| Content Categories Supported | 11 categories | 13 categories (2 additional) |
| Language Support | Primarily Latin languages | Enhanced accuracy across 40 languages, with large gains in low-resource languages like Telugu (6.4x), Bengali (5.6x), Marathi (4.6x) |
| F1 Score (Multilingual Dataset) | 0.167 | 0.322 (significantly higher) |
| Precision | Higher (fewer false positives) | Lower (more false positives) |
| Recall | Lower (0.098 in multilingual) | Higher (0.254 in multilingual, 2.5x legacy) |
| Latency | Comparable, minimal differences | Comparable, minimal differences |

AI Moderation Model Performance Comparison

You gain better recall and broader language coverage, which is vital for global platforms. However, the newer model's lower precision means more false positives, so human review remains important.
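The table gives F1 and recall figures but no precision figures. If you assume the reported F1 is the harmonic mean of precision and recall measured on the same multilingual dataset (an assumption; the figures could be averaged differently), you can back out an approximate precision by solving F1 = 2PR / (P + R) for P. The function name below is hypothetical.

```python
def implied_precision(f1, recall):
    """Solve F1 = 2PR / (P + R) for P, given F1 and recall R."""
    denom = 2 * recall - f1
    if denom <= 0:
        raise ValueError("inconsistent inputs: F1 must be below 2 * recall")
    return f1 * recall / denom


# F1 and recall values taken from the comparison table above.
legacy = implied_precision(0.167, 0.098)
latest = implied_precision(0.322, 0.254)

print(f"legacy precision ~ {legacy:.3f}")  # legacy precision ~ 0.564
print(f"latest precision ~ {latest:.3f}")  # latest precision ~ 0.440
```

Under that assumption, the numbers agree with the table's qualitative claim: the newer model trades some precision for much higher recall.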

You see the best results in content moderation when you combine AI with human expertise. Human-in-the-loop (HITL) systems let AI handle large volumes of content quickly. You step in when the AI faces ambiguous or sensitive cases. This approach prevents over-filtering and misinterpretation.

Your decisions on escalated cases also serve as feedback for the AI. Over time, this feedback makes the system more accurate. Human review also keeps content moderation aligned with ethical standards and builds user trust.

Note: Human-in-the-loop systems let you combine the strengths of both AI and human judgment, leading to better outcomes for your platform.
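One common way to implement this split is confidence-based routing: the model acts on its own only when it is very sure, and everything in between goes to a human queue. The sketch below assumes a model that returns a harm score between 0 and 1. The class name, the thresholds, and the feedback format are all hypothetical, and you would tune the thresholds per policy category.

```python
from dataclasses import dataclass, field


@dataclass
class HitlRouter:
    # Assumed thresholds; real values depend on your policies and error costs.
    remove_at: float = 0.95
    approve_at: float = 0.05
    feedback: list = field(default_factory=list)

    def route(self, score):
        """Decide who handles an item, based on the model's harm score."""
        if score >= self.remove_at:
            return "auto_remove"
        if score <= self.approve_at:
            return "auto_approve"
        return "human_review"

    def record_feedback(self, content_id, score, human_label):
        """Store the moderator's decision so it can be used for retraining."""
        self.feedback.append((content_id, score, human_label))


router = HitlRouter()
print(router.route(0.99))  # auto_remove
print(router.route(0.50))  # human_review
router.record_feedback("post-123", 0.50, "allowed")
```

Widening the gap between the two thresholds sends more items to people, which raises cost but lowers the risk of automated mistakes.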

You need to protect user privacy while keeping your platform safe. Privacy-first approaches in content moderation help you achieve this balance. You can use PII filtering to remove personally identifiable information (PII), such as names or addresses, before content reaches moderators or AI systems. This step keeps sensitive data secure and reduces the risk of data leaks.
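As a minimal sketch of PII filtering, the example below redacts email addresses and phone-like numbers with regular expressions before the text is passed on. The patterns and the `redact_pii` name are illustrative assumptions, not a complete solution: names and street addresses generally need a named-entity recognition model, which is not shown here.

```python
import re

# Simplified patterns, assumed for illustration; real PII detection is broader.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
PHONE = re.compile(r"(?<!\w)\+?\d[\d\s().-]{7,}\d(?!\w)")


def redact_pii(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


print(redact_pii("Contact me at jane.doe@example.com or +1 (555) 010-9999."))
# Contact me at [EMAIL] or [PHONE].
```

Running redaction before review means neither moderators nor downstream models see the raw identifiers.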

Many platforms now use compliance-focused moderation to meet strict privacy laws. You must follow rules like GDPR and the Digital Services Act. These laws require you to handle user data carefully and explain how you use it. You should store only what you need and delete data when it is no longer necessary. By using privacy-first tools, you show users that you respect their rights and keep their information safe.

Tip: Choose content moderation solutions that offer strong privacy controls and clear data handling policies. This builds trust and helps you avoid legal trouble.

You want to know why content gets flagged or removed. Explainable AI (XAI) gives you clear answers. XAI tools show which words, images, or patterns led to a moderation decision. This transparency helps you understand the process and spot mistakes or bias.
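To illustrate what "showing which words led to a decision" can look like, here is a small sketch built on a linear scoring model, where each known term adds a fixed weight to the total. The weights, the threshold, and the `explain` function are hypothetical. Real classifiers are usually neural models and need attribution methods to produce similar breakdowns, but the output shape is comparable.

```python
import re

# Hypothetical per-term weights; a real system would learn these from data.
WEIGHTS = {"scam": 1.5, "free": 0.4, "click": 0.6, "thanks": -0.5}
THRESHOLD = 2.0  # assumed decision threshold


def explain(text):
    """Score a post and report how much each known term contributed."""
    tokens = re.findall(r"[a-z']+", text.lower())
    contributions = {}
    for tok in tokens:
        if tok in WEIGHTS:
            contributions[tok] = contributions.get(tok, 0.0) + WEIGHTS[tok]
    score = sum(contributions.values())
    # Rank terms by the size of their effect on the decision.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return {"flagged": score >= THRESHOLD, "score": score, "top_terms": ranked}


result = explain("Click here for a free scam")
print(result["flagged"])       # True
print(result["top_terms"][0])  # ('scam', 1.5)
```

Storing this breakdown next to each decision gives reviewers and auditors something concrete to check.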

Regulations like the Digital Services Act and the AI Act now require platforms to explain how AI systems make decisions. You must keep detailed logs and run bias audits to stay compliant. XAI supports compliance-focused moderation by making your decisions easy to review and defend.

You improve content moderation by using XAI. You make your platform safer, fairer, and more open. When you explain your decisions, users feel respected and protected.

You need content moderation that grows with your platform. Cloud-based tools give you the flexibility to handle sudden spikes in user activity. In 2023, over 67% of companies chose cloud services for content moderation because these tools scale quickly and do not require heavy investments in hardware. Large enterprises rely on these solutions to manage huge amounts of user content in many languages and cultures. You can use advanced AI and machine learning to improve accuracy and speed, even as your platform grows.

Edge tools work with cloud systems to give you fast results. Real-time detection pipelines use both edge and cloud inference. Edge inference gives you ultra-low latency for live content, such as livestreams. Cloud inference handles deeper checks with bigger models. This hybrid approach keeps your content moderation solutions stable and cost-effective, even during traffic spikes.
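The hybrid pattern described above can be sketched as a two-tier decision: a small, fast edge model handles the clear cases, and only uncertain items are escalated to a larger cloud model. Everything in this sketch is an assumption for illustration, including the function name, the thresholds, and the stand-in models, which are plain functions returning a harm score between 0 and 1.

```python
def moderate(item, edge_model, cloud_model, low=0.2, high=0.8):
    """Return (decision, tier), escalating to the cloud only when unsure."""
    edge_score = edge_model(item)
    if edge_score >= high:
        return ("block", "edge")
    if edge_score <= low:
        return ("allow", "edge")
    # The edge model is uncertain, so pay the extra latency for a deeper check.
    cloud_score = cloud_model(item)
    return ("block" if cloud_score >= 0.5 else "allow", "cloud")


# Stand-in models for demonstration; real ones would run inference.
def edge(text):
    return 0.9 if "spam" in text else 0.5 if "maybe" in text else 0.1


def cloud(text):
    return 0.7 if "maybe bad" in text else 0.3


print(moderate("hello", edge, cloud))      # ('allow', 'edge')
print(moderate("spam link", edge, cloud))  # ('block', 'edge')
print(moderate("maybe bad", edge, cloud))  # ('block', 'cloud')
```

Because most traffic is resolved at the edge, cloud cost grows with the share of ambiguous items instead of with total volume.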

Tip: Modular AI models let you update or replace parts of your system without stopping the whole process. This makes your content moderation more flexible and future-proof.

You face new rules and standards all the time. Automated policy management systems help you keep up. These systems watch for changes in laws and regulations. They send you real-time alerts when something changes, so you can act fast. You get features like obligation mapping, risk tracking, and automated categorization. These tools help you link new rules to your company’s own risks and priorities.

Automated policy management also supports many languages and compares old and new rules to highlight changes. Workflow automation sends updates to the right people, making sure your team responds quickly. AI tags laws, extracts obligations, and summarizes changes, so you understand what to do next. With these systems, you improve record-keeping, audit readiness, and security. You reduce human error and make your content moderation more reliable.
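The "compare old and new rules" step can be approximated with a plain line diff. The sketch below uses Python's standard `difflib` module. The policy text and the `policy_changes` function are made up for illustration, and a real system would add the tagging and summarization on top of this.

```python
import difflib


def policy_changes(old_text, new_text):
    """List the lines removed from and added to a policy document."""
    old_lines = old_text.splitlines()
    new_lines = new_text.splitlines()
    changes = []
    for line in difflib.ndiff(old_lines, new_lines):
        if line.startswith("- "):
            changes.append(("removed", line[2:]))
        elif line.startswith("+ "):
            changes.append(("added", line[2:]))
        # Lines starting with "  " (unchanged) or "? " (hints) are skipped.
    return changes


old = "Respond to notices within 48 hours.\nKeep logs for 6 months."
new = "Respond to notices within 24 hours.\nKeep logs for 6 months."
for kind, text in policy_changes(old, new):
    print(kind, "|", text)
```

Each changed line can then be routed to the team that owns the affected obligation.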

You have many choices when selecting content moderation companies in 2025. The top providers stand out for their accuracy, flexibility, and ability to handle different types of content. They also support many languages and offer real-time moderation.

You should evaluate content moderation companies based on several criteria. Look for accuracy and flexibility, real-time moderation, support for multiple content types and languages, and ease of integration. Compliance, transparency, scalability, cost efficiency, and moderator experience also matter. Each company offers unique strengths, so you can match your needs with the right provider.

You can choose from different service models when using content moderation services. Each model fits different business needs and resources. The table below shows the main differences:

| Aspect | Software Tools | APIs | Managed Service Models |
|---|---|---|---|
| Core Features | AI-powered filters, dashboards | Developer interfaces, easy integration | AI automation plus human expertise |
| Flexibility | High, supports nuanced content | Moderate, needs custom development | High, balances automation and human review |
| Scalability | May struggle with large volumes | Built for speed and scale | Scalable, supports large teams |
| Human Oversight | Supports human moderation | Mostly automated | Strong human moderation with AI |
| Use Cases | Customization, complex content | Fast, API-based solutions | Compliance, contextual understanding |
| Challenges | Scalability, consistency | Integration effort, less support | Higher cost, team management |
| Best For | Businesses needing human oversight | High-volume, fast integration | Organizations needing compliance and balance |

You might pick software tools if you want to customize your moderation process. APIs work well if you need fast integration and handle large volumes of content. Managed service models give you a complete solution that combines AI and human expertise, which helps you meet compliance needs without building a large moderation team yourself. Choose the best fit based on your platform's size, complexity, and goals.

Tip: Review your platform’s needs before choosing among content moderation companies and service models. The right choice helps you protect your users and grow your business.

You face unique challenges when moderating content on social media platforms. Every day, users create massive amounts of posts, comments, and videos. You must balance free speech with the need to remove harmful or illegal material. Detecting the intent behind posts, such as sarcasm or coded language, requires advanced tools. New threats like deepfakes and synthetic media add more complexity.

You can address these challenges by investing in advanced AI tools that automate and scale content moderation. Combining AI with human oversight helps you make better decisions. You should also keep your moderation policies transparent and adapt them to local laws and cultures.

Tip: Regular training and support for moderators improve judgment and resilience.

You encounter special issues in gaming communities, especially with real-time voice and chat moderation. Manual filtering cannot keep up with the speed and complexity of live conversations. Players often use slang, sarcasm, or speak over each other, making moderation difficult.

You must meet strict regulations, such as the EU Digital Services Act, which require fast and accurate moderation. Combining AI scalability with human oversight helps you build trust and keep your gaming platform safe.

You face different content moderation challenges in e-commerce. You must ensure product reviews are authentic and prevent counterfeit listings. Automated systems sometimes misinterpret sarcasm or cultural differences, leading to unfair moderation. You also need to protect user privacy and avoid algorithmic bias.

You can use machine learning, natural language processing, and computer vision to handle diverse content types and scale your efforts. Continuous improvement and user feedback help you refine your approach and maintain a safe marketplace.

You now see content moderation evolving with AI and human collaboration, real-time analytics, and privacy-first design. These innovations boost accuracy, safety, and scalability while raising ethical standards. To achieve effective content moderation, you should adopt ethical AI frameworks, invest in diverse datasets, and involve community voices. Regularly update your policies and demand transparency from providers. As regulations and technology advance, you can build safer, more inclusive digital spaces for everyone.

Content moderation means reviewing and managing user-generated content. You use it to remove harmful, illegal, or inappropriate material. This process helps keep your platform safe and trustworthy for everyone.

AI scans large amounts of content quickly. You benefit from faster detection of harmful material. AI also learns from patterns, which helps you catch new threats and reduce manual work.

You need human moderators for complex or sensitive cases. AI can miss context or make mistakes. Human judgment brings empathy and fairness, especially when content is ambiguous or controversial.

Privacy-first tools filter out personal information before review. You keep user data safe by following strict privacy laws. These tools help you build trust and avoid legal risks.