Content Moderation Training Data Buying Guide for 2025

Imagine a digital platform where inappropriate content slips through undetected, damaging trust and safety for users. Choosing the right content moderation training data in 2025 means balancing risks such as false positives, privacy concerns, and cultural bias against opportunities for efficiency and reputation gains. Platform goals, including regulatory compliance and business interests, shape the selection of data. Effective content moderation protects brand reputation and ensures digital platforms meet trust and safety expectations.

High-quality data forms the backbone of effective content moderation. Automated systems rely on well-annotated datasets to identify harmful or inappropriate material. Researchers have found that manually labeled data, especially with human explanations, improves the accuracy of these systems. However, manual annotation can introduce costs and bias, especially in subjective areas like hate speech.

Fine-tuning large language models with quality examples helps these systems handle complex or contextual content better. Off-the-shelf models without this fine-tuning often miss important signals.

Users expect digital platforms to protect them from harmful content. Quality training data, especially when it includes human feedback and preference rankings, helps AI models become more helpful and less likely to cause harm. This improvement in model performance and safety builds user trust.

Low-quality or improperly sourced data creates serious compliance risks for companies. Unsafe data, such as toxic language or explicit content, can slip through weak content moderation systems.

Regulatory changes in 2025, such as new child protection laws and expanded definitions of personal information, will require platforms to improve data governance and recordkeeping. Companies must adapt their content moderation practices to meet these evolving standards and maintain trust and safety for all users.

Platforms face new challenges as user-generated content expands beyond text to include images, video, audio, and live streams. Each format brings unique risks and requires diverse, well-labeled data for effective content moderation. AI content moderation systems must adapt quickly to these changes. They need continuous updates to recognize new communication styles and threats.

Real-time detection becomes critical for live streams and fast-moving conversations. Platforms must balance speed with accuracy to prevent harmful material from spreading.

A wide range of challenges arise with evolving content types:

| Challenge Category | Description & Key Points |
|---|---|
| Scalability | Platforms must manage large content volumes and peak user activity without losing quality. |
| Multilingual Moderation | Moderation must address many languages and cultures, requiring accurate translation and local expertise. |
| Real-time Content Monitoring | Live streams demand immediate action to block objectionable content. |
| Deepfake and Manipulated Content | Advanced AI content moderation tools must detect fake videos and images to maintain trust. |

AI content moderation now relies on large language models (LLMs) to automate decisions and improve efficiency. These models use policy-as-prompt methods, encoding rules directly into prompts. This approach reduces the need for massive annotated datasets. LLMs can reach near-human accuracy in some cases, such as detecting toxic or AI-generated content. They also help develop better moderation policies by comparing AI and human decisions.
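
To make the policy-as-prompt idea concrete, here is a minimal sketch of how written rules might be encoded into a prompt. The `PolicyRule` class, the `build_policy_prompt` function, and the two example rules are hypothetical and chosen only for illustration. The sketch stops at building the prompt text and does not call any particular model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    """One moderation rule; labels and wording are illustrative only."""
    label: str
    description: str


def build_policy_prompt(rules: list[PolicyRule], content: str) -> str:
    """Encode the policy rules and the item under review into one prompt."""
    lines = ["You are a content moderator. Apply the policy below.", "", "Policy:"]
    for i, rule in enumerate(rules, start=1):
        lines.append(f"{i}. {rule.label}: {rule.description}")
    allowed = ", ".join(rule.label for rule in rules)
    lines += [
        "",
        f"Answer with exactly one label from: {allowed}, none.",
        "",
        "Content:",
        content,
    ]
    return "\n".join(lines)


# Hypothetical policy used only to demonstrate the prompt layout.
EXAMPLE_RULES = [
    PolicyRule("harassment", "Insults or threats aimed at a person."),
    PolicyRule("spam", "Unsolicited promotion or repeated links."),
]

prompt = build_policy_prompt(EXAMPLE_RULES, "Buy followers now at example.com!")
print(prompt)
```

Because the rules live in data and not in model weights, a policy change in this setup means editing the rule list and regenerating the prompt, with no need to relabel a dataset.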

However, AI content moderation still faces limits. LLMs sometimes miss subtle or implicit toxicity. They require human oversight to ensure fairness and consistency. Real-time detection remains a challenge, especially for new content types. As platforms expand into virtual and augmented reality, AI content moderation must evolve to handle new risks.

Human moderators remain essential for content moderation. They provide judgment on context, intent, and cultural nuance that AI content moderation cannot match. Human review is especially important for high-risk or borderline cases and for appeals. Platforms like Facebook use global teams to review AI-flagged content, ensuring fairness and adapting policies as threats change.

Automated systems offer speed and scale, but they lack the depth of human understanding. A hybrid approach combines AI content moderation for volume with human review for complex decisions. This balance helps platforms manage billions of posts and maintain user trust. As digital spaces grow, human moderation will expand to cover real-time detection and conduct in new environments.
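
One common way to implement a hybrid setup like this is to route each item by the model's confidence. The sketch below assumes the model returns a harm score between 0 and 1. The function name `route_item` and the thresholds 0.95 and 0.20 are hypothetical and would need tuning per platform.

```python
def route_item(harm_score: float,
               remove_above: float = 0.95,
               allow_below: float = 0.20) -> str:
    """Route one item based on a model's harm score in [0, 1].

    Thresholds are illustrative assumptions, not recommended values.
    """
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if harm_score >= remove_above:
        return "auto_remove"   # model is confident the item is harmful
    if harm_score < allow_below:
        return "auto_allow"    # model is confident the item is safe
    return "human_review"      # uncertain band goes to a moderator


for score in (0.99, 0.50, 0.05):
    print(score, route_item(score))
```

Widening the band between the two thresholds sends more items to human reviewers, which raises cost but reduces the number of automated mistakes on borderline cases.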

Every organization must set clear goals before acquiring content moderation training data. These goals shape the entire content moderation process and help teams focus on what matters most. A well-defined strategy ensures that the data supports both platform safety and business objectives.

Tip: Regularly update your goals and guidelines to keep up with new content types, threats, and legal changes.

Diverse training data is essential for robust content moderation. Without diversity, AI models may miss harmful content or show bias against certain groups. Industry benchmarks now focus on socio-cultural and demographic diversity to improve fairness and accuracy.

A diverse dataset should include:

| Attribute | Description |
|---|---|
| Content Types | Text, images, audio, video, and mixed formats |
| Demographic Coverage | Mainstream and underrepresented groups |
| Language and Culture | Multiple languages and cultural contexts |
| Sensitive Topics | Hate speech, misinformation, self-harm, sexual content, and emerging risks |
| Real-World Scenarios | Common and rare cases, including edge cases |

Note: Balanced and well-labeled data helps both AI and human moderators make accurate decisions in the content moderation process.
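
A quick coverage check can show whether a candidate dataset is skewed toward a few languages or content types. The sketch below assumes each sample is a dictionary with metadata fields such as `language` and `content_type`. The field names, the `underrepresented` helper, and the 5% cutoff are hypothetical.

```python
from collections import Counter


def underrepresented(samples: list[dict], attribute: str,
                     min_share: float = 0.05) -> dict[str, float]:
    """Return attribute values whose share of the dataset is below min_share."""
    if not samples:
        return {}
    counts = Counter(sample[attribute] for sample in samples)
    total = len(samples)
    return {
        value: count / total
        for value, count in counts.items()
        if count / total < min_share
    }


# Toy dataset: 90 English text items, 8 Spanish text items, 2 Swahili audio items.
samples = (
    [{"language": "en", "content_type": "text"}] * 90
    + [{"language": "es", "content_type": "text"}] * 8
    + [{"language": "sw", "content_type": "audio"}] * 2
)

language_gaps = underrepresented(samples, "language")
type_gaps = underrepresented(samples, "content_type")
print(language_gaps, type_gaps)
```

A check like this only finds values that are present but rare. Languages or formats that are missing entirely have to be compared against a list of what the platform actually needs to cover.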

Privacy and security remain top priorities when sourcing content moderation training data. Organizations must protect user information and follow all legal and ethical standards.

Key data attributes include relevance to the moderation task, high quality, and real-world representation. Data must be diverse, balanced, and free from bias. Labels should be clear, and data must be sourced legally and ethically. Keeping data up to date ensures models stay effective.

Organizations should always review privacy laws and update security practices to match new threats and regulations.

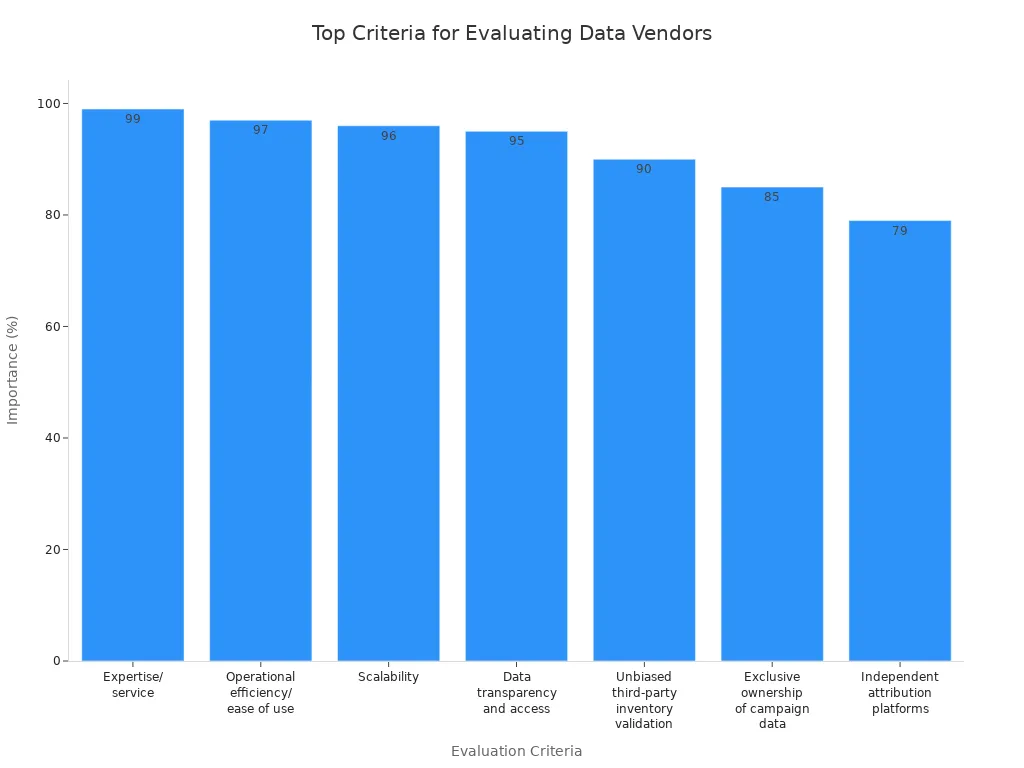

Selecting the right vendor for content moderation data shapes the success of any moderation strategy. Buyers must look beyond price and consider transparency, expertise, and operational efficiency. Recent surveys show that buyers value several criteria when evaluating vendors. The table below highlights the most important factors:

| Evaluation Criteria | Importance (%) |
|---|---|
| Expertise/service | 99 |
| Operational efficiency/ease of use | 97 |
| Scalability | 96 |
| Data transparency and access | 95 |
| Unbiased third-party inventory validation | 90 |
| Exclusive ownership of campaign data | 85 |
| Independent attribution platforms | 79 |

Top Criteria for Evaluating Data Vendors

Vendors must offer clear data access and transparent practices. Buyers should check for unbiased third-party validation and ensure exclusive ownership of campaign data. Operational efficiency and scalability help platforms handle large volumes of content. Expertise in content moderation services ensures that vendors understand the unique risks and requirements of digital platforms.

Organizations must also assess risks when working with third-party vendors. Common risks include data breaches, compliance failures, and reputational harm. The table below outlines these risks and ways to reduce them:

| Risk Type | Description | Impact/Consequences | Mitigation Strategies |
|---|---|---|---|
| ESG Risks | Vendors not following environmental, social, or governance standards. | Ethical issues, reputational harm, compliance risks, loss of business. | Due diligence, regular engagement, alignment with ESG goals. |
| Reputational Risk | Vendor actions or data breaches harming company reputation. | Loss of trust, negative publicity, brand damage. | Monitoring, due diligence, incident response plans. |
| Financial Risk | Vendors causing excessive costs or lost revenue. | Reduced profits, higher costs, instability. | Audits, performance checks, contingency planning. |
| Operational Risk | Vendor process failures disrupting operations. | Downtime, inability to perform daily activities. | Continuity planning, key vendor identification, regular testing. |
| Cybersecurity Risk | Vendor vulnerabilities leading to breaches or attacks. | Data loss, unauthorized access, malware. | Security assessments, risk thresholds, ongoing monitoring. |
| Information Security Risk | Unsecured vendor access, ransomware, or data breaches. | Exposure of sensitive data, penalties, loss of trust. | Limiting access, audits, security protocols. |
| Compliance Risk | Vendor non-compliance with laws and regulations. | Fines, legal actions, reputational damage. | Compliance alignment, audits, updated strategies. |
| Strategic Risk | External threats like geopolitical events or disasters affecting supply chains. | Disruptions, increased costs, delays. | Diversifying suppliers, local sourcing, risk audits. |

Tip: Always perform due diligence and regular audits to ensure vendors meet security, compliance, and ethical standards.

Balancing cost and quality is a major challenge in the content moderation process. High-quality data often requires more investment, but it leads to better results and safer platforms. Buyers must weigh the benefits of precision and recall against operational complexity and cost. Adjusting moderation thresholds for different platforms and user groups can increase both quality and expenses.
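
The trade-off between precision and recall becomes easier to see with a small threshold sweep. The sketch below uses made-up scores and labels, where 1 means harmful and 0 means acceptable. The `precision_recall` helper is written here for illustration and is not taken from any specific library.

```python
def precision_recall(scores: list[float], labels: list[int],
                     threshold: float) -> tuple[float, float]:
    """Precision and recall when items with score >= threshold are flagged."""
    flagged = [s >= threshold for s in scores]
    tp = sum(1 for f, y in zip(flagged, labels) if f and y == 1)
    fp = sum(1 for f, y in zip(flagged, labels) if f and y == 0)
    fn = sum(1 for f, y in zip(flagged, labels) if not f and y == 1)
    # Convention used here: report 0.0 when a ratio is undefined.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Made-up model scores and ground-truth labels (1 = harmful).
scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
labels = [1, 1, 0, 1, 0, 0]

for threshold in (0.3, 0.5, 0.9):
    p, r = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold:.1f} precision={p:.2f} recall={r:.2f}")
```

On this toy data, a low threshold catches every harmful item but flags two acceptable ones, while a high threshold flags nothing by mistake but misses two of the three harmful items. That is the same tension a buyer faces when deciding how strict a platform's moderation should be.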

Outsourcing content moderation services can lower costs by using third-party expertise and infrastructure. However, cheaper options may cut corners on training, wellness programs, and technology. This can reduce the effectiveness of content moderation solutions. Outsourced teams may also need extra training to align with company values and policies. In-house experts must stay involved to maintain quality.

Key points to consider when balancing cost and quality:

Data balancing techniques also help improve quality without excessive costs:

Note: Effective pricing frameworks consider data usability, quality, customer needs, and market trends. Buyers should align pricing with both value and quality, not just the lowest cost.

No single solution fits every platform. Many organizations now use custom or hybrid approaches to meet their unique content moderation needs. Custom datasets allow platforms to target specific risks, languages, or cultural contexts. Hybrid approaches combine in-house data with third-party content moderation services, blending control with scalability.

Custom solutions often involve working closely with vendors to define labeling guidelines, select relevant content types, and ensure data diversity. This approach helps address emerging threats and new content formats. Hybrid models let organizations use external expertise while keeping sensitive or high-risk data in-house.

Benefits of custom and hybrid approaches include:

Organizations should regularly review and update their content moderation solutions to keep pace with new risks and technologies.

Regular updates keep content moderation systems effective as online threats evolve. Teams should set up monitoring loops where human moderators review samples of flagged content. Tracking agreement rates among moderators helps spot inconsistencies or fatigue. When agreement drops, retraining or policy updates may be needed. Monitoring model performance metrics like accuracy and coverage can reveal data drift. If drift appears, teams should sample new data, annotate it, and retrain the AI content moderation model. Transparency with moderators about these practices supports ethical standards. Balancing AI content moderation with human oversight ensures the content moderation process stays reliable. Diverse and well-partitioned datasets, such as training and test sets, help optimize performance and reduce business risks.
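
Agreement between moderators can be tracked with a raw agreement rate or with Cohen's kappa, which corrects for agreement expected by chance. The sketch below compares two moderators on the same sample of items. The labels and data are made up for illustration.

```python
from collections import Counter


def agreement_rate(a: list[str], b: list[str]) -> float:
    """Share of items on which two moderators chose the same label."""
    if len(a) != len(b) or not a:
        raise ValueError("label lists must be non-empty and the same length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Chance-corrected agreement: (p_o - p_e) / (1 - p_e)."""
    n = len(a)
    p_o = agreement_rate(a, b)
    count_a, count_b = Counter(a), Counter(b)
    # Expected agreement if both moderators labeled independently.
    p_e = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both moderators used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Made-up decisions from two moderators on the same eight items.
mod_a = ["remove", "keep", "keep", "remove", "keep", "keep", "remove", "keep"]
mod_b = ["remove", "keep", "remove", "remove", "keep", "keep", "keep", "keep"]

print(f"agreement={agreement_rate(mod_a, mod_b):.2f}")
print(f"kappa={cohens_kappa(mod_a, mod_b):.2f}")
```

Kappa is usually the more informative number when one label dominates, because two moderators who both pick "keep" almost every time will show high raw agreement even if they disagree on most of the harmful items.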

Best Practices for Data Updates:

Compliance monitoring protects platforms from legal and reputational harm. Many organizations use specialized tools to manage this part of content moderation. The table below highlights common compliance tools and their benefits:

| Compliance Tool / Feature | Description | Benefits |
|---|---|---|
| Notice and Action Management Tool | Handles reports of illegal or harmful content efficiently. | Faster moderation, legal compliance, transparency, risk reduction. |
| Checkstep DSA Plugin | Manages and documents notice and action processes. | Simplifies compliance, speeds response, ensures documentation. |
| Risk Assessment Tool | Evaluates risks in content moderation and user data protection. | Proactive risk management, user protection, trust building. |
| Checkstep Risk Assessment Solution | Uses AI analytics to identify and reduce risks. | Supports audit readiness, enhances safety and trust. |

Hybrid moderation, which combines AI content moderation with human review, helps balance efficiency and context. Automated and distributed moderation methods also support compliance but may need extra oversight.

Performance checks ensure that content moderation systems meet platform goals. Teams track metrics such as moderation accuracy rate, response time to flagged content, and cost per moderated item. The table below shows key metrics and industry targets:

| Metric Name | Description | Industry Benchmark / Target |
|---|---|---|
| Moderation Accuracy Rate | Percentage of correct moderation decisions. | |
| Response Time to Flagged Content | Time to address flagged content. | <60 minutes |
| Cost Per Moderated Item | Cost for each moderated content item. | Under $0.10 per item |
| Client Satisfaction Score | Measures satisfaction with moderation services. | Above 80% |
| Scalability Index | Ability to handle more content without losing quality. | Supports growth and efficiency |

Teams also monitor precision, recall, and escalation rates. Regular audits and feedback loops improve both AI content moderation and human decision-making. Balancing speed and accuracy remains critical for user safety and compliance.
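
The numeric targets from the table above can be turned into a simple automated check. The sketch below covers only the three rows that state a number (response time, cost per item, and client satisfaction). The metric keys and the `failed_targets` helper are hypothetical names.

```python
# Targets taken from the benchmark table; "max" means the measured value
# must stay below the limit, "min" means it must stay above it.
TARGETS = {
    "response_minutes": ("max", 60.0),
    "cost_per_item_usd": ("max", 0.10),
    "client_satisfaction_pct": ("min", 80.0),
}


def failed_targets(measured: dict[str, float]) -> list[str]:
    """Return the names of metrics that miss their target."""
    failures = []
    for name, (kind, limit) in TARGETS.items():
        value = measured[name]
        ok = value < limit if kind == "max" else value > limit
        if not ok:
            failures.append(name)
    return failures


# Made-up monthly figures for illustration.
this_month = {
    "response_minutes": 45,
    "cost_per_item_usd": 0.12,
    "client_satisfaction_pct": 85,
}
print(failed_targets(this_month))
```

Running a check like this on each reporting cycle gives a vendor review a fixed starting point, although the targets themselves should be adjusted to the platform's own risk profile.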

Successful content moderation starts with clear goals, diverse and well-labeled data, and a balanced approach that combines AI with human oversight. Leading platforms like Airbnb show that continuous improvement, strong policies, and moderator support build trust and safety. Recent trends highlight the need to adapt to new threats and content types using advanced tools and regular training.

Key steps for buyers:

High-quality data includes accurate labels, diverse content types, and clear documentation. It reflects real-world scenarios and covers different languages and cultures. Reliable data helps AI and human moderators make better decisions.

Companies should review and update training data regularly. Many experts recommend quarterly updates. Frequent updates help address new threats, trends, and changes in user behavior.

Public datasets offer a starting point. However, companies must check for relevance, diversity, and compliance with privacy laws. Custom or proprietary data often provides better results for specific platform needs.

Buyers face risks like data bias, privacy violations, and poor labeling.

Companies should vet vendors, request transparency, and perform regular audits to reduce these risks.
