
Choosing Content Moderation Training Data for Modern Needs

Social media platforms now handle massive volumes of user-generated content, including images, videos, and reviews.

AI content moderation depends on high-quality, diverse data to detect harmful material and reduce risk. Poor data selection can lead to compliance failures and loss of user trust. Effective content moderation requires strategic data choices that align with platform values.

Social media platforms now support a wide range of digital content. Over the past five years, the most common types include:

These new formats challenge AI content moderation systems, which must detect harmful material across text, video, and audio. User-generated content often spreads quickly, making real-time content moderation essential for safety and compliance with community guidelines.

Regulations now shape how social media companies approach content moderation. Laws in the UK, EU, and US set strict rules for removing harmful material and protecting users. The table below highlights key regulations:

| Regulation / Region | Key Requirements | Challenges | Trends |
|---|---|---|---|
| UK Online Safety Bill | Remove illegal content, verify age | Privacy vs. monitoring | More platform responsibility |
| EU Digital Services Act | Transparency, user appeals | Complex compliance | Focus on accountability |
| US Section 230 | Liability shield for moderation | Under review | Policy shifts expected |

Companies must update their AI content moderation to meet these standards. They must also audit how they handle user-generated content and adapt as laws change. Global platforms face extra challenges because rules differ by country.

Users expect social media platforms to enforce community guidelines fairly and transparently. Most users want to understand the rules and have access to appeals. Studies show that 78% of Americans want unbiased content moderation, and 77% want transparency about government involvement. Only 19% trust platforms to moderate fairly. When users see unfair moderation, they may leave or lose trust in the platform. Platforms that explain their community guidelines and offer clear appeals build stronger, safer communities around user-generated content.

Social media platforms face enormous content moderation challenges due to the sheer volume and diversity of user posts. Every minute, users upload millions of images, videos, and comments. Leading companies use a mix of AI-powered moderation, human review teams, and community reporting to manage this scale. AI systems scan content in real time to detect hate speech, violence, and policy violations. Human moderators step in for complex cases, providing context and cultural understanding. Community reporting lets users flag harmful material, but this system can be misused.

Note: Universal community guidelines help platforms moderate content globally, but these rules sometimes erase cultural differences. For example, Facebook once deleted a harm reduction group that shared drug safety advice, removing important information for some communities.

Hybrid moderation approaches combine AI filtering with human review, creating a feedback loop that improves accuracy. However, platforms still struggle with contextual ambiguity, emerging technologies like deepfakes, and the mental health impact on moderators. Social media companies also gather user feedback to shape moderation policies, but balancing diverse opinions remains difficult. Cross-cultural moderation adds another layer of complexity, as content that is acceptable in one culture may be offensive in another.
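A common way to split work between AI filtering and human review is confidence-based routing: the model acts on its own only when it is very sure, and everything in between goes to a moderator whose verdict is saved as a new label. The sketch below assumes a classifier that outputs a probability of harm; the two thresholds, the `route` and `record_review` names, and the action strings are hypothetical choices for illustration, not any specific platform's setup.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration; real values would be tuned per policy
# on labeled validation data.
REMOVE_THRESHOLD = 0.95
ALLOW_THRESHOLD = 0.20


@dataclass
class Decision:
    item_id: str
    action: str  # "remove", "allow", or "human_review"
    harm_score: float


def route(item_id: str, harm_score: float) -> Decision:
    """Route one item using a classifier's estimated probability that it is harmful."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be a probability in [0, 1]")
    if harm_score >= REMOVE_THRESHOLD:
        action = "remove"        # confident violation: act automatically
    elif harm_score <= ALLOW_THRESHOLD:
        action = "allow"         # confident the item is benign
    else:
        action = "human_review"  # ambiguous: a moderator supplies context
    return Decision(item_id, action, harm_score)


# Moderator verdicts on routed items are kept as new labeled examples,
# which is the feedback loop that improves the model on the next training run.
reviewed_labels: list[tuple[str, str]] = []


def record_review(decision: Decision, moderator_label: str) -> None:
    if decision.action != "human_review":
        raise ValueError("only items routed to human review are recorded here")
    reviewed_labels.append((decision.item_id, moderator_label))


if __name__ == "__main__":
    for item_id, score in [("post-1", 0.98), ("post-2", 0.05), ("post-3", 0.60)]:
        print(item_id, route(item_id, score).action)
```

Widening the gap between the two thresholds sends more items to humans (higher cost, fewer automated mistakes); narrowing it does the opposite.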

Each social media platform faces unique content risk factors. Algorithmic moderation can introduce bias, lack transparency, and lead to disproportionate censorship of certain groups. For example, automated systems have sometimes removed posts from Arabic speakers or activists, raising concerns about safety and freedom of expression. Regulatory pressures push platforms to automate moderation, but this can shift accountability and cause collateral damage to marginalized communities.

Emerging risks include private messaging and AI-generated content, which raise new questions about surveillance and fairness. Platforms must balance safety with freedom of expression, ensuring that moderation practices do not silence important voices or damage trust.

Social media platforms serve global audiences with different languages, cultures, and viewpoints. To moderate content effectively, companies need training data that reflects this diversity. Diverse datasets help content moderation systems recognize a wide range of abuse types and policy violations. They also reduce the risk of missing harmful material that appears in less common formats or languages.

Note: Social media companies that use diverse data can adapt to new trends and evolving community guidelines faster. This approach also supports inclusivity, helping platforms serve users from different backgrounds.

High-quality annotation forms the backbone of reliable content moderation. Social media companies must ensure that data labeling is consistent, accurate, and aligned with community guidelines. Annotation quality depends on clear instructions, expert oversight, and continuous improvement.

Social media platforms that invest in annotation quality see measurable improvements. For example, clear sarcasm detection protocols increased sentiment analysis accuracy from 76% to 89%. Three-tier review systems reduced annotation errors from 12% to 2.3%.
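The article does not say how annotation consistency is measured. One common option is Cohen's kappa, which corrects the raw agreement between two annotators for the agreement they would reach by chance. The sketch below assumes two annotators labeled the same items; the label names and sample data are hypothetical. A team might compare kappa before and after introducing tiered review or clearer guidelines.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Chance-corrected agreement between two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Fraction of items where the two annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, given each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1.0 - expected)


annotator_1 = ["hate", "ok", "ok", "spam", "ok", "hate"]
annotator_2 = ["hate", "ok", "spam", "spam", "ok", "ok"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.478
```

Here the annotators agree on 4 of 6 items (about 67%), but after discounting chance agreement the kappa is only about 0.48, which is the kind of gap that clearer instructions and expert review aim to close.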

Bias in content moderation can harm user trust and lead to unfair enforcement of community guidelines. Social media companies must address both historical and emerging biases in their training data. Unchecked bias can result in discrimination against underrepresented groups or over-censorship of certain communities.

Tip: Combining human judgment with AI allows social media platforms to handle complex cases while reducing bias. Regular audits and updates keep moderation practices fair and current.
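One concrete form of the regular audit mentioned in the tip is comparing error rates across user groups. The sketch below computes the false positive rate (benign posts wrongly flagged) per group, such as the language a post is written in, and lists groups that are flagged disproportionately. The group labels, the sample records, and the 1.25 ratio threshold are hypothetical choices for illustration, not an established standard.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, predicted_violation, actual_violation) tuples.

    Returns, per group, the share of benign items that were wrongly flagged.
    """
    wrongly_flagged = defaultdict(int)
    benign = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:
            benign[group] += 1
            if predicted:
                wrongly_flagged[group] += 1
    return {group: wrongly_flagged[group] / benign[group] for group in benign}


def flag_disparities(rates, max_ratio=1.25):
    """List groups whose false positive rate exceeds the lowest group's by more than max_ratio."""
    baseline = min(rates.values())
    if baseline == 0:
        return sorted(g for g, r in rates.items() if r > 0)
    return sorted(g for g, r in rates.items() if r / baseline > max_ratio)


audit_sample = [
    ("en", True, False), ("en", False, False), ("en", False, False), ("en", False, False),
    ("ar", True, False), ("ar", True, False), ("ar", False, False), ("ar", False, False),
]
rates = false_positive_rate_by_group(audit_sample)
print(rates)                    # {'en': 0.25, 'ar': 0.5}
print(flag_disparities(rates))  # ['ar']
```

A flagged group is a prompt for human investigation (for example, missing training examples in that language), not proof of bias on its own.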

Privacy compliance stands as a critical requirement for content moderation on social media. Companies must protect user data and follow regulations such as GDPR. Failure to comply can lead to legal penalties and loss of user trust.

Note: Social media platforms that prioritize privacy compliance not only avoid legal risks but also strengthen user trust. Clear communication about data use and protection reassures users and supports long-term engagement.

Social media companies face a critical decision when choosing between outsourcing content moderation and building in-house teams. Each approach offers unique benefits and challenges. The table below compares key aspects:

| Aspect | Outsourcing Advantages | Outsourcing Disadvantages | In-House Advantages | In-House Disadvantages |
|---|---|---|---|---|
| Productivity | Handles large volumes efficiently | Needs oversight for partner performance | Direct process control | May lack scalability |
| Adaptability | Adapts quickly to new technologies | Depends on external infrastructure | Aligns with brand values | Slower tech adoption |
| Expertise | Access to specialized knowledge | Complex governance, quality control needed | Deep brand understanding | High training costs |
| User Security | Improves compliance and user trust | Data security risks if not managed | Full data security control | Risk of compliance gaps |
| Cost Efficiency | Reduces costs through specialized vendors | Setup and transition costs | Avoids outsourcing fees | Higher operational costs |
| Quality Control | Audits and feedback from providers | Less direct management | Direct quality control | Consistency challenges at scale |
| Scalability | Scales resources quickly | Needs strong governance | Consistent standards | Scalability limits |

Outsourcing gives social media platforms access to professional moderation services, advanced technology, and cost savings. However, it introduces data security concerns and requires strong oversight. In-house teams offer direct control and alignment with brand values but may struggle with scalability and higher costs. Many social media companies assign a subject matter expert to coordinate with outsourcing partners, ensuring quality and smooth transitions.

Tip: Social media platforms should consider hidden costs, technology dependencies, and the need for redundancy when outsourcing, especially in regions prone to operational disruptions.

Social media platforms must tailor content moderation strategies to fit their unique community guidelines and evolving user behaviors. Platforms often update policies in response to new trends or societal events, such as viral challenges or global health crises. They use layered policies, combining broad public rules with detailed internal guidelines to help moderators make consistent decisions.

Some social media platforms decentralize moderation, allowing individual communities to set additional rules. This approach supports diverse user needs and strengthens trust. Case studies show that platforms like Frog Social and theAsianparent improve accuracy and reduce manual workload by customizing AI-powered moderation to their unique requirements.

Note: Social media platforms that invest in clear guidelines, regular training, and transparent communication build safer, more inclusive online spaces.

Data labeling platforms play a vital role in building effective content moderation tools. These platforms help companies prepare high-quality data for AI content moderation systems. Leading platforms stand out by offering features that support the needs of modern social media environments.

These features help companies choose the right moderation tools for their unique needs.

Modern content moderation relies on the close integration of artificial intelligence and machine learning. Social media platforms use these technologies to process large volumes of user-generated content: AI-powered systems scan millions of posts, images, and videos every day and identify harmful material quickly and accurately.

AI content moderation tools reduce manual tagging time by up to 75% in news organizations. E-commerce sites report a 30% improvement in customer satisfaction scores through AI-powered sentiment analysis. These moderation tools improve efficiency, accuracy, scalability, and consistency. Human moderators can then focus on complex or sensitive cases, while AI handles routine tasks.

Note: Companies that invest in AI content moderation see faster response times and better user experiences.

Many organizations turn to commercial vendors for sourcing training data for content moderation. These vendors offer professional annotation services, providing image, video, and text labeling tailored to specific needs. Their teams include experts who understand cultural differences and regulatory requirements. Vendors often use a mix of AI tools and human moderators, which improves accuracy and efficiency. They also provide 24/7 monitoring and ensure compliance with global content laws. However, using vendors can introduce risks. Companies may lose some control over moderation processes. Communication barriers and cultural misunderstandings can affect results. Quality assurance requires regular audits, and data security must meet strict standards like GDPR and CCPA.

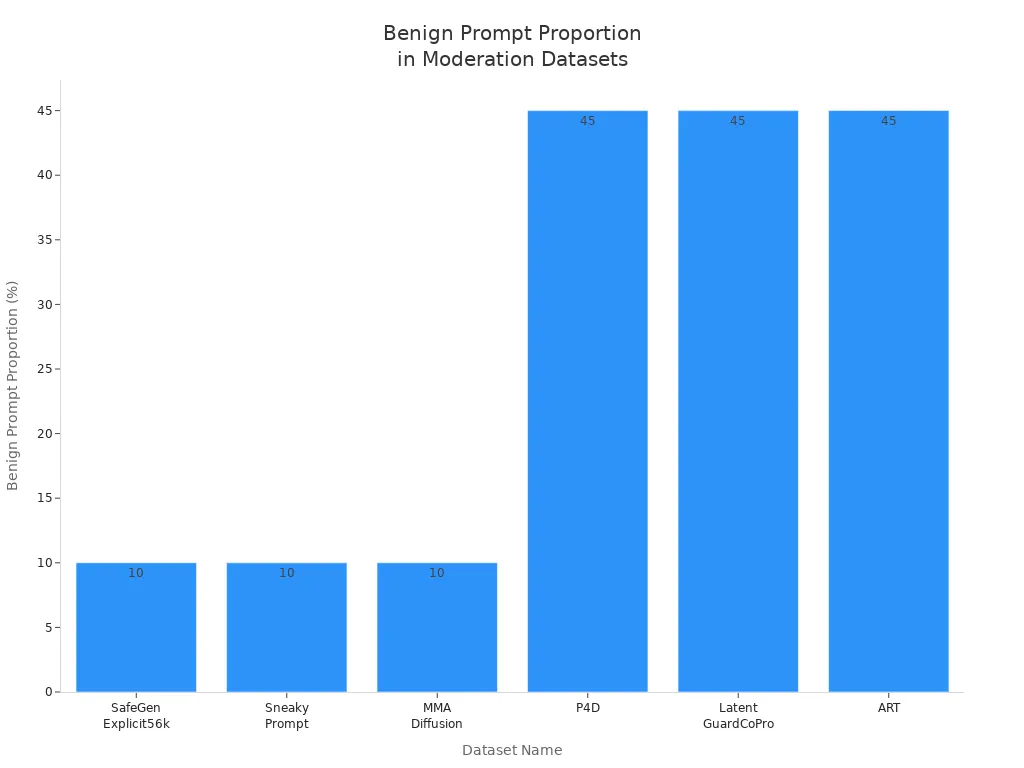

Open datasets give organizations access to large collections of real-world content for training moderation systems. These datasets vary in focus, balance, and diversity. Some focus heavily on sexual content, while others offer a better mix of harmful and benign material. The table below compares several widely used open datasets:

| Dataset Name | Harm Coverage Focus | Benign Prompt Proportion | Coverage Balance | Quality / Diversity Notes |
|---|---|---|---|---|
| SafeGenExplicit56k | Sexual content (~49%) | <10% | Poor | Limited diversity, skewed towards sexual content |
| SneakyPrompt | Sexual content | <10% | Poor | Limited diversity, skewed towards sexual content |
| MMA Diffusion | Sexual content | <10% | Poor | High syntactic diversity, adversarial prompt generation |
| P4D | Balanced harm coverage | >45% | Best | Some redundancy, wider n-gram diversity |
| LatentGuardCoPro | Balanced harm coverage | >45% | Best | Some redundancy, focus on unsafe input prompts |
| ART | Balanced harm coverage | >45% | Best | Some redundancy, wider n-gram distribution |

Most open datasets lack diversity in harm categories like discrimination or misinformation. Many also have inconsistent labeling and limited documentation. Organizations should select datasets with broad harm coverage and high-quality annotations to improve moderation outcomes.
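Before adopting an open dataset, a team can check the same properties the table compares: how much of the data is benign, whether one harm category dominates, and which policy categories are missing entirely. The sketch below assumes each example carries a single category label; the label names and the list of expected harm categories are hypothetical.

```python
from collections import Counter


def audit_dataset(labels, expected_harms, benign_label="benign"):
    """Summarize category balance for a labeled moderation dataset.

    labels: one category label per example; expected_harms: harm categories the
    platform's policy needs covered.
    """
    if not labels:
        raise ValueError("dataset is empty")
    counts = Counter(labels)
    harmful = {c: n for c, n in counts.items() if c != benign_label}
    harmful_total = sum(harmful.values())
    return {
        "benign_proportion": counts[benign_label] / len(labels),
        # Share of each harm category among harmful examples only.
        "harm_shares": {c: n / harmful_total for c, n in harmful.items()},
        # Policy categories with no examples at all.
        "missing_harms": sorted(set(expected_harms) - set(harmful)),
    }


labels = ["sexual"] * 5 + ["violence"] * 2 + ["hate"] + ["benign"] * 2
report = audit_dataset(labels, ["sexual", "violence", "hate", "misinformation"])
print(report["benign_proportion"])  # 0.2
print(report["harm_shares"])        # {'sexual': 0.625, 'violence': 0.25, 'hate': 0.125}
print(report["missing_harms"])      # ['misinformation']
```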

Some companies choose to collect their own training data. This approach allows them to tailor datasets to their platform’s unique needs. Best practices for custom collection include:

Synthetic data, created by generative AI, can help fill gaps and reduce bias. However, teams must evaluate synthetic data carefully to avoid issues like lack of real-world variability. Combining ethically sourced real-world and synthetic data creates a strong foundation for content moderation systems.

Transparency stands as a key factor when selecting a content moderation data provider. Providers must show clear processes, reliable quality, and strong data security. Leading vendors often hold certifications that prove their commitment to transparency and trustworthiness. These certifications and practices help platforms verify a provider’s standards:

A transparent provider shares information about annotation guidelines, review processes, and error rates. They also explain how they handle edge cases and update their models. Platforms should look for vendors who communicate openly and provide clear documentation. This approach helps platforms maintain compliance and build user trust.

Ongoing support and regular updates keep content moderation systems effective. Leading providers combine human expertise with machine learning. Human annotators label content based on evolving guidelines. This process creates annotated datasets that train and refine AI models over time.

Providers use feedback loops to improve accuracy. They address challenges such as annotator disagreement and changes in moderation rules. Some vendors use large language models (LLMs) and a policy-as-prompt framework. This method allows dynamic updates to moderation policies without retraining the entire model. It gives platforms more flexibility and faster adaptation to new risks.
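The policy-as-prompt idea can be sketched without committing to a specific vendor or model. Here the written policy lives in a plain dictionary that is rendered into the prompt on every call, so editing a rule changes behavior immediately. The `llm` argument is any function that takes a prompt string and returns text; the policy wording, labels, and prompt template are hypothetical, and sending unparseable answers to human review is a design choice of this sketch rather than part of any particular framework.

```python
from typing import Callable


def build_prompt(policy: dict[str, str], content: str) -> str:
    """Render the current written policy and one item into a classification prompt."""
    rules = "\n".join(f"- {label}: {definition}" for label, definition in policy.items())
    allowed = ", ".join([*policy, "none"])
    return (
        "You are a content moderator. Apply these rules:\n"
        f"{rules}\n"
        f"Answer with exactly one label from: {allowed}.\n"
        f"Content: {content}\n"
        "Label:"
    )


def classify(policy: dict[str, str], content: str, llm: Callable[[str], str]) -> str:
    """Ask a text-in/text-out model for a label; unexpected answers go to human review."""
    answer = llm(build_prompt(policy, content)).strip().lower()
    return answer if answer in {*policy, "none"} else "human_review"


policy = {
    "spam": "Unsolicited commercial promotion or repetitive links.",
    "harassment": "Targeted insults or threats against a person.",
}
# Updating the policy is a data change, not a retraining run.
policy["scam"] = "Requests for payment or credentials under false pretenses."


def fake_llm(prompt: str) -> str:
    return " Scam\n"  # stand-in for a real model call


print(classify(policy, "Send me your bank PIN to claim a prize", fake_llm))  # scam
```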

Providers also operationalize policies into clear protocols. They create detailed annotation guidelines, train human moderators, and set model thresholds. Regular updates to these guidelines help address new content types and edge cases. Technology enables scalable and adaptive moderation systems that respond to changing societal norms and platform needs.

Tip: Platforms should choose providers who offer responsive support, regular updates, and clear communication channels. This partnership ensures that moderation systems stay current and effective.

Cost plays a major role in selecting a content moderation data provider. Providers use different pricing models based on technology, compliance, training, and customization. Each cost component affects the long-term budget of a platform. The table below outlines typical cost structures and their impact:

| Cost Component | Typical Cost Range / Impact | Impact on Long-Term Platform Budgets |
|---|---|---|
| Technology Upgrades & AI Training | Ongoing investment | Increases fixed costs over time |
| Compliance and Legal Fees | Up to 15% of revenues | Recurring expense affects profitability |
| Employee Training & Turnover | Training costs increase by ~20% with staff changes | Higher operational costs and need for continuous workforce development |
| Client-Specific Customization | Adds 5-10% to operational costs | Customization increases expenses but can improve retention and revenue |
| Hybrid Moderation Models | Profit margins typically 15-25% | Combining AI and human oversight optimizes costs and improves margins |
| Operational Efficiencies | Cost reductions of 15-20% via automation and remote work | Directly lowers labor and overhead expenses, benefiting budgets |
| Profitability Improvements | Potential increase of 60-110% | Effective cost management and scaling can improve financial health |

Platforms should evaluate both upfront and recurring costs. Technology upgrades and compliance fees require ongoing investment. Employee training and turnover can raise operational expenses. Customization adds value but increases costs. Automation and hybrid models can reduce expenses and improve margins. Providers who manage costs well help platforms achieve long-term financial health.
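The table gives percentages rather than a formula, but the main lever in a hybrid model is simple arithmetic: the share of items resolved automatically determines how many reach a paid human review. The sketch below assumes a flat per-item cost for each path; every input value is hypothetical, and it ignores the fixed costs (compliance, training, customization) listed in the table.

```python
def monthly_review_cost(items: int, auto_rate: float,
                        human_cost_per_item: float, ai_cost_per_item: float) -> float:
    """Estimate monthly cost when a fraction auto_rate of items is resolved without a human."""
    if not 0.0 <= auto_rate <= 1.0:
        raise ValueError("auto_rate must be between 0 and 1")
    human_items = items * (1.0 - auto_rate)
    # Every item passes through the model; only the remainder reaches a moderator.
    return human_items * human_cost_per_item + items * ai_cost_per_item


# Hypothetical inputs: 1M items per month, $0.05 per human review, $0.001 per model call.
all_human = monthly_review_cost(1_000_000, auto_rate=0.0, human_cost_per_item=0.05, ai_cost_per_item=0.0)
hybrid = monthly_review_cost(1_000_000, auto_rate=0.8, human_cost_per_item=0.05, ai_cost_per_item=0.001)
print(round(all_human), round(hybrid))  # 50000 11000
```

Under these made-up inputs, automating 80% of decisions cuts the variable cost by roughly 78% rather than 80%, because the model itself still costs something per item.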

Note: A thorough cost analysis helps platforms avoid hidden expenses and choose providers who deliver value and scalability.

Pilot testing helps organizations validate new content moderation practices before full deployment. Teams follow a structured approach to reduce risks and ensure readiness:

1. Define the purpose, goals, and scope of the pilot project.
2. Establish success criteria with input from all stakeholders.
3. Outline the benefits of the pilot and the risks of skipping this step.
4. Design the pilot's scope and duration, selecting participants and data samples.
5. Prepare participants with training and pre-pilot testing.
6. Conduct the pilot, testing technical performance and integration.
7. Monitor and document issues, including user feedback and workflow impacts.
8. Assess hardware, software, and user acceptance.
9. Evaluate results against success criteria to validate requirements.
10. Use lessons learned to adjust training and workflows before scaling up.
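Steps 2 and 9 work best when success criteria are written down as explicit thresholds agreed before the pilot starts. The sketch below checks measured pilot results against such thresholds; the `Criterion` type, the metric names, and the target values are hypothetical examples, not recommended levels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    metric: str
    threshold: float
    higher_is_better: bool = True


def evaluate_pilot(results: dict[str, float], criteria: list[Criterion]) -> dict[str, bool]:
    """Pass/fail per agreed criterion; a metric the pilot did not record counts as a fail."""
    outcome = {}
    for c in criteria:
        value = results.get(c.metric)
        if value is None:
            outcome[c.metric] = False
        elif c.higher_is_better:
            outcome[c.metric] = value >= c.threshold
        else:
            outcome[c.metric] = value <= c.threshold
    return outcome


criteria = [
    Criterion("precision", 0.90),
    Criterion("recall", 0.85),
    Criterion("median_review_minutes", 2.0, higher_is_better=False),
]
pilot_results = {"precision": 0.93, "recall": 0.81, "median_review_minutes": 1.5}
outcome = evaluate_pilot(pilot_results, criteria)
print(outcome)                # {'precision': True, 'recall': False, 'median_review_minutes': True}
print(all(outcome.values()))  # False: recall misses its target, so adjust before scaling up
```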

This process ensures that content moderation practices meet platform needs and adapt to real-world challenges.

Continuous evaluation keeps content moderation practices effective over time. Organizations audit human moderation decisions by sampling and reviewing actions. They measure false positives and false negatives to spot errors. For automated tools, teams calculate precision and recall by comparing AI results to a ground truth dataset. Monitoring violation rates, user reports, and customer satisfaction helps track performance. Root cause analysis addresses gaps between human and automated moderation. Automated dashboards provide real-time tracking, supporting ongoing improvement. Collaboration with clients clarifies community guidelines and makes enforcement more precise.
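The precision and recall check described above can be sketched in a few lines. The function assumes binary labels (violating or not) for an audit sample whose ground truth comes from expert review; the sample values are made up. Precision asks how much of what the model flagged should have been flagged, and recall asks how much of what should have been flagged the model actually caught.

```python
def moderation_metrics(predicted: list[bool], actual: list[bool]) -> dict[str, float]:
    """Compare automated flags with ground-truth labels (True = violates policy)."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    tp = sum(p and a for p, a in zip(predicted, actual))        # correctly flagged
    fp = sum(p and not a for p, a in zip(predicted, actual))    # benign but flagged
    fn = sum(a and not p for p, a in zip(predicted, actual))    # violating but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"false_positives": fp, "false_negatives": fn,
            "precision": precision, "recall": recall}


model_flags = [True, True, False, True, False, True]
ground_truth = [True, False, True, True, False, False]
print(moderation_metrics(model_flags, ground_truth))
# {'false_positives': 2, 'false_negatives': 1, 'precision': 0.5, 'recall': 0.6666666666666666}
```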

Feedback loops play a vital role in improving content moderation practices. Users and moderators report mistakes, which helps refine AI models and reduce errors. Human feedback, such as labels and corrections, supports fairness and accuracy. Quality control teams use feedback to identify and fix moderator mistakes quickly. This process realigns moderators with updated policies and prevents repeat errors. Data from feedback loops supports root cause analysis and policy updates. These steps ensure that training data and moderation processes evolve to meet changing needs.
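A feedback loop needs somewhere for corrections to land. In the sketch below, each time a moderator or appeal reviewer overrides the model, the event is logged; the summary gives an override rate per policy (a starting point for root cause analysis) and a list of corrected labels to fold back into the training data. The event fields and policy names are hypothetical.

```python
from collections import Counter


def summarize_feedback(events):
    """events: dicts with 'item_id', 'policy', 'model_label', and 'human_label' keys.

    Returns the override rate per policy and the corrected examples for retraining.
    """
    totals, overrides = Counter(), Counter()
    corrections = []
    for event in events:
        totals[event["policy"]] += 1
        if event["model_label"] != event["human_label"]:
            overrides[event["policy"]] += 1
            # The moderator's decision becomes the corrected training label.
            corrections.append((event["item_id"], event["human_label"]))
    override_rates = {policy: overrides[policy] / totals[policy] for policy in totals}
    return override_rates, corrections


events = [
    {"item_id": "1", "policy": "hate", "model_label": "remove", "human_label": "allow"},
    {"item_id": "2", "policy": "hate", "model_label": "remove", "human_label": "remove"},
    {"item_id": "3", "policy": "spam", "model_label": "allow", "human_label": "allow"},
]
rates, corrections = summarize_feedback(events)
print(rates)        # {'hate': 0.5, 'spam': 0.0}
print(corrections)  # [('1', 'allow')]
```

A policy whose override rate climbs after a guideline change is a natural candidate for clearer annotation instructions or retraining.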

Selecting and integrating content moderation training data involves several key steps:

Regularly reviewing data strategies and vendor partnerships ensures platforms adapt to evolving threats and regulations. Investing in high-quality data and robust moderation practices leads to safer communities, improved trust, and long-term platform success.

High-quality training data includes accurate labels, diverse examples, and clear documentation. Teams check data for errors and update it often. Reliable data helps AI models spot harmful content and follow platform rules.

Teams review datasets for fairness and include many cultures and languages. They use audits and feedback to find and fix bias. Regular updates keep the data current and balanced.

AI handles large volumes quickly. Human moderators review complex or sensitive cases. This combination improves accuracy and fairness. It also helps platforms adapt to new risks.

Companies remove personal details from data. They follow laws like GDPR and use secure storage. Regular audits and clear privacy policies protect user information and build trust.
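Removing personal details can start with pattern-based redaction before data reaches annotators. The sketch below replaces email addresses, phone-number-like strings, and @handles with placeholder tags. The patterns are illustrative assumptions; on their own they are not sufficient for GDPR compliance, which also covers data such as names and addresses that regular expressions handle poorly.

```python
import re

# Deliberately simple patterns for illustration. Real PII detection needs broader
# coverage (names, addresses, IDs), and these patterns can over-match: a date such
# as 2024-01-15 also looks like a phone number to PHONE.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
    "HANDLE": re.compile(r"(?<!\w)@\w{2,}"),
}


def redact(text: str) -> str:
    """Replace matches with a placeholder tag. EMAIL runs before HANDLE so that the
    '@domain' part of an address is not mistaken for a user handle."""
    for tag, pattern in PATTERNS.items():
        text = pattern.sub(f"[{tag}]", text)
    return text


print(redact("Contact jane.doe@example.com or +1 (555) 123-4567, or ping @jane_d"))
# Contact [EMAIL] or [PHONE], or ping [HANDLE]
```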
