
The Ultimate Image Moderation Guide for Platforms

Digital platforms face growing challenges as unmoderated images can put users and brands at risk. Common threats include:

Image moderation is a critical defense for trust and safety. Platform leaders must ask how to select and implement the image moderation solution that best protects their communities.

Image moderation is the process of reviewing, analyzing, and filtering every image uploaded to a digital platform. It ensures that each visual aligns with platform guidelines, community standards, and legal requirements. The main goal is to identify and handle inappropriate, offensive, or harmful visual content. Platforms use several methods to achieve effective content moderation:

1. Human Moderation: Human moderators manually review each image. They interpret context and intent, looking for violence, nudity, hate speech, and other inappropriate visual elements.
2. AI Image Moderation: Automated systems use computer vision and machine learning to scan and categorize images. These systems detect objectionable visual content such as nudity, violence, or hate symbols.
3. Hybrid Approach: Platforms often combine AI and human review. AI filters obvious violations, while humans handle complex or culturally sensitive visual cases.

Image moderation aims to protect users, uphold brand reputation, and foster positive user experiences. However, challenges include scalability, accuracy, cultural sensitivity, and bias. Platforms must balance speed and accuracy to maintain effective content moderation for all visual uploads.

Image moderation plays a vital role in content moderation strategies for any online community. The reasons for its importance include:

| Primary Reason | Explanation |
|---|---|
| Protecting Users | Prevents exposure to graphic or harmful images, especially protecting minors and sensitive users. |
| Mitigating Cyberbullying | Identifies and removes images used for harassment or bullying, fostering a respectful environment. |
| Maintaining Community Standards | Enforces platform policies by detecting and removing violating images; prevents spread of misleading or false information. |
| Protecting Brand Reputation | Blocks harmful images that could damage brand image, thereby preserving user trust and confidence. |
| Complying with Laws and Regulations | Ensures adherence to legal requirements, avoiding fines and penalties; protects intellectual property rights by detecting infringements. |
| Promoting Positive User Experience | Creates a safe and welcoming atmosphere, encouraging user participation, retention, and engagement. |

Platforms that invest in robust image moderation build safer, more trustworthy digital spaces. Effective content moderation of visual material not only protects users but also strengthens brand reputation and legal compliance. Every image matters in shaping the overall user experience and the integrity of the platform.

Platforms face a wide range of harmful content in user-uploaded images. These risks threaten user safety and platform integrity. Common types of harmful content include:

Platforms use AI and human moderators to detect and remove these images. However, challenges remain in defining and identifying harmful content, especially across different cultures and languages.

Content moderation failures can lead to serious legal consequences. Courts have started to scrutinize platform product design and algorithmic curation, and in some cases have ruled that these features are not protected by Section 230 or the First Amendment. For example, in Lemmon v. Snap (2021), the Ninth Circuit held that Section 230 did not shield Snap from a negligent-design claim over Snapchat's Speed Filter feature, exposing the platform to potential liability. Nearly 200 cases have alleged product defects related to content moderation failures, and some of these lawsuits have survived dismissal attempts.

Governments worldwide are strengthening digital laws targeting harmful content. Platforms that do not moderate images effectively risk regulatory scrutiny, hefty fines, and public backlash. Litigation costs from user claims can add financial strain and divert resources from product development. Investing in robust content moderation helps platforms avoid costly legal repercussions.

Unmoderated images can quickly damage brand reputation and erode user trust. Negative or spammy images reduce perceived legitimacy, causing users to lose trust. Studies show that 94% of consumers avoid businesses after seeing negative reviews. Harmful content can drive users away, with 76% less likely to engage after a negative interaction. Buyers often check images and reviews before purchasing, so harmful content can directly affect sales.

| Negative Impact | Explanation |
|---|---|
| Negative Brand Perception | Unmoderated images can create a harmful impression by allowing inappropriate or offensive content, damaging brand reputation and eroding trust. |
| Legal Liability | Brands risk lawsuits or fines if unmoderated content violates laws (e.g., copyright infringement, defamation). |
| Security Concerns | Unmoderated content can harbor malware or phishing attacks, endangering users and harming brand credibility. |
| Loss of Revenue | Offensive or inappropriate images can drive customers away, directly reducing revenue. |
| Brand Consistency | Lack of moderation disrupts brand voice and messaging, weakening user confidence and brand integrity. |

Effective content moderation protects both users and brands. Platforms that invest in strong image moderation build trust, reduce legal risk, and maintain a positive brand image.

AI-powered content moderation tools have transformed how platforms manage visual content. These tools use visual AI and machine learning to scan every image for harmful or inappropriate material. Advanced visual AI now supports multimodal moderation, combining text, video, audio, and image analysis for better accuracy. For example, YouTube uses multimodal AI to scan videos, analyze speech, and check metadata, making content moderation more effective. Visual AI can process large volumes of images in near real time, making automated content moderation fast and scalable. Automated background removal is another key feature, helping platforms filter out unwanted visual elements. However, visual AI sometimes struggles with complex context or new trends. Generative AI and automated background removal can improve detection, but risks like hallucinations and technical complexity remain.

Human moderators play a vital role in content moderation. They review images that automated content moderation tools flag as uncertain. Humans excel at understanding visual context, cultural differences, and subtle cues in visual content. They can spot coded messages or evolving slang that visual AI might miss. However, human review is slower and more expensive than automated content moderation. It is hard to scale human teams for platforms with millions of visual uploads. Reviewing disturbing visual content can also affect mental health. The table below compares strengths:

| Factor | AI Moderation | Human Moderation |
|---|---|---|
| Speed | Processes content in milliseconds, real-time | Slower, limited capacity |
| Scalability | Handles massive volumes, easily scalable | Difficult to scale quickly |
| Consistency | Applies rules uniformly, consistent decisions | Decisions may vary between moderators |
| Cost | Cost-effective, reduces need for many humans | More expensive due to labor costs |
| Context | Limited understanding of nuance and sarcasm | Excels at understanding context, nuance, culture |
| Mental Health | No psychological impact | Risk of mental health issues |

Hybrid models combine the strengths of visual AI and human review for the best results in image moderation. Automated content moderation tools first scan visual uploads, using visual AI to flag clear violations and perform automated background removal. Images that need deeper review go to human moderators, who apply cultural and ethical judgment. This approach improves accuracy and reduces errors. Human feedback helps train visual AI, making automated content moderation smarter over time. Hybrid models handle large volumes of visual content while ensuring that complex or sensitive images get the attention they need. Automated background removal and visual AI work together with human insight to create a safer, more reliable moderation process.
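The hybrid flow described above can be sketched as a simple routing rule. This is a minimal illustration, not any vendor's implementation: it assumes the AI model returns a single violation score between 0 and 1, and the names `Decision`, `Thresholds`, and `route`, along with the cut-off values, are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical cut-offs; tune them against labeled data for your platform.
    reject_at: float = 0.90  # at or above: treat as a clear violation
    review_at: float = 0.40  # at or above, but below reject_at: send to a human


def route(violation_score: float, thresholds: Thresholds = Thresholds()) -> Decision:
    """Route one image based on the model's violation score in [0, 1]."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= thresholds.reject_at:
        return Decision.REJECT
    if violation_score >= thresholds.review_at:
        return Decision.HUMAN_REVIEW
    return Decision.APPROVE


if __name__ == "__main__":
    for score in (0.05, 0.55, 0.97):
        print(score, route(score).value)
```

Widening the gap between the two thresholds sends more images to human moderators, which tends to improve accuracy at the cost of review time. Narrowing it does the opposite.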

Selecting the right content moderation product starts with a critical decision: build an in-house solution or buy a third-party platform. Each approach offers unique advantages and challenges.

Key factors to consider:

Clear, platform-specific moderation guidelines and a combination of AI technology with human moderators improve accuracy and accountability. Transparency in moderation policies builds user trust and supports consistent enforcement.

Building an image moderation product demands a dedicated technical team, ongoing maintenance, and regular updates. This approach suits platforms with unique requirements that off-the-shelf solutions cannot meet. In contrast, buying a solution like DeepCleer provides ready-to-use AI-powered tools, reducing deployment time and eliminating the need for specialized in-house resources. Most businesses find purchasing an established product more cost-effective and efficient.

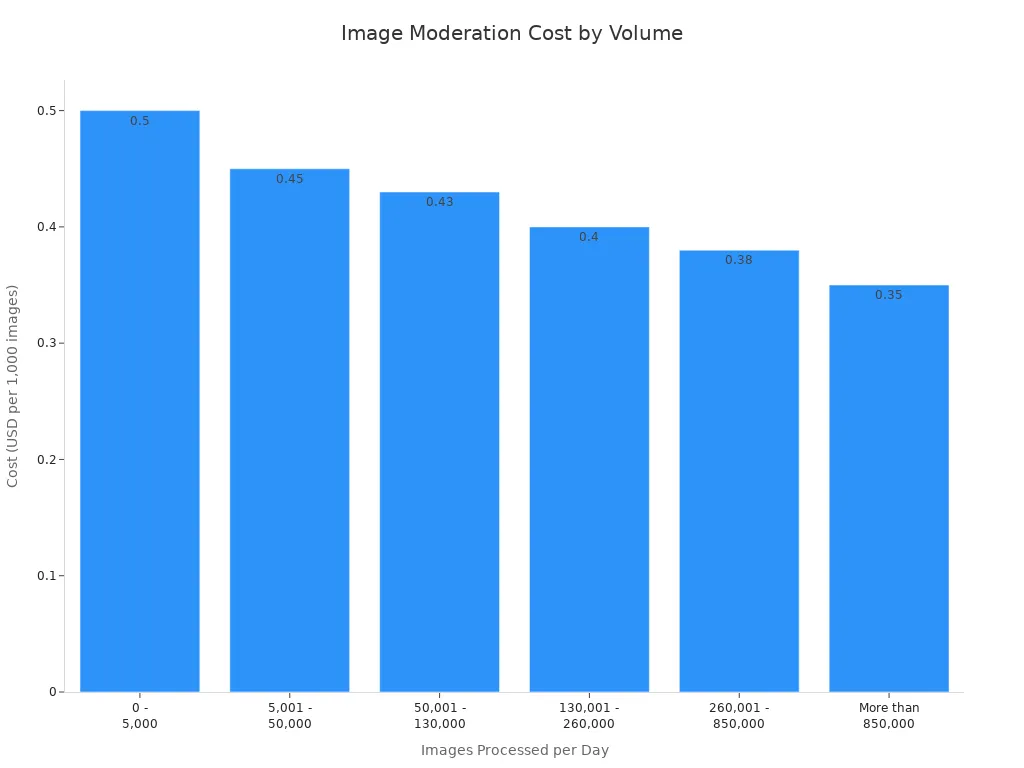

| Volume of Images Processed per Day | Approximate Cost (USD per 1,000 images) |
|---|---|
| 0 - 5,000 | $0.50 |
| 5,001 - 50,000 | $0.45 |
| 50,001 - 130,000 | $0.43 |
| 130,001 - 260,000 | $0.40 |
| 260,001 - 850,000 | $0.38 |
| More than 850,000 | $0.35 |

Advanced image moderation services cost about $1.20 per 1,000 images, while standard services are around $0.60 per 1,000 images. This pay-as-you-go model allows businesses to scale costs directly with usage, avoiding the large upfront commitments typical of building a custom product.
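To see how the volume tiers translate into a daily bill, the pricing table can be turned into a small lookup. This sketch rests on one assumption the table does not state: the rate for the tier you land in applies to the whole day's volume, not only to the images above the tier boundary. The function names are illustrative.

```python
# (upper bound of daily volume, USD per 1,000 images), copied from the pricing table.
TIERS = [
    (5_000, 0.50),
    (50_000, 0.45),
    (130_000, 0.43),
    (260_000, 0.40),
    (850_000, 0.38),
    (float("inf"), 0.35),
]


def rate_per_thousand(images_per_day: int) -> float:
    """Return the per-1,000-image rate for a given daily volume."""
    if images_per_day < 0:
        raise ValueError("images_per_day cannot be negative")
    for upper_bound, rate in TIERS:
        if images_per_day <= upper_bound:
            return rate
    raise AssertionError("unreachable: the last tier has no upper bound")


def daily_cost(images_per_day: int) -> float:
    """Estimated daily cost in USD, assuming one flat rate for the whole volume."""
    return round(images_per_day / 1000 * rate_per_thousand(images_per_day), 2)


if __name__ == "__main__":
    for volume in (5_000, 100_000, 1_000_000):
        print(f"{volume:>9,} images/day -> ${daily_cost(volume):,.2f}")
```

Under this flat-rate reading, crossing a tier boundary can slightly lower the total bill, so it is worth confirming with a vendor whether its tiers are flat or marginal before budgeting.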

Image Moderation Cost by Volume

Outsourcing content moderation to specialized vendors has become a popular choice for many platforms. This approach offers several advantages:

However, outsourcing also presents challenges:

| Advantages of Outsourcing Image Moderation | Disadvantages of Outsourcing Image Moderation |
|---|---|
| Cost savings by avoiding internal hiring and training expenses | Quality control challenges due to inconsistent moderation decisions |
| Improved efficiency through experienced vendors with refined processes | Cultural and contextual misunderstandings affecting moderation accuracy |
| Access to specialized expertise, including legal compliance and brand protection | Data security and privacy risks from sharing user content with third parties |
| Scalability to handle fluctuating content volumes and spikes | Ethical concerns regarding the mental well-being of outsourced moderators exposed to harmful content |
| Enhanced user experience by creating a safe and engaging environment | Need for strong governance and oversight to ensure partner compliance with company policies |
| Reduced logistical and financial burdens of maintaining an internal team | Potential regulatory compliance challenges across different jurisdictions |
| Increased adaptability by leveraging latest moderation tools and industry knowledge | N/A |

Outsourcing allows platforms to focus on their core product while leveraging the expertise and scalability of specialized vendors. Strong governance and regular audits help ensure alignment with company standards.

Choosing the right content moderation vendor requires a careful evaluation of several criteria. The ideal product must align with the platform’s needs and support future growth.

Key evaluation criteria:

| Feature | Purpose | Impact |
|---|---|---|
| Scalability | Manage increasing content volumes | Ensures smooth platform operation as it grows |
| API Integration | Connect with existing systems | Enables seamless data flow and automation |
| Multi-language Support | Monitor content in various languages | Supports a global user base |
| Real-time Reporting | Track moderation metrics | Provides actionable insights for decision-making |

Customizable moderation rules, human-AI collaboration, and a strong vendor reputation are crucial for selecting a content moderation product.

Examples of Top Vendors:

Platforms should match the product’s strengths to their specific content types and volume. Performance varies by vendor depending on training data specificity. A thorough evaluation ensures the chosen product supports both current and future content moderation needs.

E-commerce platforms rely on high-quality image moderation to maintain trust and safety. Every image uploaded, whether for a product listing or as user-generated content, shapes the overall product presentation. The unique needs of e-commerce require careful review of both official product images and customer-submitted visuals. These images must meet strict standards for accuracy, legality, and appropriateness.

Common types of images that require moderation on e-commerce platforms include:

E-commerce images present challenges for moderation. Filtering explicit or misleading visuals can be difficult due to context and artistic variations. Deepfakes and manipulated images require advanced forensic AI tools. Cultural sensitivity also plays a role, as what is acceptable in one region may be offensive in another. Platforms must process a high volume of images in real time, which demands scalable solutions. Hybrid moderation, combining AI and human review, helps balance accuracy and context understanding. Automated background removal supports enhanced product presentation by isolating the product from distracting elements, improving visual clarity and consistency.

Brand safety stands as a top priority for every e-commerce platform. Effective image moderation protects the brand from reputational damage caused by offensive or misleading visuals. Nearly 93% of buyers consider online reviews and user-generated content before making purchase decisions. Moderation ensures that product images and reviews remain genuine and appropriate, building consumer trust.

A well-moderated platform filters out inappropriate or counterfeit visuals, ensuring only authentic product presentation reaches customers. This approach improves user experience and encourages repeat purchases. Companies that respond quickly to negative feedback and maintain accurate product presentation demonstrate commitment to trust and safety. Automated background removal further enhances product presentation by providing clean, distraction-free visuals. Compliance with legal and ethical standards, such as GDPR and CCPA, reduces risk and supports a trustworthy marketplace. E-commerce platforms that invest in robust image moderation and background removal create an environment where users feel safe and confident in their purchases.

Online platforms must navigate a complex landscape of global laws that govern image moderation and content compliance. Regulations such as the EU Digital Services Act require platforms to implement features like user blocking, reporting tools, and transparent content removal processes. These laws often demand that platforms provide users with ways to contest moderation decisions. Many countries impose strict penalties, including fines and even prison sentences for employees who fail to comply.

Platforms face challenges when laws require the removal of content that is "legal but harmful." This creates uncertainty in defining and enforcing community guidelines. Some regulations do not distinguish between large and small platforms, applying the same rules to all. The European Union, the United Kingdom, the United States, Australia, and Canada have each introduced their own laws, with unique requirements and enforcement methods.

| Region/Country | Key Laws/Regulations | Enforcement and Penalties |
|---|---|---|
| European Union | Digital Services Act, Digital Markets Act | Fines up to 6% of global annual turnover under the Digital Services Act |
| United Kingdom | Online Safety Act | National enforcement bodies, penalties |
| United States | Section 230, State laws | Ongoing judicial cases, overlapping mandates |
| Australia | eSafety Commissioner regulations | Enforcement notices, reforms |
| Canada | Online Harms Act (proposed as Bill C-63) | Legislative enforcement mechanisms |

Platforms must monitor AI moderation accuracy and adapt community guidelines to meet evolving legal standards. These steps help maintain user safety and transparency.

Data privacy laws like GDPR and CCPA have a major impact on image moderation systems. Images with identifiable information, such as faces or license plates, are treated as personal data. Platforms must follow strict rules for data minimization, purpose limitation, and security. To comply, many use AI-powered anonymization tools that blur or mask personal details in images. Manual methods often prove slow and error-prone, while automated solutions offer speed and consistency.
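As a rough illustration of automated anonymization, the sketch below masks regions of an image that a detector has marked as personal data. It is deliberately simplified: the image is a plain grid of grayscale values, and the bounding boxes are assumed to come from a face or license-plate detector that is not shown. A production system would use an image library and a real detection model. The names `mask_regions`, `Image`, and `Box` are hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale pixels, row-major, values 0-255
Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def mask_regions(image: Image, boxes: List[Box]) -> Image:
    """Return a copy of `image` with every box flattened to its mean intensity."""
    masked = [row[:] for row in image]
    height = len(image)
    width = len(image[0]) if image else 0
    for top, left, bottom, right in boxes:
        # Clamp the box so an imprecise detector cannot index outside the image.
        top, bottom = max(0, top), min(height, bottom)
        left, right = max(0, left), min(width, right)
        if top >= bottom or left >= right:
            continue  # empty or fully out-of-bounds box
        # Read from the original image so overlapping boxes do not compound.
        pixels = [image[y][x] for y in range(top, bottom) for x in range(left, right)]
        mean = sum(pixels) // len(pixels)
        for y in range(top, bottom):
            for x in range(left, right):
                masked[y][x] = mean
    return masked


if __name__ == "__main__":
    picture = [
        [0, 0, 0, 0],
        [0, 100, 200, 0],
        [0, 100, 200, 0],
        [0, 0, 0, 0],
    ]
    for row in mask_regions(picture, [(1, 1, 3, 3)]):
        print(row)
```

Returning a masked copy instead of editing in place keeps the original available for any processing that still has a lawful basis, while only the anonymized version moves further down the pipeline.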

CCPA gives California residents the right to opt out of the sale or sharing of their personal information, making it important for platforms to respect user choices. AI-driven anonymization helps platforms process large volumes of images while protecting privacy. This approach reduces legal risks and builds trust with users. Non-compliance can lead to heavy fines, reputational harm, and legal disputes. By integrating privacy features into image moderation and aligning with community guidelines, platforms can balance safety, compliance, and innovation.

Successful integration of image moderation tools into platform workflows ensures a seamless user experience and strong product presentation. Teams should:

Teams should avoid storing sensitive data unless necessary and always obtain user consent.

Testing ensures that image moderation solutions deliver reliable results for every product and user-generated content submission. Teams should:

This approach supports proactive content moderation and maintains high-quality product presentation.

Human moderators need continuous training to ensure consistent and unbiased image moderation. Effective training protocols include:

This process helps moderators handle user-generated content and maintain a positive experience for all users.

User feedback drives continuous improvement in image moderation and product presentation. Platforms should:

Regular collaboration with clients and moderation teams ensures that best practices for content moderation evolve with changing trends and user needs.

| Practice | Benefit |
|---|---|
| Feedback loops | Improve moderation accuracy |
| Real-time data visibility | Enhance product experience and trust |
| Continuous training | Support consistent product presentation |

Background removal, when integrated into these workflows, improves product presentation and user experience by delivering clean, distraction-free images. This approach supports a safe environment for user-generated content and strengthens the overall product.

When evaluating content moderation tools, platforms should focus on precision and recall as the most important performance metrics. Precision is the share of flagged images that are actually harmful, while recall is the share of harmful images that the product flags. High precision means fewer false positives, so fewer safe images get blocked. High recall means the product catches most harmful content. Many content moderation solutions now provide confidence scores for each decision, allowing teams to set custom thresholds. Automation efficiency also matters. Some tools save over 70% of manual moderation time, letting teams focus on complex cases. Platforms should also track how often users appeal decisions and how many cases get overturned. These metrics help measure the real-world experience and effectiveness of the product.
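The trade-off between precision and recall is easiest to see by computing both at different confidence thresholds. The sketch below assumes a small, hand-labeled evaluation set of `(model_score, is_actually_harmful)` pairs. The sample data and function names are made up for illustration.

```python
from typing import Iterable, Tuple


def precision_recall(
    scored: Iterable[Tuple[float, bool]], threshold: float
) -> Tuple[float, float]:
    """Precision and recall when every image with score >= threshold is flagged."""
    tp = fp = fn = 0
    for score, harmful in scored:
        flagged = score >= threshold
        if flagged and harmful:
            tp += 1  # harmful image correctly flagged
        elif flagged:
            fp += 1  # safe image wrongly flagged
        elif harmful:
            fn += 1  # harmful image missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def overturn_rate(appeals: int, overturned: int) -> float:
    """Share of appealed decisions that reviewers reversed."""
    return overturned / appeals if appeals else 0.0


if __name__ == "__main__":
    # Made-up evaluation set: (model score, ground-truth label).
    sample = [
        (0.95, True), (0.80, True), (0.70, False),
        (0.60, True), (0.30, False), (0.10, False),
    ]
    for threshold in (0.50, 0.75):
        p, r = precision_recall(sample, threshold)
        print(f"threshold={threshold:.2f} precision={p:.2f} recall={r:.2f}")
```

On this sample, raising the threshold from 0.50 to 0.75 lifts precision from 0.75 to 1.00 but drops recall from 1.00 to about 0.67, which is the balance a team has to strike when setting custom thresholds.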

Leading content moderation tools offer a wide range of features to meet different platform needs. Key features include real-time moderation, AI-powered detection, and support for multiple content types like images, video, and live streams. Many products use advanced computer vision and natural language processing to improve accuracy and reduce manual review. Integration with IoT devices allows smarter, context-aware filtering. Customization options let platforms set their own rules and workflows. Top products also support multi-language moderation and provide strong API integration for easy setup. The table below compares features of popular content moderation solutions:

| Product | Scalability | Accuracy | Customization | Integration | Advanced AI | Use Case Focus |
|---|---|---|---|---|---|---|
| Google Cloud Vision API | High | Strong | Safe Search | Easy | Machine Learning | Enterprise management |
| Microsoft Azure | Enterprise | Strong | Custom lists | Tailored | OCR, AI | Content moderation |
| Picpurify | Proven | 98% | Custom models | Real-time | AI-driven | Enterprise needs |
| Sightengine | Scalable | High | Nudity, redaction | Easy | Multi-modal | Multi-modal moderation |

Pricing for content moderation tools varies based on the product, moderation method, and business needs. In-house solutions require investment in staff, technology, and ongoing training. Outsourced products often use pay-as-you-go or subscription models, which can scale with content volume. Key cost factors include the number of images, the complexity of content, and the need for real-time moderation. Products that handle deep fakes or require cultural expertise may cost more. Outsourcing can free up resources and provide access to the latest technology and local knowledge. There is no single pricing model, so platforms should compare options based on their size, content type, and desired experience.

Checklist for Evaluating Content Moderation Solutions:

Generative AI is changing the landscape of image moderation. Platforms now face a surge in AI-generated images and videos. These visuals often appear more realistic and context-aware, making them harder to detect with traditional tools. Modern moderation systems must understand subtle cues, cultural references, and emotional tones. This shift requires smarter AI and better collaboration between machines and humans.

Key trends shaping the future of image moderation include:

| Emerging Trend | Description |
|---|---|
| AI-Driven Contextual Understanding | AI now grasps tone, intent, and cultural nuances, improving trust in moderation. |
| Use of Generative AI and Synthetic Data | Synthetic data strengthens training, making models more robust and adaptable. |
| AI-Human Hybrid Moderation | AI handles volume, while humans manage sensitive or complex content. |
| Multi-Modal Foundational AI Models | Systems analyze images, audio, text, and sensor data for a complete view. |
| Deepfake and AI-Generated Content Detection | Advanced tools spot deepfakes and synthetic media, stopping misuse quickly. |
| Real-Time Moderation for Video and Live Streaming | Platforms now moderate live content with predictive analytics and user controls. |
| Stricter Regulatory Frameworks and Ethics | Laws require platforms to show accountability, reduce bias, and protect privacy. |

Generative AI brings new challenges and opportunities. Platforms must invest in smarter, more ethical moderation to keep pace with evolving content.

Image moderation must evolve to address new threats and content types. Platforms use machine learning to help systems learn from past decisions and user feedback. This process allows AI to adapt and recognize new forms of inappropriate content. Customization ensures moderation tools match each platform’s unique standards.

Key practices for continuous improvement include:

Continuous learning and adaptation keep moderation systems effective. Platforms that combine automation, human insight, and regular updates stay ahead of emerging risks and maintain user trust.

To implement effective image moderation, platforms should:

Platforms must review user-generated content, product photos, profile pictures, and advertisements. Moderators also check images for violence, nudity, hate symbols, and misleading visuals. Each type can affect user safety and brand reputation.

AI moderation tools analyze thousands of images per second. These systems provide real-time or near-instant results. Fast processing helps platforms keep harmful content away from users.

Many advanced tools support multi-language detection and cultural context. They use AI models trained on diverse data. This approach helps platforms serve global audiences and respect local norms.

Moderators review flagged images. Users can appeal decisions through platform tools. Regular feedback and retraining help reduce errors and improve accuracy over time.

Most vendors offer APIs and SDKs for easy integration. Platforms can connect moderation tools to their workflows. This setup streamlines content review and supports automation.