
Leading Image Moderation Providers for Visual Content Checks

| Use Case / Survey | Description | Impact on Brand Reputation |
|---|---|---|
| Gaming Platforms | Pre-moderation shields young users from harmful content. | Builds trust by protecting vulnerable groups. |
| Interaction Platforms | Monitoring and flagging inappropriate or misleading content. | Maintains authenticity and prevents harassment. |
| Marketplaces | Moderation ensures reviews are authentic and conversations safe. | Stops malicious content that could damage the brand. |
| Knowledge Platforms | Pre-moderation checks information accuracy. | Creates a reliable knowledge base and supports credibility. |
| Survey Statistics | 85% of users trust user-generated content; 95% of travelers read reviews before booking; 83% of job seekers check company reviews. | Shows how moderated content shapes consumer choices and brand image. |

When you look for the best image moderation tools, you see names like Amazon Rekognition, Google Cloud Vision, Api4AI, Hive Moderation, Sightengine, WebPurify, DeepCleer, Foiwe, and Checkstep. Many businesses trust these companies for their content moderation services. Take a look at this quick table to see who stands out:

| Provider Type | Examples | Why They Lead |
|---|---|---|
| Tech Giants | Google, Amazon, Microsoft | Fast, smart AI, easy to scale |
| Specialists | DeepCleer, WebPurify | Super accurate, fits your industry |
| Startups | Hive, Truepic, Sensity AI | Cool new tech, real-time checks, deepfake tools |

You can count on these providers for reliable image moderation and content moderation services across many industries.

You want your platform to feel safe for everyone. When you let images go unchecked, you open the door to a lot of risks. Here’s what can happen if you skip image moderation or content moderation:

Laws and content safety standards now expect you to take action. GDPR requires you to handle personal data safely, and the EU Digital Services Act requires you to act quickly on harmful content. If your platform is open to kids, you must block age-inappropriate images and prevent grooming risks. You need both AI and human review for sensitive material, especially when you moderate user-generated content or video. Real-time monitoring helps you catch problems before they go viral.

If you ignore these rules, you risk legal trouble, financial loss, and losing your users’ trust. You want to keep your community safe and avoid violations.

When you show users that you care about content moderation, you build brand trust. Here’s how you can do it:

These steps help you filter out harmful content and keep your platform positive. People feel safer and more welcome. They know you care about content safety and sensitive content moderation. This trust keeps users coming back and helps your brand grow.

Selecting the right image moderation API can feel overwhelming. You want to keep your platform safe, but you also need a solution that fits your business. Let’s break down what matters most when you compare content moderation tools.

You need an image moderation API that catches harmful content without flagging safe images by mistake. High accuracy means fewer false positives (safe images flagged) and fewer false negatives (harmful images missed). Some APIs, like Sightengine, claim up to 99% accuracy and can process millions of images every day. Others, like OpenAI’s omni-moderation-latest, support both image and text moderation, cover more categories, and work in many languages. Here’s a quick look at how some leading APIs stack up:

| API / Model | Key Features & Coverage | Performance Metric (F1-Score / Accuracy) | Notes |
|---|---|---|---|
| PerspectiveVision | Detects 11 unsafe image categories | F1-Score: 0.810 | Customizable unsafe image definitions |
| Google Perspective API | Similar category coverage | Not explicitly stated | Widely used for unsafe image detection |
| Microsoft Image Moderation | Focus on adult and racy content | Not explicitly stated | Targets specific unsafe categories |
| OpenAI omni-moderation-latest | 13 categories, multimodal, multilingual | Improved accuracy and recall | Higher false positive rate, strong multilingual support |
| Sightengine API | 99% accuracy, high throughput | 99% accuracy | Great for large-scale, real-time content moderation |

You want to choose an image moderation API that matches your needs for explicit content detection, user-generated content, and text moderation.
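The table above mixes two metrics, F1-score and accuracy, and they are not interchangeable. Here is a minimal sketch of how each is computed from a labeled test set. The counts are made up for illustration and do not describe any provider listed here. Because harmful images are usually rare, accuracy can look excellent while F1 tells a more modest story.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts from comparing API verdicts against human labels."""
    true_positives: int   # harmful images correctly flagged
    false_positives: int  # safe images flagged by mistake
    false_negatives: int  # harmful images that slipped through
    true_negatives: int   # safe images correctly passed


def precision(c: ConfusionCounts) -> float:
    """Share of flagged images that were actually harmful."""
    flagged = c.true_positives + c.false_positives
    return c.true_positives / flagged if flagged else 0.0


def recall(c: ConfusionCounts) -> float:
    """Share of harmful images that were caught."""
    harmful = c.true_positives + c.false_negatives
    return c.true_positives / harmful if harmful else 0.0


def f1_score(c: ConfusionCounts) -> float:
    """Harmonic mean of precision and recall."""
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if (p + r) else 0.0


def accuracy(c: ConfusionCounts) -> float:
    """Share of all images that were classified correctly."""
    total = (c.true_positives + c.false_positives
             + c.false_negatives + c.true_negatives)
    return (c.true_positives + c.true_negatives) / total if total else 0.0


# Illustrative numbers only: 10,000 test images, 100 of them harmful.
sample = ConfusionCounts(true_positives=80, false_positives=20,
                         false_negatives=20, true_negatives=9880)

if __name__ == "__main__":
    print("accuracy:", round(accuracy(sample), 3))
    print("F1-score:", round(f1_score(sample), 3))
```

When a vendor quotes a single accuracy figure, it is worth asking for precision and recall on a data set that resembles your own traffic.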

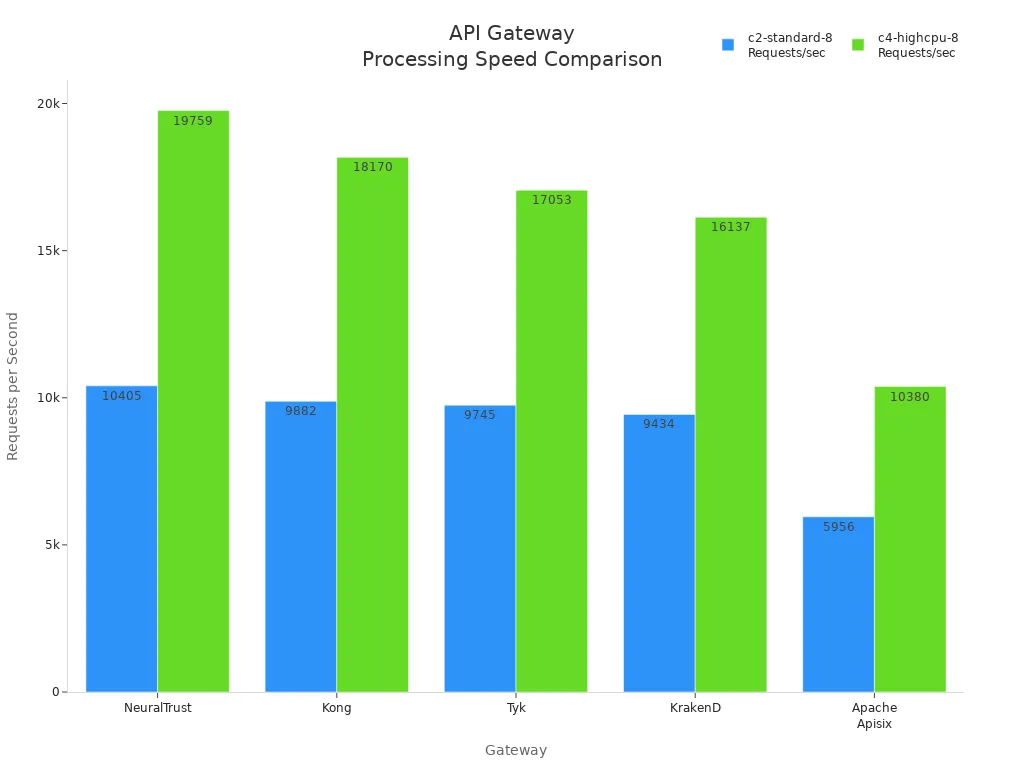

Fast results matter, especially if you need real-time content moderation. Some APIs handle billions of images daily and process thousands of requests per second. Imgix, for example, uses its own hardware to keep things speedy and reliable. Check out this chart to see how different gateways perform under heavy loads:

API Gateway Processing Speed Comparison

If your platform grows, your image moderation API should scale with you. Look for content moderation tools that keep up during peak times.
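On your side of the integration, scaling usually means sending many images in parallel without exceeding the provider's rate limits. The sketch below shows one common pattern, a concurrency cap built with `asyncio.Semaphore`. The `moderate_image` function is a hypothetical stand-in that sleeps instead of calling a real API, and the limit of 5 is an arbitrary assumption.

```python
import asyncio


async def moderate_image(image_id: str) -> dict:
    """Hypothetical stand-in for a real moderation API call."""
    await asyncio.sleep(0.01)  # simulates network latency
    return {"image_id": image_id, "flagged": "flag" in image_id}


async def moderate_batch(image_ids: list[str], max_in_flight: int = 5) -> list[dict]:
    """Moderate many images while capping simultaneous requests."""
    semaphore = asyncio.Semaphore(max_in_flight)

    async def limited(image_id: str) -> dict:
        # At most `max_in_flight` coroutines get past this point at once.
        async with semaphore:
            return await moderate_image(image_id)

    # gather() returns results in the same order as the inputs.
    return await asyncio.gather(*(limited(i) for i in image_ids))


if __name__ == "__main__":
    ids = [f"img-{n}" for n in range(20)] + ["img-flag-1"]
    results = asyncio.run(moderate_batch(ids))
    print(sum(r["flagged"] for r in results), "of", len(results), "flagged")
```

In a real integration you would replace the stub with the provider's client and set the cap from its documented rate limits.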

You handle a lot of sensitive data. Your image moderation API must follow privacy laws like GDPR. Some providers, like DeepCleer, hold ISO 27001, ISO 9001, and ISO 2000 certifications. These show they take data security seriously.

AI-driven image moderation works fast and never sleeps. It’s great for catching obvious harmful content and handling huge volumes. But AI can miss context, slang, or creative workarounds. Human moderators understand nuance and culture. They step in for tricky cases, like reviewing avatars or complex images. The best image moderation API lets you combine AI-driven content moderation with human review. This hybrid approach gives you both speed and judgment.
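One simple way to wire up this hybrid approach is to route each image by the model's confidence score. The sketch below assumes the API returns an "unsafe" score between 0 and 1. The two thresholds are hypothetical and would need tuning against your own data and risk tolerance.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REJECT = "reject"
    HUMAN_REVIEW = "human_review"


def route(unsafe_score: float,
          reject_at: float = 0.90,
          approve_below: float = 0.20) -> Decision:
    """Route an image based on a model's unsafe score in [0, 1].

    The thresholds are illustrative assumptions, not vendor defaults.
    """
    if not 0.0 <= unsafe_score <= 1.0:
        raise ValueError("unsafe_score must be between 0 and 1")
    if unsafe_score >= reject_at:
        return Decision.REJECT        # model is confident it is harmful
    if unsafe_score < approve_below:
        return Decision.APPROVE       # model is confident it is safe
    return Decision.HUMAN_REVIEW      # uncertain: send to a moderator


if __name__ == "__main__":
    for score in (0.05, 0.55, 0.97):
        print(score, "->", route(score).value)
```

Widening the gap between the two thresholds sends more images to humans, which raises cost but lowers the chance of an automated mistake.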

You want content moderation tools that fit your budget and work with your tech stack. Many image moderation API providers offer tiered pricing, free trials, and pay-as-you-go plans. For example, DeepCleer charges per image for both automated and live moderation.

Tip: Make a checklist of your must-haves—accuracy, speed, privacy, human-in-the-loop, and cost. Match these to your business needs before you decide.
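If you want to turn that checklist into a number, a weighted score is one option. In the sketch below, the criteria come from the tip above, while the weights, the 1 to 5 ratings, and the provider names are all placeholders you would replace with your own assessment.

```python
# Importance of each checklist item to your business (hypothetical weights).
WEIGHTS = {
    "accuracy": 5,
    "speed": 3,
    "privacy": 4,
    "human_in_the_loop": 2,
    "cost": 3,
}

# Your own 1-5 ratings per provider (placeholder names and values).
RATINGS = {
    "Provider A": {"accuracy": 5, "speed": 4, "privacy": 3,
                   "human_in_the_loop": 2, "cost": 3},
    "Provider B": {"accuracy": 4, "speed": 3, "privacy": 5,
                   "human_in_the_loop": 5, "cost": 4},
}


def weighted_score(ratings: dict[str, int], weights: dict[str, int]) -> float:
    """Weighted average of ratings; raises KeyError if a criterion is missing."""
    total_weight = sum(weights.values())
    return sum(weights[name] * ratings[name] for name in weights) / total_weight


def rank_providers(all_ratings: dict[str, dict[str, int]],
                   weights: dict[str, int]) -> list[tuple[str, float]]:
    """Return (provider, score) pairs, best score first."""
    scored = [(name, weighted_score(r, weights)) for name, r in all_ratings.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, score in rank_providers(RATINGS, WEIGHTS):
        print(f"{name}: {score:.2f}")
```

The score is only as good as the ratings behind it, so base them on a trial with your own images where you can.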

If you want a tool that handles huge amounts of images fast, Amazon Rekognition stands out. You get:

You can trust Amazon Rekognition for content moderation services when you need speed, accuracy, and the ability to scale.

Google Cloud Vision gives you a wide range of tools for image moderation and content moderation services. Here’s what you get:

You might notice some false positives or negatives, which happens with most AI-based content moderation services. Google Cloud Vision works best when you combine it with human review for tricky cases.

Api4AI brings real-time content moderation services with a focus on flexibility. You can:

If you want customizable, cloud-based content moderation services that are easy to integrate, Api4AI is a smart choice.

DeepCleer offers a powerful AI-powered moderation platform. Here’s what makes it special:

If you need best-in-class accuracy and want to handle both traditional and new threats, Sightengine is a top pick for content moderation services.

WebPurify helps you moderate many types of images, from profile photos to livestreams. You can use it for:

WebPurify’s content moderation services adapt to your needs, making it easy to keep your community safe.

Foiwe offers content moderation services that blend AI and human review. You can use Foiwe for:

Foiwe works well if you want a mix of automation and human judgment for your content moderation services.

Checkstep focuses on compliance and customization in content moderation services. You get:

If you need to meet strict regulations or want to tailor your content moderation services, Checkstep is a strong option.

Tip: If you need video moderation or want to combine AI with human review, many of these providers offer hybrid solutions. You can pick the right mix for your platform.

You want to know what sets each provider apart. Here’s a quick table to help you compare the main features and strengths of top content moderation services:

| Provider | Key Features & Strengths | Performance Highlights | Customization & Integration | Extra Notes |
|---|---|---|---|---|
| Api4AI | Customizable sensitivity, real-time analysis | Fast processing | Easy integration | Reduces manual moderation |
| AWS Rekognition | Flags up to 95% unsafe content, human review support | Scalable, cost-effective | Predefined/custom unsafe categories | Pay-as-you-go pricing |
| Clarifai | Pre-built models, hate symbol detection, multimodal | Efficient, scalable | Integrates with advanced models | Streamlines content review |
| CyberPurify | Real-time filtering, spyware protection | Real-time filtering | Cross-platform support | Focus on child safety |
| Google Cloud | Safe Search Detection, customizable filters | Automated, scalable | Easy application integration | Reduces manual moderation |
| Microsoft Azure | OCR, face detection, custom image lists | Confidence scores for detection | Custom workflows | Reduces errors and costs |
| PicPurify | 98% accuracy, fast decisions | High accuracy and speed | Highly customizable | Cost-efficient |
| SentiSight.ai | Flexible deployment, pay-as-you-go | Flexible and cost-effective | REST API integration | Free credits for new users |
| DeepCleer | Image and video moderation, up to 99% accuracy | High accuracy, scalable | Customizable rules, REST APIs | Privacy compliant |
| WebPurify | AI + human moderation, multi-language text detection | Fast, 98.5% accuracy | Batch review, image tagging | No content stored on servers |

Tip: Use this table to match the right content moderation tools to your business needs.

You want your content moderation services to catch harmful material fast and accurately. Most top providers use advanced AI models that deliver high accuracy and low server load. For example, DeepCleer reports up to 99% accuracy and PicPurify reports 98%. VISUA stands out with its Adaptive Learning Engine, which quickly adapts to new content types and works well for real-time processing. VISUA and Microsoft also let you process multiple moderation tasks in one API call, which saves time. If you need on-premise deployment for privacy, VISUA gives you that option, while most others use cloud-based systems. Self-hosted libraries like NudeNet offer good privacy and cost control, but you may need more technical setup to reach the same accuracy as third-party content moderation services.

You want content moderation services that fit smoothly into your workflow and offer strong support. Here’s a table to help you compare integration and support options:

| Provider | Integration Options | Support Options |
|---|---|---|
| Utopia AI | Rapid integration, custom AI models, all content types | Continuous learning, scalable real-time moderation |
| WebPurify | User-friendly API, automated + human moderation | 24/7 automated + human moderation, expert review |
| Besedo | Hybrid AI and human moderation, customizable | Dedicated onboarding, customer success teams |
| Alibaba Cloud | Automated moderation, scalable deep learning | High accuracy, fast response, evolving recognition |
| DeepCleer | SDKs, detailed docs, easy API setup | Feedback loops, continuous improvement, privacy focus |

Note: Many content moderation services offer both automated and human review, so you can handle tricky cases and scale as you grow.

You want to pick a provider that fits your goals and keeps your platform safe. Start by following these steps:

You should also research each provider’s experience and reputation. Look for content moderation services that match your needs, offer strong security, and fit your budget.

Every industry has its own rules and risks. You need to make sure your provider understands your field. Here are some things to think about:

Pick content moderation services that know your industry and can adjust to your needs.

You want a provider that supports you every step of the way. Leading content moderation services offer:

Tip: Choose a provider that listens to your feedback and can change their service as your business grows.

You have many strong image moderation providers to choose from, each with unique strengths. When you compare your options, keep these tips in mind:

The right choice helps you keep your platform safe and your users happy.

Image moderation checks pictures for harmful or unwanted content. You use it to keep your website or app safe. It helps block things like nudity, violence, or hate symbols before users see them.

Most top APIs review images in seconds. Some even work in real time. You get results quickly, so your users do not have to wait.

Yes! Many providers let you use both. AI handles most images fast. Human moderators step in for tricky or sensitive cases. This combo gives you better accuracy.

Leading providers follow strict privacy rules like GDPR. They use encryption and secure servers. Always check a provider’s privacy policy before you start.

Prices depend on how many images you check and which features you need. Some offer free trials or pay-as-you-go plans. You can find a solution that fits your budget.
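To see how volume drives the bill, here is a rough sketch of a graduated (tiered) per-image price calculation. The tier boundaries and prices are invented for illustration and do not reflect any provider's actual rates, so check each vendor's pricing page for real numbers.

```python
from decimal import Decimal

# Hypothetical graduated tiers: (upper image limit, price per image in USD).
# None means "no upper limit". These are NOT real vendor prices.
TIERS = [
    (10_000, Decimal("0")),        # free allowance
    (100_000, Decimal("0.0015")),  # images 10,001 to 100,000
    (None, Decimal("0.0010")),     # everything above 100,000
]


def estimate_monthly_cost(images: int, tiers=TIERS) -> Decimal:
    """Sum the cost of each tier's share of the monthly image volume."""
    if images < 0:
        raise ValueError("images must be non-negative")
    total = Decimal("0")
    previous_limit = 0
    for limit, price in tiers:
        tier_end = images if limit is None else min(images, limit)
        in_tier = max(tier_end - previous_limit, 0)  # images billed at this price
        total += in_tier * price
        if limit is None or images <= limit:
            break
        previous_limit = limit
    return total


if __name__ == "__main__":
    for volume in (5_000, 50_000, 250_000):
        print(f"{volume:>7} images -> ${estimate_monthly_cost(volume):.2f}")
```

Running the same volumes through each shortlisted provider's real tiers makes it easier to compare plans on equal terms.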