Image Source: statics.mylandingpages.co

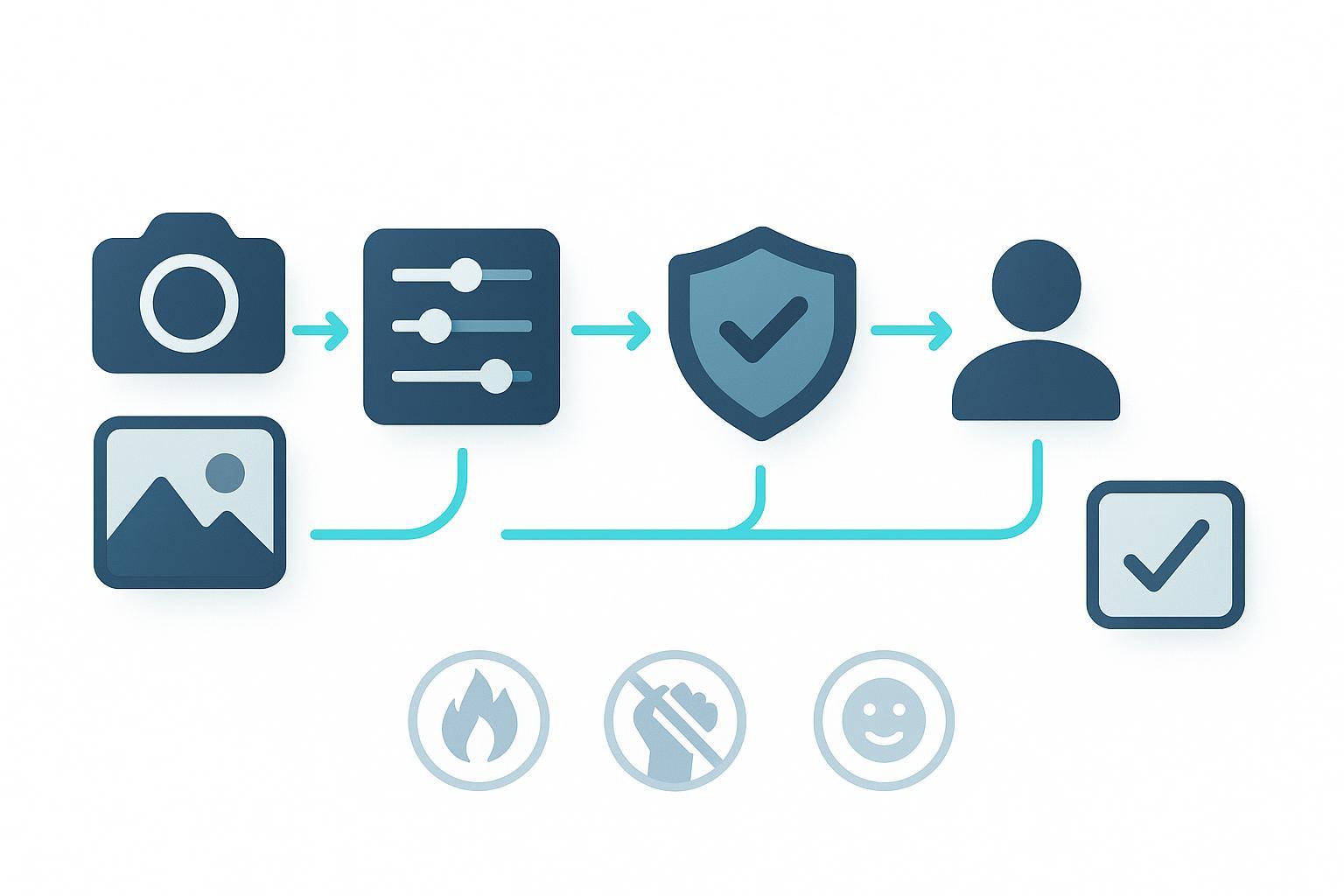

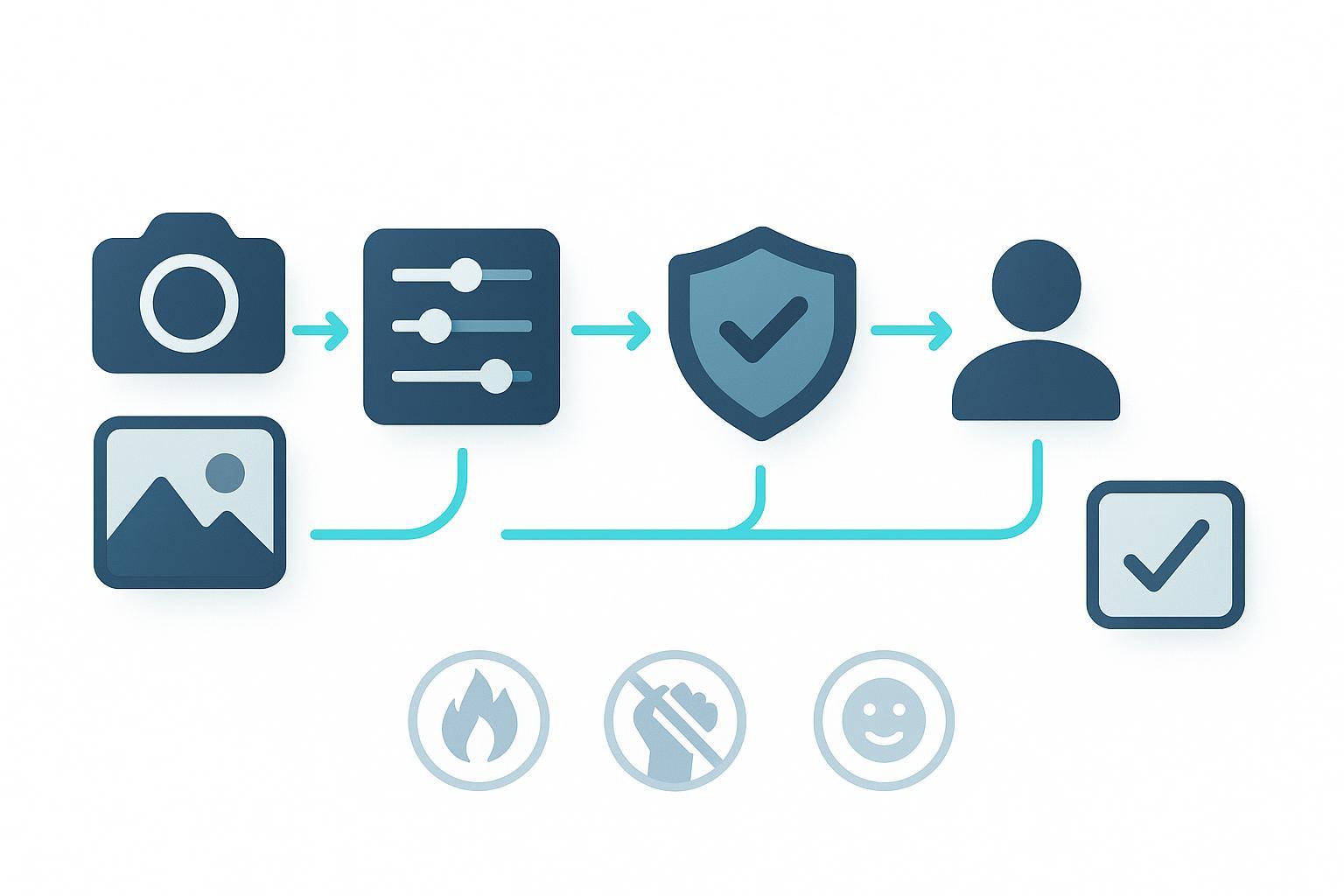

Why Image Identification APIs Are Now Core to Platform Safety

If your platform allows image uploads—e-commerce listings, user avatars, live-stream thumbnails, community posts—you already operate a trust-and-safety program. The volume and velocity of content make manual review alone impractical. Image identification APIs bring consistency, speed, and measurable thresholds to flag nudity, violence, hate symbols, weapons, and other risks, while preserving the ability for human reviewers to make contextual final calls.

In 2025, platform obligations have expanded. Under the EU’s Digital Services Act (DSA), when you restrict content or accounts you must give the affected user a “statement of reasons” that, among other things, states whether automated means were used to detect the content or to take the decision. See the official text of Regulation (EU) 2022/2065, notably Articles 16 and 17 on notice-and-action and statements of reasons (EU, 2022), and the Commission’s DSA Transparency Database documentation for statement attributes. If you process images containing personal data in the EU, the GDPR requires a lawful basis (Article 6) and records of processing activities (Article 30). Where processing is likely to result in high risk, such as large-scale systematic monitoring, it also requires a Data Protection Impact Assessment (Article 35) (EU, 2016). For California residents, the CCPA as amended by the CPRA mandates transparency and user rights, including deletion and opting out of the sale or sharing of personal information; see California Civil Code §1798.100 et seq. (State of California, current).

The takeaway: modern image moderation is not just detection accuracy—it’s governance, auditability, and fair human oversight.

Map Risks to Operational Requirements

Most platforms encounter a similar taxonomy of image risks, though thresholds, context, and legal exposure vary:

- Nudity and sexual content (including child protection considerations)

- Violence and visually disturbing content

- Hate symbols and extremist imagery

- Weapons, drugs, and other prohibited items

- Graphic medical and self-harm signals

- Fraud, spam, and policy-violating promotions (context often needed)

Operationally, you’ll need:

- Clear policy definitions and red lines, such as child sexual abuse material (CSAM) and extremist propaganda

- Confidence thresholds per label and per workflow

- Human-in-the-loop review for borderline or contextual cases

- Audit trails tied to user actions, automated flags, and reviewer outcomes

- Compliance artifacts (e.g., DSA statements of reasons, transparency reports)
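Per-label, per-workflow thresholds are easiest to audit when they live in one explicit table. The Python sketch below assumes scores have already been normalized to 0–1. The workflow names, labels, and values are hypothetical placeholders, not recommended settings.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    review: float  # scores at or above this go to a human reviewer
    reject: float  # scores at or above this are blocked automatically


# Hypothetical workflows and values; tune each from your own labeled data.
POLICY: dict[str, dict[str, Thresholds]] = {
    "avatar_upload": {
        "nudity": Thresholds(review=0.60, reject=0.90),
        "hate_symbol": Thresholds(review=0.40, reject=0.85),
    },
    "marketplace_listing": {
        "nudity": Thresholds(review=0.75, reject=0.95),
        "weapons": Thresholds(review=0.50, reject=0.90),
    },
}


def decide(workflow: str, label: str, score: float) -> str:
    """Return 'reject', 'review', or 'allow' for one label score in one workflow."""
    t = POLICY.get(workflow, {}).get(label)
    if t is None:
        return "review"  # unknown workflow or label: fail safe to a human
    if score >= t.reject:
        return "reject"
    if score >= t.review:
        return "review"
    return "allow"
```

Unknown workflow/label combinations fall back to human review rather than silently allowing content. Whether that is the right default depends on your review-queue capacity.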

For foundational concepts and taxonomy building blocks, see the article “What is content safety?” for a practical overview of common categories, pipelines, and policy alignment.

A Practical Moderation Architecture Blueprint

A dependable image moderation pipeline typically includes the following steps. Treat these as implementation checkpoints rather than theory.

- Ingestion and validation
  - Accept images via authenticated upload endpoints; enforce size and type limits.
  - Validate file types server-side from the file content rather than the client-supplied MIME type; reject non-image content early.

- Privacy-aware preprocessing
  - Strip non-essential EXIF metadata; compute a content hash for deduplication.
  - Normalize dimensions; compress within acceptable quality to save bandwidth.

- API request policy
  - Use HTTPS/TLS; authenticate with tokens stored in a secrets manager.
  - Enforce timeouts (e.g., 800–1200 ms) and retry transient failures with exponential backoff.
  - Make calls idempotent by attaching a unique request ID to each image and reusing it on retries.

- Thresholding and routing
  - Apply per-category thresholds (e.g., nudity > 0.85 confidence or “VERY_LIKELY”).
  - Route borderline cases to human review queues with SLAs (e.g., 15–30 minutes for live features).

- Logging, audit, and explainability
  - Persist raw scores, thresholds, decision rationales, and whether automated detection contributed.
  - Generate DSA statements of reasons when actioning content in the EU.

- Appeals and corrections
  - Provide user-facing appeal channels; track reversals and treat them as feedback on policy and model errors.

- Observability and continuous improvement
  - Monitor false positives/negatives per category; evaluate drift quarterly.
  - Feed labeled outcomes back into threshold tuning.
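The ingestion and preprocessing checkpoints above can be sketched with the Python standard library alone. This minimal example makes three assumptions: a hypothetical 10 MiB limit, a small illustrative set of accepted formats, and format detection from the file's leading bytes rather than from the client-supplied `Content-Type`. It then computes a SHA-256 digest that could serve as a deduplication and cache key. EXIF stripping and resizing need an image library and are left out.

```python
from __future__ import annotations

import hashlib

MAX_BYTES = 10 * 1024 * 1024  # hypothetical 10 MiB upload limit

# Leading "magic bytes" for a few common formats (illustrative subset).
SIGNATURES: dict[str, tuple[bytes, ...]] = {
    "image/jpeg": (b"\xff\xd8\xff",),
    "image/png": (b"\x89PNG\r\n\x1a\n",),
    "image/gif": (b"GIF87a", b"GIF89a"),
}


def sniff_image_type(data: bytes) -> str | None:
    """Identify the format from file content, ignoring client-sent headers."""
    for mime, prefixes in SIGNATURES.items():
        if data.startswith(prefixes):  # bytes.startswith accepts a tuple of prefixes
            return mime
    # WebP files are RIFF containers: b"RIFF" + 4-byte size + b"WEBP".
    if len(data) >= 12 and data[:4] == b"RIFF" and data[8:12] == b"WEBP":
        return "image/webp"
    return None


def validate_and_hash(data: bytes) -> tuple[str, str]:
    """Reject oversized or non-image payloads; return (mime_type, sha256_hex)."""
    if len(data) > MAX_BYTES:
        raise ValueError("image exceeds the upload size limit")
    mime = sniff_image_type(data)
    if mime is None:
        raise ValueError("unsupported or non-image content")
    # Identical bytes always yield the same digest, so it can key a dedup cache.
    return mime, hashlib.sha256(data).hexdigest()
```

Because the digest depends only on the bytes, a re-upload of the same file can reuse a stored verdict instead of triggering another API call.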

Example: Minimal REST Integration Snippet

```bash
# POST the image to your moderation endpoint
curl -X POST https://api.example.com/moderate/image \
  -H "Authorization: Bearer $TOKEN" \
  -H "Idempotency-Key: $REQ_ID" \
  -H "Accept: application/json" \
  -F "file=@/path/to/image.jpg"
```

A response from such an endpoint might look like this:

```json
{
  "id": "img_123",
  "labels": [
    { "name": "nudity", "confidence": 0.91 },
    { "name": "violence", "confidence": 0.12 },
    { "name": "hate_symbol", "confidence": 0.02 }
  ],
  "decision": {
    "status": "flagged",
    "rationale": "nudity confidence 0.91 > threshold 0.85",
    "automated_detection": true
  }
}
```
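To keep the audit trail complete, persist the raw response rather than only the final verdict. The minimal Python sketch below assumes the hypothetical response shape shown above (`id`, `labels`, `decision`) and a client-side threshold table that you supply. It flattens the response into an audit record; the record's field names are illustrative.

```python
from __future__ import annotations

import json
from datetime import datetime, timezone


def to_audit_record(raw_json: str, thresholds: dict[str, float]) -> dict:
    """Flatten a moderation response (hypothetical schema) into an audit record."""
    resp = json.loads(raw_json)
    scores = {lab["name"]: float(lab["confidence"]) for lab in resp["labels"]}
    decision = resp["decision"]
    return {
        "item_id": resp["id"],
        "raw_scores": scores,              # every label score, not just the top one
        "thresholds": dict(thresholds),    # copy of what was applied at decision time
        # Labels at or above their threshold; labels without a threshold never trigger.
        "triggered": sorted(k for k, v in scores.items() if k in thresholds and v >= thresholds[k]),
        "status": decision["status"],
        "rationale": decision["rationale"],
        "automated_detection": bool(decision["automated_detection"]),
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }


SAMPLE = """{"id": "img_123",
  "labels": [{"name": "nudity", "confidence": 0.91},
             {"name": "violence", "confidence": 0.12},
             {"name": "hate_symbol", "confidence": 0.02}],
  "decision": {"status": "flagged",
               "rationale": "nudity confidence 0.91 > threshold 0.85",
               "automated_detection": true}}"""

record = to_audit_record(SAMPLE, {"nudity": 0.85, "violence": 0.80, "hate_symbol": 0.70})
print(record["triggered"])  # ['nudity']
```

Storing the thresholds alongside the scores matters because thresholds change over time. Without them, a past decision cannot be explained later.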

Threshold Tuning with Vendor Scales

Different providers expose scores differently. Amazon Rekognition returns per-label confidence scores on a 0–100 scale, enabling numeric thresholds, per the AWS Rekognition content moderation documentation (AWS, current). Google Cloud Vision SafeSearch returns likelihood levels (from “VERY_UNLIKELY” to “VERY_LIKELY”), which you’ll map to actions per Google’s SafeSearch detection guide (Google Cloud, current). For ambiguous categories such as “racy”, start with high auto-action thresholds and route near-misses to human review to limit false positives. Adjust the thresholds once you have gathered labeled validation data.
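Mapping both scales onto a common 0–1 range lets one threshold table serve several providers. In this sketch, the Rekognition conversion is a plain division by 100. The numeric values assigned to SafeSearch likelihood names are an assumption: they are ordinal buckets, not calibrated probabilities, so a threshold of 0.75 simply means “LIKELY or above”.

```python
from __future__ import annotations

# SafeSearch likelihood names; the numeric values are an assumption for
# illustration (ordinal buckets, not calibrated probabilities).
LIKELIHOOD_TO_SCORE: dict[str, float | None] = {
    "UNKNOWN": None,
    "VERY_UNLIKELY": 0.0,
    "UNLIKELY": 0.25,
    "POSSIBLE": 0.5,
    "LIKELY": 0.75,
    "VERY_LIKELY": 1.0,
}


def from_rekognition(confidence: float) -> float:
    """Convert a Rekognition moderation-label Confidence (0-100) to 0-1."""
    if not 0.0 <= confidence <= 100.0:
        raise ValueError(f"confidence out of range: {confidence}")
    return confidence / 100.0


def from_safesearch(likelihood: str) -> float | None:
    """Map a SafeSearch likelihood name to 0-1; None means no usable signal."""
    if likelihood not in LIKELIHOOD_TO_SCORE:
        raise ValueError(f"unknown likelihood: {likelihood}")
    return LIKELIHOOD_TO_SCORE[likelihood]
```

Rekognition omits moderation labels below the request's `MinConfidence`, so a missing label is not the same as a score of zero. Decide explicitly how your routing treats absent labels and `UNKNOWN` likelihoods.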

Security and Compliance: A Deploy-Ready Checklist

Implement these controls before you turn on automated enforcement:

- Transport and auth
  - Enforce HTTPS/TLS; disable weak ciphers.
  - Secrets lifecycle: rotate keys regularly; store in KMS/Key Vault/Secret Manager; restrict access by role.

- Data governance
  - Data minimization: store only what’s necessary for moderation and appeals.
  - Records of processing: maintain Article 30 records for EU flows per GDPR (controller contacts, purposes, categories, retention).
  - DPIA: conduct for large-scale image analysis and document mitigations (accuracy limits, bias controls, opt-out options) per GDPR Article 35.
  - CPRA user rights: implement access, deletion, correction, and opt-out flows.

- Platform governance
  - DSA statements of reasons: auto-generate with flags showing automated detection participation and decision scope.
  - Transparency reports: publish periodic stats on moderation actions and appeals.

- Operational resilience
  - Rate limiting and circuit breakers on upstream API calls.
  - Tamper-evident audit logs; ensure time-synced entries and reviewer IDs.
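“Tamper-evident” can be as simple as hash chaining. Each entry stores a hash of its own content plus the previous entry's hash, so editing any earlier entry invalidates the chain from that point on. A minimal Python sketch follows. The entry fields are hypothetical, and a production system would also write to append-only storage.

```python
from __future__ import annotations

import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # previous-hash value used for the first entry


def _digest(prev_hash: str, body: dict) -> str:
    # Canonical JSON (sorted keys, compact separators) keeps hashing deterministic.
    payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], reviewer_id: str, item_id: str, action: str) -> dict:
    """Append an entry whose hash covers its content and the previous entry's hash."""
    body = {
        "ts": datetime.now(timezone.utc).isoformat(),  # assumes an NTP-synced host clock
        "reviewer_id": reviewer_id,
        "item_id": item_id,
        "action": action,
    }
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"body": body, "prev_hash": prev_hash, "hash": _digest(prev_hash, body)}
    log.append(entry)
    return entry


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; edits, reordering, or removing a middle entry break it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["body"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Hash chaining alone cannot reveal that the newest entries were deleted. Periodically copying the latest hash to a separate system closes that gap.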

For an example of privacy commitments and retention considerations, review DeepCleer’s Privacy Policy to benchmark what your own documentation should cover.

Performance and Scalability Without Compromising Accuracy

- Latency targets
  - Aim for <100 ms per image in synchronous flows where user experience demands immediate feedback (e.g., avatar uploads).
  - Move heavy workloads (bulk backfills, large catalogs) to asynchronous jobs and queues.

- Throughput and reliability
  - Batch requests where the provider supports it; process the rest with worker pools.
  - Retry with jittered backoff; fail over to secondary providers only where contracts and privacy requirements permit.

- Caching and deduplication
  - Cache outcomes for identical hashes; deduplicate repeated uploads.

- Observability at the right granularity
  - Track false positives and false negatives (FP/FN) per category and per feature.
  - Maintain score distributions and drift indicators; schedule re-evaluation quarterly.
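Retries with jittered backoff can be written once and wrapped around any provider call. This Python sketch uses “full jitter”: a random delay between zero and an exponentially growing cap. `TransientError` is a hypothetical exception your client would raise for timeouts or HTTP 429/503 responses, and the default delays are placeholders. Reuse the same idempotency key across attempts so that a server honoring idempotency keys can discard duplicates.

```python
from __future__ import annotations

import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, HTTP 429/503)."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.2,
    max_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: random.Random | None = None,
) -> T:
    """Call fn, retrying TransientError with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    if rng is None:
        rng = random.Random()
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # budget exhausted: caller can queue the image or fail over
            # Full jitter: uniform delay in [0, min(max_delay, base_delay * 2**attempt)].
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng.uniform(0.0, cap))
    raise RuntimeError("unreachable")
```

The `sleep` and `rng` parameters exist so tests can run without real delays. When the retry budget is exhausted, the error propagates so the caller can queue the image or switch providers.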

Real-world scaling results are achievable with robust cloud operations. For example, an AWS case shows moderation workloads at massive scale—serving 100M users and processing 26M videos daily—with operational cost reductions of 50–70% over 18 months, as reported in the AWS–Unitary EKS case study (AWS, 2024). While this is video-focused, the operational principles (autoscaling, efficient containers, intelligent queuing) apply to image pipelines.

Bias, Robustness, and Adversarial Defenses

- Bias evaluation
  - Test across demographics, lighting, and contexts; document limitations.
  - Use counterfactual evaluation (perturbations that should not change outcomes) to catch spurious correlations.

- Adversarial robustness
  - Detect evasion tactics: cropping out key features, overlay filters, low-contrast manipulation.
  - Combine signals (ensemble models, OCR, and metadata checks) to harden decisions.

- Policy feedback loops
  - After incidents, review policy definitions and thresholds; ensure reviewers can annotate context for model retraining.
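Counterfactual checks reduce to one number per perturbation: how often the decision flips when it should not. The Python sketch below is classifier-agnostic and treats images as opaque `bytes`. In practice, the perturbations would be image transforms (brightness shifts, re-encoding, padding) implemented with an image library.

```python
from __future__ import annotations

from typing import Callable, Iterable

Image = bytes  # stand-in type; a real pipeline would pass decoded images


def flip_rate(
    images: Iterable[Image],
    perturbations: list[Callable[[Image], Image]],
    classify: Callable[[Image], str],
) -> dict[str, float]:
    """For each perturbation, the share of images whose decision changes.

    Only use perturbations that must NOT change the policy outcome.
    """
    items = list(images)
    if not items:
        raise ValueError("need at least one image")
    baseline = [classify(img) for img in items]
    rates: dict[str, float] = {}
    for perturb in perturbations:
        flips = sum(1 for img, base in zip(items, baseline) if classify(perturb(img)) != base)
        rates[perturb.__name__] = flips / len(items)
    return rates
```

Comparing flip rates across demographic or context slices, rather than only in aggregate, is what surfaces the spurious correlations mentioned above.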

NIST’s AI Risk Management Framework emphasizes continuous governance, measurement, and improvement across the AI lifecycle. Align your program to the NIST AI RMF 1.0 core functions (Govern, Map, Measure, Manage; NIST, 2023) and build those routines into your sprint rituals.

Incident Response That Protects Users and Your Team

- Red lines and escalation
  - For suspected CSAM or credible threats, stop normal flows and escalate per legal requirements; involve designated trust & safety leadership.

- Reviewer well-being
  - Rotate teams away from disturbing content; provide mental health resources.

- Blameless postmortems
  - Focus on process improvements and tooling (thresholds, UI cues, shortcut keys) rather than individual blame.

- External coordination
  - Maintain contact points for law enforcement and NGOs where mandated; preserve chain-of-custody for evidence.

Practical Integration Example: End-to-End Workflow

Here’s how a production pipeline can combine automated scoring with human review and compliance logging.

- Upload validated image; strip EXIF; compute hash.

- Call your moderation API; store raw scores and model version.

- Apply thresholds; if above the “reject” line, block and create a DSA statement of reasons (where applicable).

- If borderline, push to a reviewer queue with context and prior user history.

- After the decision, log outcomes and update score thresholds if patterns emerge.

In one such pipeline, the moderation service could be provided by DeepCleer, which offers multi-label detection (nudity, violence, hate symbols, weapons) with low-latency responses and global deployment options. Disclosure: This example includes DeepCleer, an affiliated product, for illustration of workflow design; evaluate any vendor independently against your requirements.
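Generating statements of reasons is easier when the record is structured from the start. The Python sketch below is a hypothetical internal record loosely organized around the elements DSA Article 17(3) asks for. Those elements are the restriction imposed, the facts relied on, the use of automated means, the legal or contractual ground, and redress options. The field names and values are illustrative and are not the DSA Transparency Database submission schema.

```python
from __future__ import annotations

from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

ALLOWED_GROUNDS = {"illegal_content", "terms_of_service"}


@dataclass
class StatementOfReasons:
    """Hypothetical internal record; not the Transparency Database schema."""
    item_id: str
    restriction: str              # e.g. "removal" or "visibility_restriction"
    territorial_scope: str        # e.g. "EU" or specific member states
    facts_and_circumstances: str  # what was detected and why it was actioned
    automated_detection: bool     # content was flagged by automated means
    automated_decision: bool      # the decision was taken without human review
    ground_type: str              # "illegal_content" or "terms_of_service"
    ground_reference: str         # legal provision or terms-of-service clause
    redress: str                  # how the user can contest the decision
    created_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def __post_init__(self) -> None:
        if self.ground_type not in ALLOWED_GROUNDS:
            raise ValueError(f"unexpected ground_type: {self.ground_type}")
        if not self.ground_reference.strip():
            raise ValueError("a legal or contractual ground is required")


def statement_for_auto_reject(
    item_id: str, category: str, score: float, threshold: float
) -> StatementOfReasons:
    """Build a statement for a fully automated removal (values are illustrative)."""
    return StatementOfReasons(
        item_id=item_id,
        restriction="removal",
        territorial_scope="EU",
        facts_and_circumstances=f"{category} score {score:.2f} >= threshold {threshold:.2f}",
        automated_detection=True,
        automated_decision=True,
        ground_type="terms_of_service",
        ground_reference="Community Guidelines: adult content",  # hypothetical clause
        redress="In-app appeal; out-of-court dispute settlement; judicial redress",
    )
```

`asdict(...)` on the result yields a plain dictionary that can be stored with the audit log or mapped onto whatever submission format you use.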

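Example Decision Routing (Python Sketch)

```python
# Thresholds for the nudity label (example values; tune per workflow).
THRESHOLDS = {"reject": 0.90, "review": 0.75}

# Stubs for hypothetical services; replace with your own implementations.
def create_statement_of_reasons(item_id, automated_detection, category): ...
def enqueue_human_review(item_id, context): ...
def log_decision(item_id, scores, thresholds, action): ...

def route(item_id, scores, context):
    nudity = scores.get("nudity", 0.0)
    if nudity >= THRESHOLDS["reject"]:
        action = "reject"  # high confidence: block and document the decision
        create_statement_of_reasons(item_id, automated_detection=True, category="nudity")
    elif nudity >= THRESHOLDS["review"]:
        action = "review"  # borderline: a human makes the final call
        enqueue_human_review(item_id, context)
    else:
        action = "allow"
    log_decision(item_id, scores, THRESHOLDS, action)  # log every outcome, including allows
    return action
```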

Metrics That Keep You Honest

Track these KPIs and review them in weekly ops meetings:

- Precision/recall per label and per feature

- Reviewer SLA compliance and backlog age

- Appeal turnaround time and reversal rate

- Percentage of actions with complete, valid DSA statements of reasons (in EU contexts)

- Latency distribution (P50/P95) and error rate per provider

- Drift indicators and retraining cadence
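Per-label precision and recall come straight from reviewer outcomes, and the same data can drive threshold tuning. This Python sketch treats reviewer verdicts as ground truth and picks the lowest threshold that meets a target precision. Recall can only fall as the threshold rises, so the lowest qualifying threshold also gives the highest recall among the qualifying ones.

```python
from __future__ import annotations


def precision_recall(
    scores: list[float], labels: list[bool], threshold: float
) -> tuple[float, float]:
    """Precision and recall of 'flag if score >= threshold' vs. reviewer labels."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    # Convention chosen here: report 0.0 when a ratio is undefined.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def lowest_threshold_for_precision(
    scores: list[float], labels: list[bool], target_precision: float
) -> float | None:
    """Lowest observed score that, used as a threshold, meets the precision target."""
    for t in sorted(set(scores)):
        precision, _ = precision_recall(scores, labels, t)
        if precision >= target_precision:
            return t
    return None  # no threshold reaches the target on this data
```

Run this per label and per feature on recent reviewer-labeled samples. A threshold chosen on stale data is one of the drift indicators worth watching.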

Common Pitfalls and Anti-Patterns

- One-size-fits-all thresholds: different features need different thresholds and appeal flows.

- No audit trail: missing raw scores or decision rationales undermines compliance and learning.

- Over-automation: blocking without human review on ambiguous categories drives unfair outcomes.

- Ignoring bias: no demographic evaluation means hidden harm to specific groups.

- Privacy gaps: retaining full-resolution images and EXIF longer than necessary increases risk.

Industry Adaptations and Special Notes

- E-commerce
  - Expect a long tail of borderline “racy” content; lean into human-in-the-loop with robust seller education.
  - Catalog backfills should run asynchronously with deduplication and caching.

- Live streaming and short video
  - Use real-time scoring with fast reviewer SLAs; sample frames for continuous monitoring.
  - Escalate immediately on red-line detections (weapons, extremist symbols).

- Gaming communities
  - Avatars and user-generated art need robust hate-symbol detection; add OCR to catch embedded text.

- Financial services and regulated industries
  - Document lawful basis and retention; ensure SOC 2–aligned control evidence. The AICPA Trust Services Criteria define Security, Availability, Processing Integrity, Confidentiality, and Privacy; see SOC 2 overview (AICPA, current).

Governance Standards Worth Aligning To

- Information security management
  - ISO/IEC 27001 specifies requirements for an information security management system (ISMS) covering confidentiality, integrity, and availability; review ISO/IEC 27001 information security requirements (ISO, current).

- Privacy extensions
  - ISO/IEC 27701 extends 27001 with a privacy information management system (PIMS); helpful when documenting records of processing and privacy governance.

- AI-specific risk guidance
  - ISO/IEC 23894 provides AI risk management guidance; use it alongside the NIST AI RMF.

ISO standards must be purchased, but ISO’s public overview page for each standard summarizes its scope and can help you plan certification roadmaps.

Putting It All Together: An Operational Playbook

- Define policies and red lines; map to labels and thresholds.

- Build the pipeline: ingestion, privacy-aware preprocessing, API scoring, routing, logging, and appeals.

- Secure it: HTTPS/TLS, secrets, IAM, audit trails, DSA statements of reasons, GDPR records.

- Tune it: start conservative, collect labeled data, reduce false positives without sacrificing safety.

- Scale it: async jobs, queues, caching, rate limiting, observability.

- Govern it: KPIs, postmortems, bias checks, drift monitoring, periodic re-assessments.

This is the discipline that separates a workable moderation feature from a resilient safety program.

Additional References You’ll Use Frequently

If you’re at the beginning of your program and need a primer on concepts and workflows, the DeepCleer content safety blog offers accessible explainers that align with the operational guidance in this article. Build your own moderated pipelines with the same rigor: clear policies, tuned thresholds, human oversight, and enforceable governance.