
Text Moderation API Buying Guide for Modern Platforms 2025

Choosing the right text moderation API in 2025 means prioritizing accuracy, security, and adaptability. Text moderation now protects users from new threats while helping platforms meet strict regulations. Modern risks include deepfakes, synthetic media, and advanced cyberattacks.

Evaluating a text moderation API requires careful attention to several core factors. Modern content moderation tools must handle large volumes of user-generated content while maintaining high accuracy and efficiency. The sections below cover the decision factors that guide most platform owners and developers.

Tip: Enterprise-grade content moderation often requires a blend of automation and human oversight to achieve the highest accuracy and compliance.

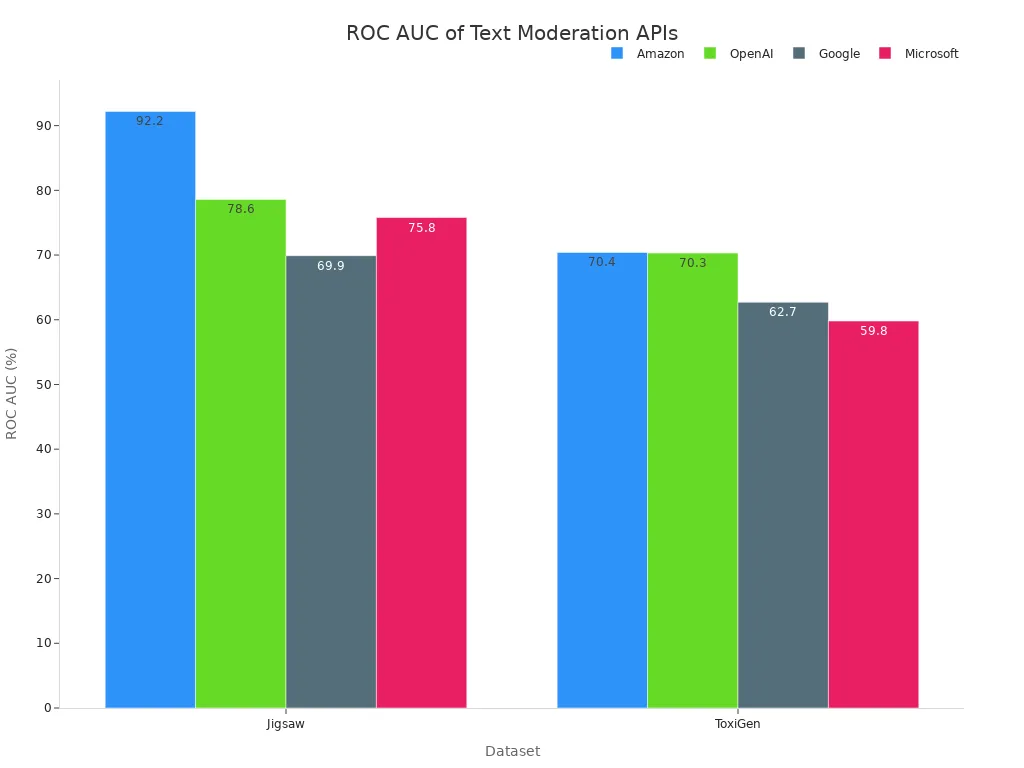

Accuracy remains a top concern for any content moderation API. Recent benchmark studies show that not all content moderation tools perform equally. The table below compares the accuracy of leading APIs across different datasets:

| Dataset | API | ROC AUC | F1 | False Positive Rate (FPR) | False Negative Rate (FNR) |
|---|---|---|---|---|---|
| Jigsaw | Amazon | ~92.2% | ~7.5% | ~8.1% | |
| Jigsaw | OpenAI | ~78.6% | ~78.6% | ~17.1% | ~25.6% |
| Jigsaw | Google | ~69.9% | ~67.2% | ~58.4% | ~1.8% |
| Jigsaw | Microsoft | ~75.8% | ~75.7% | ~20.4% | ~28.1% |
| ToxiGen | Amazon | ~70.4% | ~68.9% | ~7.2% | ~52.0% |
| ToxiGen | OpenAI | ~70.3% | ~68.1% | ~33.2% | ~56.0% |
| ToxiGen | Google | ~62.7% | ~62.7% | ~39.1% | ~35.5% |
| ToxiGen | Microsoft | ~59.8% | ~57.4% | ~16.4% | ~64.0% |

ROC AUC of Text Moderation APIs

Amazon and OpenAI lead in accuracy, while Google tends to overmoderate, and Microsoft misses more toxic content. Some specialized content moderation tools, such as those from Pangram Labs, achieve even higher accuracy rates, reaching up to 99.85% with very low false positives. This level of performance is critical for platforms that rely on accurate detection to protect users and maintain trust.
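To make the F1, FPR, and FNR columns concrete, the following is a minimal sketch of how those figures can be derived when a platform evaluates an API against its own human-labeled sample. The class name, function name, and example counts are hypothetical; ROC AUC is left out because it needs the raw scores rather than final allow/flag decisions.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts from comparing API decisions with human labels."""
    tp: int  # toxic content correctly flagged
    fp: int  # benign content wrongly flagged (over-moderation)
    tn: int  # benign content correctly allowed
    fn: int  # toxic content missed (under-moderation)


def moderation_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Return precision, recall, F1, FPR, and FNR as fractions in [0, 1]."""
    precision = c.tp / (c.tp + c.fp) if (c.tp + c.fp) else 0.0
    recall = c.tp / (c.tp + c.fn) if (c.tp + c.fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    fpr = c.fp / (c.fp + c.tn) if (c.fp + c.tn) else 0.0
    fnr = c.fn / (c.fn + c.tp) if (c.fn + c.tp) else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "fpr": fpr, "fnr": fnr}


# Hypothetical evaluation: 1,000 labeled messages, 200 of them toxic.
example = ConfusionCounts(tp=150, fp=40, tn=760, fn=50)
metrics = moderation_metrics(example)
```

A high FPR means the tool over-moderates benign content, while a high FNR means toxic content slips through, so both should be read together rather than relying on a single accuracy figure.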

Every platform has unique needs when it comes to content moderation API selection. The type of user-generated content, the platform’s audience, and the business model all influence which features matter most. For example, a social network with millions of daily comments requires different content moderation tools than a niche forum or a dating app.

Platforms often need customizable severity thresholds and class-specific moderation actions. A dating site may allow certain types of content at moderate levels but enforce strict rules for other categories. Content moderation software must support pattern-matching for profanity, personal information, and even custom term lists. This flexibility lets platforms enforce their own guidelines and protect their communities.

Note: The ability to combine classification scores with pattern-matching and user context, such as age or message frequency, enables more nuanced moderation strategies.
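As a rough illustration of that combination, the sketch below merges per-category classification scores with pattern hits and simple user context. The category names, threshold values, the 0.2 adjustment for minors, and the burst limit of 20 messages are all assumptions made for the example, not values from any particular API.

```python
from dataclasses import dataclass

# Hypothetical per-category thresholds for a dating app: some sexual content
# is tolerated at moderate scores, while harassment is handled more strictly.
THRESHOLDS = {
    "sexual": {"review": 0.70, "block": 0.90},
    "harassment": {"review": 0.40, "block": 0.60},
}

SEVERITY = {"allow": 0, "review": 1, "block": 2}


@dataclass
class UserContext:
    is_minor: bool = False
    messages_last_minute: int = 0


def decide(scores: dict[str, float], pattern_hits: list[str],
           user: UserContext) -> str:
    """Return 'allow', 'review', or 'block' for one message."""
    action = "allow"
    # Assumed policy: lower every threshold by 0.2 when the user is a minor.
    shift = 0.2 if user.is_minor else 0.0
    for category, score in scores.items():
        limits = THRESHOLDS.get(category)
        if limits is None:
            continue  # category not covered by this platform's policy
        if score >= limits["block"] - shift:
            candidate = "block"
        elif score >= limits["review"] - shift:
            candidate = "review"
        else:
            candidate = "allow"
        # Keep the most severe action seen across all categories.
        if SEVERITY[candidate] > SEVERITY[action]:
            action = candidate
    # Pattern hits or a burst of messages escalate an otherwise allowed
    # message to human review.
    if action == "allow" and (pattern_hits or user.messages_last_minute > 20):
        action = "review"
    return action
```

For instance, `decide({"sexual": 0.75}, [], UserContext())` routes the message to review, while the same score from a minor's account would be held to the stricter limits.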

Support for multiple languages is also essential. Many content moderation tools offer English-only classification but provide profanity detection in several languages. This helps platforms moderate comments and user-generated content from diverse audiences. Features like autocorrection and integration with user history dashboards further enhance the effectiveness of content moderation software.
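The pattern-matching layer described above (profanity, personal information, and custom term lists) can be sketched in a few lines. The term lists and regular expressions here are placeholders chosen for illustration; real personal-information detection needs far more careful rules than these.

```python
import re

# Hypothetical per-language custom term lists; a real deployment would load
# these from the platform's own policy configuration.
TERM_LISTS = {
    "en": {"scamlink", "badword"},
    "es": {"palabrota"},
}

# Deliberately simple patterns for illustration only.
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b")


def find_pattern_matches(text: str, language: str = "en") -> list[str]:
    """Return a label for every pattern-based rule the text triggers."""
    labels = []
    # Lowercase and split into words so term matching is case-insensitive.
    words = set(re.findall(r"\w+", text.lower()))
    if words & TERM_LISTS.get(language, set()):
        labels.append("custom_term")
    if EMAIL_RE.search(text):
        labels.append("email")
    if PHONE_RE.search(text):
        labels.append("phone")
    return labels
```

Keeping the term lists keyed by language is one way to offer profanity detection in several languages even when the classifier itself is English-only.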

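When choosing a content moderation API, platform owners should consider the type and volume of user-generated content, the audience, the categories and thresholds they need to customize, and the languages they must support.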

Matching the capabilities of content moderation tools to the platform’s specific needs ensures better protection, improved user experience, and compliance with regulations.

Modern platforms face a growing wave of harmful content. This includes hate speech, misinformation, and abusive comments. Without a strong content moderation API, platforms risk exposing users to dangerous material. Harmful content can appear in user-generated content, such as comments, posts, and messages. Social media moderation teams must act quickly to prevent these threats from spreading.

Regulations like the Digital Services Act set clear rules for handling illegal or harmful content. The law requires platforms to remove flagged material fast. Platforms must also provide transparent reasons for their actions. The table below outlines key aspects of the Digital Services Act:

| Regulation Aspect | Description |
|---|---|
| Digital Services Act (DSA) | In effect since Feb 17, 2024; applies to all intermediary services serving EU users. |
| Service Categories & Obligations | - Simple intermediaries: basic due diligence |
| Liability Protections | Platforms keep limited liability if they act quickly on illegal content. |
| Notice-and-Action Mechanism | Easy systems for users to flag illegal content; platforms must respond quickly and explain decisions. |
| Transparency Requirements | Platforms must show how they moderate content and label ads. |
| Ad Targeting Restrictions | No targeted ads using sensitive data or profiling minors. |
| Reporting Requirements | Standardized transparency reports required by law. |

Content moderation APIs help platforms meet these rules. They use policy-driven systems, harm taxonomies, and audit logs. This approach supports proactive governance, not just reactive moderation. Platforms that rely only on reactive moderation often miss fast-moving threats. Proactive content moderation strategies, such as fact-checking and demotion, reduce legal risks and protect users.
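One way to picture an audit log entry that also carries an explanation for the affected user is sketched below. The field names are hypothetical and are not taken from the DSA or from any vendor's schema; this is an illustration of the idea, not a compliance template.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class ModerationDecision:
    content_id: str
    action: str            # e.g. "remove", "demote", "allow"
    harm_category: str     # entry from the platform's own harm taxonomy
    policy_reference: str  # which written policy clause was applied
    automated: bool        # True if no human reviewed the decision
    explanation: str       # plain-language reason shown to the user
    decided_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_audit_line(decision: ModerationDecision) -> str:
    """Serialize one decision as a JSON line for an append-only audit log."""
    return json.dumps(asdict(decision), sort_keys=True)
```

Writing one JSON line per decision keeps the log easy to append to and easy to aggregate later for transparency reporting.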

Platforms that use clear policies and compliance tools create safer, more accountable spaces for everyone.

User safety stands at the heart of every content moderation API. Harmful content can damage trust and drive users away. Platforms that allow toxic comments or unsafe user-generated content risk losing their audience. Real-time moderation detects and removes threats before they reach users.

Text moderation APIs scan user-generated content for hate speech, harassment, and other dangers. AI-driven detection finds harmful content in comments and posts faster than human teams alone. These tools assign confidence scores to each piece of content, supporting precise moderation. Integration with generative AI models helps stop inappropriate content before it spreads.

Dashboards track the frequency of harmful comments and user behavior trends. These insights help platforms spot repeat offenders and new toxic patterns. Regular audits and feedback loops improve both AI and human moderation accuracy. Examples of content moderation include removing hate speech, blocking spam, flagging personal attacks, demoting misleading posts, and warning users about policy violations.

Platforms that depend only on reactive moderation often fall behind. Harmful content spreads quickly, and comments can go viral in minutes. Proactive systems, supported by content moderation APIs, keep communities safer and healthier.

Real-time moderation stands as a core feature for any modern platform. Automated moderation tools now deliver instant responses to new posts and comments. Leading moderation technology, such as Meta’s LlamaGuard 2 8X, achieves an average response time of just 3 milliseconds. This speed outpaces both previous versions and the industry average, as shown below:

| Metric | LlamaGuard 2 8X | Previous Version | Industry Average |
|---|---|---|---|
| Average Response Time | 3 milliseconds | 5 milliseconds | 8 milliseconds |

Platforms use real-time monitoring to catch harmful content before it spreads. Auto-moderation systems scan every message, applying a content moderation filter that blocks threats instantly. This approach reduces manual review and keeps communities safe.

Global platforms require content moderation tools that understand many languages. Some solutions, like Lakera Guard, only support English today. Others, such as TraceGPT, offer AI-powered content moderation in over 50 languages. Utopia AI Moderator goes further by supporting all languages and dialects, even informal speech and spelling errors. Real-time monitoring across languages ensures that auto-moderation protects users everywhere. A comprehensive moderation strategy must include language-agnostic content moderation filters to handle diverse audiences.

Every platform has unique moderation rules. Leading automated moderation tools provide customizable rule engines. These engines let teams set up content moderation filters that match their own guidelines. Auto-moderation can use multi-step workflows, conditional logic, and adaptive learning to improve accuracy.

Custom policies allow comprehensive moderation that fits each community’s needs.
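A customizable rule engine with conditional logic can be as small as an ordered list of rules evaluated top to bottom. The sketch below assumes a message dictionary with `scores` and `author_strikes` keys; those names, the rule names, and the thresholds are invented for illustration and do not come from any specific product.

```python
from dataclasses import dataclass
from typing import Callable

# Assumed message shape: {"text": str, "scores": {...}, "author_strikes": int}
Message = dict


@dataclass
class Rule:
    name: str
    condition: Callable[[Message], bool]
    action: str


def first_matching_action(message: Message, rules: list[Rule],
                          default: str = "allow") -> tuple[str, str]:
    """Evaluate rules in order; return (action, rule_name) of the first match."""
    for rule in rules:
        if rule.condition(message):
            return rule.action, rule.name
    return default, "default"


def toxicity(message: Message) -> float:
    return message["scores"].get("toxicity", 0.0)


# Ordered from most to least severe, so stricter rules win.
RULES = [
    Rule("severe_toxicity", lambda m: toxicity(m) >= 0.9, "block"),
    Rule("repeat_offender",
         lambda m: toxicity(m) >= 0.5 and m["author_strikes"] >= 3, "block"),
    Rule("borderline", lambda m: toxicity(m) >= 0.5, "human_review"),
]
```

Because the rules are plain data, a team could reorder them or add new ones without touching the evaluation function, which is the main appeal of this design.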

Auto-moderation must fit into existing systems and scale for millions of users. Content moderation tools like CometChat support text, images, video, and files, offering deep integration with chat infrastructure. Real-time monitoring and auto-moderation help platforms handle large volumes of user-generated content. However, experts note that automated moderation tools alone cannot solve every challenge. At scale, platforms often rely on a mix of auto-moderation and human review. Machine learning models can prioritize content for review, but human judgment remains vital for complex cases. A strong content moderation filter, paired with flexible moderation technology, supports both growth and safety.
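The idea of letting a model prioritize content for human review can be sketched with a simple priority queue. The priority formula below (model uncertainty multiplied by audience size) is an assumption chosen for the example; real systems weigh many more signals.

```python
import heapq
import itertools
from typing import Optional


class ReviewQueue:
    """Surfaces the most uncertain, highest-reach items to reviewers first."""

    def __init__(self) -> None:
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps insertion order

    def add(self, content_id: str, score: float, audience_size: int) -> None:
        # Scores near 0.5 are the ones the model is least sure about.
        uncertainty = 1.0 - abs(score - 0.5) * 2.0
        priority = uncertainty * audience_size
        # heapq is a min-heap, so negate the priority to pop the largest first.
        heapq.heappush(self._heap, (-priority, next(self._counter), content_id))

    def next_item(self) -> Optional[str]:
        """Return the next content ID to review, or None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]
```

With this ordering, a confident decision on a widely seen post can still outrank an uncertain decision on a post almost nobody will see, which reflects how reviewer time is usually rationed at scale.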

Auto-moderation boosts efficiency, but human-machine collaboration ensures the best results for large-scale platforms.


Industry leaders in 2025 include Microsoft Content Moderator, Stream AI Moderation, Unitary Moderation API, and Mistral's moderation API. These AI auto-moderation services stand out for their advanced technology and reliable performance. The best content moderation tools use a mix of keyword filtering, pattern matching, machine learning, and large language models. Many also support multiple languages and content types, such as text, images, and videos. Top providers focus on ethical practices, transparency, and continuous updates to address new threats.

| Service | Languages Supported | Content Types | Custom Policies | Real-Time Moderation | Integration Options |
|---|---|---|---|---|---|
| Microsoft Content Moderator | 7+ | Text, Images | Yes | Yes | Azure, REST API |
| Stream AI Moderation | 50+ | Text, Emojis, GIFs | Yes | Yes | SDKs, REST API |
| Unitary Moderation API | 20+ | Text, Video | Yes | Yes | API, Dashboard |
| Mistral Moderation API | 10+ | Text | Yes | Yes | API |

The best content moderation tools offer real-time detection, flexible integration, and support for many languages. They also allow teams to set custom rules and thresholds.

| Strengths | Weaknesses |
|---|---|
| | May misinterpret sarcasm or cultural context |
| Cost-effective: Reduces need for large human teams | Bias: Training data can affect fairness |
| | Over-blocking or under-blocking possible |
| Customizable: Adapts to platform needs | Language support varies; some tools focus on English |
| Protects human moderators from harmful content | Dependence on rules limits adaptability to new threats |

The best content moderation tools increase speed and coverage but sometimes struggle with context and fairness. Combining AI auto-moderation services with human review improves accuracy.

| Pricing Model | Description | Pros | Cons |
|---|---|---|---|
| Pay-As-You-Go | Pay per API call or item processed | Flexible, no upfront cost | Costs can spike with high usage |
| Subscription Plans | Fixed monthly or annual fee for set usage | Predictable costs, includes support | May pay for unused capacity |
| Tiered Pricing | Volume discounts at higher usage levels | Cost-efficient for growth | Can be hard to estimate total cost |
| Enterprise/Custom | Tailored pricing for large or special needs | Optimized for big platforms | Complex negotiation, possible hidden fees |

Hidden costs such as integration, training, and ongoing support can affect the total cost of ownership. The best content moderation tools offer clear pricing and support to help platforms plan budgets.
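A quick way to compare the pricing models above is to run a platform's expected monthly volume through each one. The functions below are a minimal sketch, and every rate used with them should be replaced by a vendor's actual quote; none of the example numbers reflect real pricing.

```python
def pay_as_you_go_cost(calls: int, price_per_call: float) -> float:
    """Every call is billed at the same rate."""
    return calls * price_per_call


def subscription_cost(calls: int, monthly_fee: float,
                      included_calls: int, overage_per_call: float) -> float:
    """Flat fee covers `included_calls`; anything beyond is billed as overage."""
    extra = max(0, calls - included_calls)
    return monthly_fee + extra * overage_per_call


def tiered_cost(calls: int, tiers: list[tuple[float, float]]) -> float:
    """Tiers are (upper_bound, price_per_call) pairs in ascending order.

    Each price applies only to the calls that fall inside that tier. Use
    float("inf") as the last upper bound so no call goes unbilled.
    """
    total, lower = 0.0, 0
    for upper, price in tiers:
        if calls <= lower:
            break
        total += (min(calls, upper) - lower) * price
        lower = upper
    return total


# Hypothetical monthly volume and rates, for illustration only.
monthly_calls = 2_000_000
usage_total = pay_as_you_go_cost(monthly_calls, 0.001)
plan_total = subscription_cost(monthly_calls, 1500.0, 1_500_000, 0.0015)
tier_total = tiered_cost(monthly_calls,
                         [(1_000_000, 0.001), (float("inf"), 0.0008)])
```

Running the same comparison at several projected volumes shows where the cheapest model changes, which is usually more informative than a single estimate.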

Platforms should compare features, strengths, and pricing to find the best fit for their needs. The right mix of auto-moderation and human oversight ensures safe and fair communities.

Different platforms require unique integration approaches for text moderation APIs. Social media and forums depend on AI-powered natural language processing to detect hate speech, spam, and misinformation. Gaming platforms need both text and visual moderation, especially for custom skins and chat features. E-commerce sites focus on trademark and copyright detection, often combining visual AI with text moderation. Live streaming services demand real-time, low-latency moderation to prevent harmful content from spreading. The table below shows how platform types shape integration needs:

| Platform Type | Integration Requirements and Moderation Needs |
|---|---|
| Social Media | NLP-based moderation for hate speech, spam, and misinformation. |
| Gaming | Text and visual moderation, including custom content and chats. |
| E-commerce | Trademark and copyright detection, visual AI integration. |
| Live Streaming | Real-time, low-latency moderation for immediate threat response. |
| Metaverse | Embedded visual AI for moderating virtual environments. |
| Messaging Apps | Real-time moderation balancing content policies and user freedom. |

Implementing a text moderation API requires several developer tools and resources, such as the SDKs, documentation, and dashboards that vendors provide.

Developers also need to ensure privacy compliance and optimize for scalability and performance.

Leading text moderation APIs offer high levels of customization. Developers can create custom rules, adjust sensitivity settings, and integrate human review processes. Platforms like Stream and CleanSpeak allow teams to define unique workflows, manage user discipline, and set up flagging or banning systems. Customization ensures that moderation aligns with each platform’s policies and risk tolerance. Flexible APIs support both automated decisions and human oversight, adapting to changing community needs.

The cost of integrating a text moderation API varies by platform size and complexity. For a medium-sized platform in 2025, the average cost ranges from $10,000 to $49,000. This includes API integration and ongoing service. Content moderation agencies may charge $50 to $99 per hour for specialized support. Factors such as content volume, response time, and technology infrastructure influence the final price. Careful budgeting helps platforms balance safety, compliance, and operational efficiency.

Selecting the right text moderation API starts with clear priorities. Platforms need tools that match their unique needs and growth plans. The most important features include real-time moderation, multilingual support, customizable policies, and integration that scales.

Platforms that focus on these priorities build safer, more trusted communities.

Strong vendor support makes a big difference. Reliable vendors offer clear documentation, live support, and regular updates. They help teams set up integrations and solve problems quickly. Many vendors provide SDKs and dashboards for easy management. Good support also means fast responses to new threats and compliance changes. Teams should look for vendors with a track record of helping clients scale and adapt.

Modern platforms must plan for growth and change. Scalable moderation APIs use cloud-based infrastructure and modular AI tools. These systems handle more users and new content types without slowing down. Hybrid models combine AI speed with human judgment for tough cases. Real-time feedback and continuous training keep the system up to date with new slang and trends. Audit-friendly tools and compliance reporting help platforms meet global rules like the EU DSA. By tracking key metrics such as latency and accuracy, teams can adjust their strategies and stay ahead of threats. This approach turns moderation into a strategic asset that supports long-term success.
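Tracking latency does not require special tooling to get started. The sketch below times calls to a moderation function and summarizes them; `moderate` stands in for whatever client call a platform actually uses and is an assumption, as are the function names.

```python
import statistics
import time
from typing import Callable


def measure_latencies_ms(moderate: Callable[[str], object],
                         samples: list[str]) -> list[float]:
    """Time each call to `moderate` and return latencies in milliseconds."""
    latencies = []
    for text in samples:
        start = time.perf_counter()
        moderate(text)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarize(latencies_ms: list[float]) -> dict[str, float]:
    """Return mean, median, and an estimated 95th-percentile latency.

    Needs at least two measurements.
    """
    # quantiles(n=20) returns 19 cut points; the last one estimates p95.
    p95 = statistics.quantiles(latencies_ms, n=20)[-1]
    return {
        "mean": statistics.fmean(latencies_ms),
        "p50": statistics.median(latencies_ms),
        "p95": p95,
    }
```

Percentiles matter more than the mean here, because a small share of slow responses is what users of a live chat or stream actually notice.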

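Selecting a text moderation API in 2025 involves several key steps: define the platform’s needs, compare accuracy and features, weigh pricing and integration costs, and confirm vendor support.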

Regularly review moderation strategies and update tools as new threats and regulations emerge. This approach helps platforms stay safe, efficient, and compliant.

A text moderation API scans user-generated content for harmful or inappropriate language. It helps platforms detect and block threats like hate speech, spam, and personal attacks. This tool keeps online communities safe and compliant with regulations.

Real-time moderation uses AI to review content as soon as users submit it. The system flags or removes harmful messages instantly. This process protects users and prevents the spread of dangerous material on the platform.

Multilingual support allows a moderation API to understand and filter content in many languages. This feature helps global platforms protect users everywhere. It also ensures that harmful content does not slip through because of language barriers.

Most text moderation APIs let teams set custom rules and filters. Platforms can adjust sensitivity, create unique workflows, and manage flagged content. This flexibility helps each platform enforce its own guidelines and community standards.

Comprehensive analytics and reporting help teams track moderation results and spot trends. These tools show which types of content get flagged most often. They also help platforms improve their moderation strategies and meet compliance requirements.