
Top 10 AI Content Moderation Solutions for Enterprise Use

Enterprises seeking robust AI content moderation benefit from these top 10 trusted solutions:

| Solution | Why Enterprises Trust Them | Key Features |
|---|---|---|
| DeepCleer | Trusted by leading brands | Patented AI, real-time moderation, learns from humans |
| Pangram Labs | Preferred by trust & safety teams | High accuracy, low false positives, SOC 2 compliance |
| Tech Mahindra | Proven in enterprise case studies | Scalable AI, analyst-backed, digital transformation |

Other leading content moderation tools include Hive Moderation, Concentrix, Besedo, LiveWorld, ActiveFence, Amazon Rekognition, and Azure AI Content Safety. These solutions offer reliable AI-powered content moderation, support user-generated content, address safety concerns, and help maintain brand safety. Enterprises should match solutions to their specific needs and workflows for the best results.

Enterprises rely on content moderation tools that deliver high accuracy. These tools use metrics like precision, recall, and F1 score to measure how well they detect violations in user-generated content. High precision means that most flagged items are real violations, and high recall means that few real violations slip through. Many automated content moderation systems now use large language models, which can outperform human reviewers on some tasks, such as detecting cyberbullying or fake news. Enterprises also consider content quality, including readability and brand voice, to ensure comprehensive content moderation. Using diverse test datasets helps companies confirm that their AI content moderation solution works well in real-world scenarios.
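To make the three metrics concrete, here is a minimal Python sketch that scores a tool's decisions against human-labeled ground truth. The function name `score` and the sample data are hypothetical, chosen only for illustration; a real evaluation would use a much larger and more diverse labeled set.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    precision: float
    recall: float
    f1: float


def score(predicted: list[bool], actual: list[bool]) -> Metrics:
    """Compute precision, recall, and F1 for a binary 'violation' label."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")

    pairs = list(zip(predicted, actual))
    tp = sum(p and a for p, a in pairs)        # flagged, and a real violation
    fp = sum(p and not a for p, a in pairs)    # flagged, but actually benign
    fn = sum(a and not p for p, a in pairs)    # real violation that was missed

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return Metrics(precision, recall, f1)


# Hypothetical sample: True means "violation".
predicted = [True, True, False, True, False, False]   # tool decisions
actual = [True, False, False, True, True, False]      # human labels

m = score(predicted, actual)
print(f"precision={m.precision:.2f} recall={m.recall:.2f} f1={m.f1:.2f}")
# precision=0.67 recall=0.67 f1=0.67
```

In this sample the tool raises one false alarm and misses one real violation, so precision and recall both come out at two thirds.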

Scalability remains a top priority for enterprises handling large volumes of user-generated content. Leading AI content moderation platforms offer real-time moderation, dynamic filtering, and support for multiple content formats like text, images, and video. Cloud-based infrastructure allows these solutions to scale up or down based on content volume. DeepCleer, for example, combines automated content moderation with human review, ensuring that even complex violations get proper attention. This hybrid approach supports effective content moderation across global platforms and helps maintain community management standards.

Tip: Enterprises should look for solutions that provide automated escalation paths for critical violations and can adapt to changing content risks.
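One way to picture a hybrid pipeline with an escalation path is a small routing function. The sketch below assumes the moderation model returns a category and a confidence score between 0 and 1. The category names, threshold values, and the `route` function are all hypothetical and do not reflect any specific vendor's API.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"
    ESCALATE = "escalate"


# Hypothetical categories that should always reach a specialist team.
CRITICAL_CATEGORIES = {"child_safety", "credible_threat"}


@dataclass(frozen=True)
class Thresholds:
    review: float = 0.40   # at or above this score, a human takes a look
    remove: float = 0.90   # at or above this score, remove automatically


def route(category: str, score: float, t: Thresholds = Thresholds()) -> Action:
    """Decide what happens to one piece of content."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    # Critical categories skip the normal queue once they are plausible.
    if category in CRITICAL_CATEGORIES and score >= t.review:
        return Action.ESCALATE
    if score >= t.remove:
        return Action.REMOVE
    if score >= t.review:
        return Action.HUMAN_REVIEW
    return Action.APPROVE


print(route("spam", 0.95))             # Action.REMOVE
print(route("spam", 0.55))             # Action.HUMAN_REVIEW
print(route("credible_threat", 0.55))  # Action.ESCALATE
```

Keeping the thresholds in one small object makes it easier to tighten or relax them as content risks change, without touching the routing logic.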

Compliance with regulations such as GDPR, CCPA, and COPPA is essential for enterprise content moderation tools. These laws require strict data privacy, secure handling of user information, and clear consent management. Enterprises often need to comply with additional standards like HIPAA or PCI DSS, depending on their industry. AI content moderation systems must integrate privacy-by-design principles, encryption, and audit readiness to build trust and avoid legal violations.
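As a small illustration of privacy-by-design and audit readiness, the sketch below writes an audit record that stores a keyed hash of the user ID instead of the raw identifier. The field names and the `audit_record` helper are assumptions made for this example, not a required schema. Pseudonymized data can still count as personal data under GDPR, so this is one building block rather than a compliance solution.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 hash so logs never hold the raw user ID."""
    return hmac.new(secret_key, user_id.encode("utf-8"),
                    hashlib.sha256).hexdigest()


def audit_record(user_id: str, content_id: str, decision: str,
                 policy_version: str, secret_key: bytes) -> str:
    """Build one JSON audit line for a moderation decision."""
    record = {
        "user_ref": pseudonymize(user_id, secret_key),
        "content_id": content_id,
        "decision": decision,
        "policy_version": policy_version,  # which rules were in force
        "decided_at": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(record, sort_keys=True)


# Hypothetical values; in practice the key comes from a secrets manager.
line = audit_record("user-123", "post-9", "remove", "2024-01", b"demo-key")
print(line)
```

Recording the policy version alongside each decision lets a reviewer later reconstruct which rules applied when the content was actioned.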

Top AI content moderation solutions offer seamless integration with enterprise platforms. Many provide APIs that support multimodal analysis, real-time processing, and customizable policies. For example, some tools analyze text, images, video, and audio, while others allow for tailored workflows and multilingual support. Integration with user reporting systems and existing business tools ensures that violations are detected and managed efficiently.

Customization allows enterprises to align AI content moderation with their unique policies and workflows. Many platforms offer drag-and-drop workflow builders, pre-built templates, and support for custom-trained AI models. These features enable companies to adjust detection sensitivity, create instant feedback loops, and continuously improve moderation accuracy. Seamless integration with CRMs and project management tools ensures that automated content moderation fits into existing operations and addresses specific violations.
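Adjusting detection sensitivity usually comes down to choosing a score threshold. The sketch below assumes a labeled pilot set of model scores and picks the lowest candidate threshold that still meets a precision target, which keeps recall as high as possible. The function names, scores, and candidate values are hypothetical.

```python
from typing import Optional


def precision_recall_at(scores: list[float], labels: list[bool],
                        threshold: float) -> tuple[float, float]:
    """Precision and recall when everything scoring >= threshold is flagged."""
    flagged = [s >= threshold for s in scores]
    pairs = list(zip(flagged, labels))
    tp = sum(f and l for f, l in pairs)
    fp = sum(f and not l for f, l in pairs)
    fn = sum(l and not f for f, l in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def pick_threshold(scores: list[float], labels: list[bool],
                   min_precision: float,
                   candidates: list[float]) -> Optional[float]:
    """Return the lowest candidate that meets the precision target.

    Recall never increases as the threshold rises, so the lowest
    qualifying threshold also gives the best recall among the candidates.
    """
    for t in sorted(candidates):
        precision, _ = precision_recall_at(scores, labels, t)
        if precision >= min_precision:
            return t
    return None  # no candidate is precise enough


# Hypothetical pilot data: model score and human label per item.
scores = [0.95, 0.80, 0.65, 0.55, 0.30, 0.10]
labels = [True, True, False, True, False, False]
candidates = [0.2, 0.5, 0.6, 0.7, 0.9]

print(pick_threshold(scores, labels, 0.90, candidates))  # 0.7
print(pick_threshold(scores, labels, 0.70, candidates))  # 0.5
```

A stricter precision target pushes the threshold up and lets more borderline content through unflagged, which is the trade-off a sensitivity setting exposes.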

Hybrid AI-human moderation enhances decision quality and trust. Studies show that combining human judgment with AI tools leads to better outcomes, especially for complex or nuanced violations. This approach helps enterprises achieve effective content moderation and maintain high standards for user-generated content.

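Enterprises can choose from these top 10 AI content moderation solutions for effective protection and policy enforcement: DeepCleer, Pangram Labs, Tech Mahindra, Hive Moderation, Concentrix, Besedo, LiveWorld, ActiveFence, Amazon Rekognition, and Azure AI Content Safety.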

Each of these solutions offers advanced AI tools for detecting violations, catching spam and scams, and filtering explicit content. Many platforms combine automated content moderation with human review, ensuring accurate decisions for complex violations.

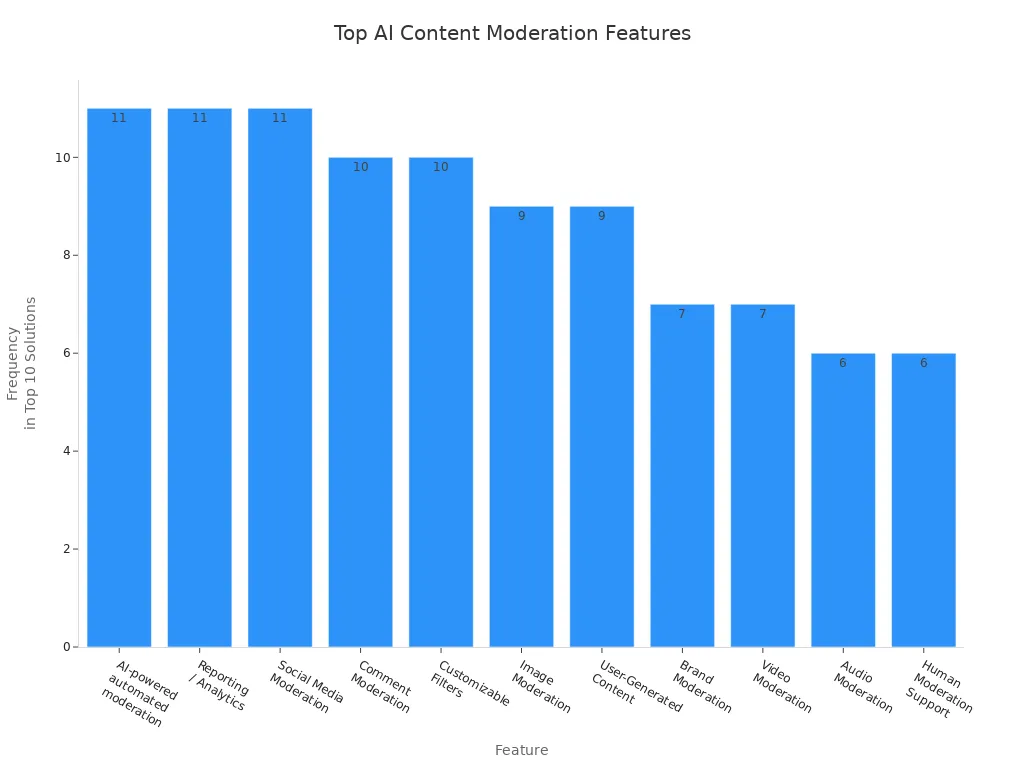

Most leading content moderation tools share several important features:

TOP AI Content Moderation Features

Many solutions now support real-time analysis of user-generated content. Some, such as DeepCleer, use proactive threat detection to stop violations before they spread and offer multimedia detection for images and videos. These features help enterprises fight hate speech, manage violations, and protect their communities.

Enterprises benefit from AI-powered content moderation that adapts to new risks. Automated content moderation tools can scale to handle millions of posts, while human moderators review edge cases. This hybrid approach helps ensure that violations, including those in audio or video, do not go unnoticed.

DeepCleer offers a unique blend of AI and human expertise for content moderation. The platform tailors moderation strategies to each client’s brand values and community standards. DeepCleer scales with business growth and supports multiple languages, ensuring relevance in global markets. The company adheres to international regulations, including the Digital Services Act, which helps enterprises maintain compliance and user trust.

| Unique Strength | Description |
|---|---|
| AI and Human Expertise | Combines advanced AI with trained human moderators for balanced efficiency and context sensitivity |
| Tailored Solutions | Customizes moderation strategies to align with brand values and community standards |
| Scalability | Services scale with business growth, managing increasing content volumes without quality loss |
| Multilingual Support | Supports multiple languages for global compliance |
| Regulatory Compliance | Adheres to international regulations, ensuring legal compliance and user trust |

DeepCleer works best for enterprises that need flexible, scalable moderation with a strong focus on compliance and cultural sensitivity.

Enterprises should begin by evaluating their unique requirements before selecting an AI content moderation solution. A structured approach helps ensure the chosen tool aligns with business goals and community management standards. Consider the following steps:

This process ensures that enterprises select a solution capable of handling their specific content types, compliance needs, and user base.

After identifying requirements, enterprises should follow best practices for trialing and integrating AI content moderation tools. A careful rollout reduces risk and maximizes value:

Tip: Request demos from vendors and consult with their experts to understand how each solution fits your workflows. Pilot testing with real data helps reveal strengths and limitations before full deployment.

By following these steps, enterprises can confidently select and implement an AI content moderation solution that supports their goals and adapts to future challenges.

Selecting a trusted AI content moderation solution helps enterprises protect their platforms and users. Companies should focus on accuracy, scalability, and compliance when reviewing options. Hybrid AI-human approaches often deliver the best results for complex content.

Ready to take the next step? Contact vendors or request a demo to see which tool fits your enterprise best.

AI content moderation uses artificial intelligence to review and filter user-generated content. The system detects harmful, offensive, or inappropriate material. Enterprises use these tools to keep their platforms safe and compliant.

Hybrid moderation combines AI automation with human judgment. AI handles most content quickly. Human moderators review complex or unclear cases. This approach improves accuracy and reduces errors.

Most leading solutions support many languages. They use advanced models to detect violations in text, images, and videos across different regions. Enterprises should check language support before choosing a tool.

Top providers design their systems to meet privacy laws like GDPR and CCPA. They use encryption, secure data storage, and clear consent processes. Enterprises must review compliance features before deployment.

Enterprises can request demos or pilot programs from vendors. They should use real data to test accuracy and integration. Feedback from these trials helps select the best solution.

Tip: Always involve IT and legal teams when evaluating new moderation tools.