
User-Generated Content Moderation Essentials for 2025

You face a landscape where user-generated content (UGC) shapes every platform in 2025. The volume of UGC has exploded, with the market reaching $7.6 billion and platforms like YouTube, Instagram, and TikTok leading in engagement. New content types, such as short-form video, AR, and podcasts, bring both creativity and complexity. UGC now ranges from bite-sized posts to interactive graphics, which makes moderation essential. You must balance technology, clear rules, and human oversight to keep your community safe and your platform trusted.

You encounter a wide range of user-generated content every day. On social media, you see photos, videos, and text updates from creators and everyday users. Many people share customer reviews and testimonials on e-commerce sites, giving honest feedback about products and services. You also find blog posts, articles, and detailed case studies written by content creators who want to share their experiences or insights. In online forums and communities, users discuss topics, ask questions, and leave comments that help others. Live streams and UGC videos, such as unboxing videos, tutorials, and product demos, appear on platforms like YouTube and TikTok. Some users even create ads that brands use in their campaigns.

You find these types of UGC on many platforms, including Instagram, Facebook, LinkedIn, and review sites like Yelp and Trustpilot. UGC platforms make it easy for you to share your voice and connect with others.

User-generated content shapes how you trust and engage with a platform. When you read customer reviews or see real users sharing their stories, you feel more confident about your choices. Studies show that 92% of consumers trust UGC more than traditional ads. Social campaigns with user-generated content see up to 50% higher engagement rates. Brands that feature authentic content from users build stronger communities and keep users coming back. When you share your experiences, you help create a sense of belonging. UGC also boosts platform retention, with 66% of branded communities reporting a positive impact. When you see credible and useful content, your trust in the platform grows, and that trust leads to more activity and loyalty.

Tip: Encourage your community to share customer reviews and stories. Authentic UGC increases engagement and helps your platform stand out.

You must understand the legal landscape before you moderate user-generated content. In 2025, global regulations set strict standards for digital platforms. These laws protect users and hold you accountable for the content on your site. The table below highlights the most important regulations you need to know:

| Regulation / Jurisdiction | Key Provisions Affecting User-Generated Content Moderation | Enforcement & Penalties | Notable Dates / Developments |
|---|---|---|---|
| EU Digital Services Act (DSA) | Duties for digital service providers, transparency reporting, risk assessments and annual independent audits for very large platforms, and advertising transparency. | National Digital Services Coordinators and the European Commission enforce. Fines up to 6% of global annual turnover. | Entered into force Nov 2022; fully applicable Feb 2024. New transparency reporting rules July 2025. |
| UK Online Safety Act (OSA) | Duty of care for illegal and harmful content, age verification, algorithmic transparency. | Ofcom enforces. Fines up to £18 million or 10% of qualifying worldwide revenue, whichever is greater. | Illegal-content duties enforceable from March 2025. Child protection codes July 2025. |
| UK Crime and Policing Bill | Platforms must remove flagged illegal content within 48 hours. | Civil penalties for companies and managers. | Introduced Feb 2025. In review. |
| UK Digital Markets, Competition and Consumers Act (DMCCA) | Regulates large digital firms with strategic market status and bans unfair practices such as fake reviews. | Fines up to 10% of global turnover. | Digital markets regime effective Jan 2025. |
| U.S. Section 230 & State Laws | Immunity for third-party content, FTC oversight, state privacy and safety laws. | FTC and state enforcement. | FTC inquiry Feb 2025. New visa rules May 2025. |
| Other Jurisdictions | Expanding content regulation, focus on online harms and transparency. | Extraterritorial enforcement. | Ongoing changes. |

Note: You must stay updated on these laws. Non-compliance can lead to heavy fines and business disruption.
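Time-bound obligations, like the 48-hour removal requirement listed for the UK Crime and Policing Bill, are easiest to meet when you track each notice against its deadline. The sketch below is a minimal illustration, assuming a flat 48-hour window counted from the moment a notice arrives; the `RemovalNotice` type and `overdue_ids` helper are hypothetical, and the Bill's final wording may set different timing rules.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional

# Assumption: a flat 48-hour window starting when the notice is received.
REMOVAL_WINDOW = timedelta(hours=48)


@dataclass
class RemovalNotice:
    content_id: str
    received_at: datetime                   # when the notice reached you (UTC)
    removed_at: Optional[datetime] = None   # None while the content is still live


def overdue_ids(notices: List[RemovalNotice], now: datetime) -> List[str]:
    """IDs of items still live past their deadline, or removed after it."""
    late = []
    for notice in notices:
        deadline = notice.received_at + REMOVAL_WINDOW
        finished = notice.removed_at if notice.removed_at is not None else now
        if finished > deadline:
            late.append(notice.content_id)
    return late


now = datetime(2025, 6, 3, 12, 0, tzinfo=timezone.utc)
notices = [
    RemovalNotice("a", now - timedelta(hours=50)),  # still live after 50 hours
    RemovalNotice("b", now - timedelta(hours=10)),  # still live, inside the window
    RemovalNotice("c", now - timedelta(hours=60),
                  removed_at=now - timedelta(hours=20)),  # removed after 40 hours
]
print(overdue_ids(notices, now))  # ['a']
```

Running a check like this on a schedule lets you escalate items well before they breach the window, rather than discovering breaches afterwards.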

You handle sensitive data every time you moderate user-generated content. Privacy laws require you to protect users’ personal information and respect their rights. Key concerns and best practices include:

These laws help you balance user safety, privacy, and freedom of expression. When you follow them, you build trust and keep your platform safe for everyone.

You need strong community guidelines to keep your platform safe and welcoming. Start by understanding your audience and the risks they face. Tailor your UGC moderation guidelines to fit your users and the types of content they share. Clear rules help you build trust and set expectations for everyone.

When you set clear rules, you cultivate trust and encourage positive behavior.

You must make your community guidelines easy to find and understand. Use simple words and give real examples. This helps users follow the rules and keeps your platform focused on trust and safety.

Open communication helps you build trust and keep your community safe.

You need strong tools to keep up with the massive volume of UGC on your platform. AI and automation help you scale content moderation quickly and efficiently. These systems use natural language processing and image recognition to scan text, images, and videos for harmful or inappropriate content. AI-powered moderation can filter millions of posts in real time, making it possible to handle the growing complexity of user-generated content.

You should evaluate AI-powered tools by checking their accuracy, transparency, and ability to adapt to new threats. Look for solutions that offer continuous updates and allow you to fine-tune moderation rules. Make sure your tools support multilingual content and can scale as your user base grows. Automated moderation works best when you combine it with human oversight to catch errors and reduce bias.

Tip: Choose AI moderation tools that provide clear explanations for flagged content and allow users to appeal decisions. This builds trust and helps you avoid over-enforcement.

| Challenge | Description and Impact |
|---|---|
| Context Understanding | AI struggles with sarcasm, humor, and cultural references, leading to misinterpretation of content. |
| Language Nuances | Rapidly evolving idioms, slang, and multilingual content reduce AI accuracy by up to 30% in some cases. |
| Cultural Sensitivities | AI risks over-censorship or under-censorship due to difficulty in recognizing cultural differences. |
| False Positives/Negatives | AI often misclassifies content, causing wrongful removals or missed harmful content, affecting trust. |

You must remember that AI moderation tools are not perfect. They can misinterpret jokes, slang, or cultural references. False positives and negatives are common, so you need human moderators to review difficult cases. AI systems also need regular updates to keep up with new language trends and threats.
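To see how often your AI tool produces false positives and negatives, you can have human moderators label a random sample of its decisions and compare the two. A minimal sketch, assuming each sampled item is recorded as a pair of booleans (the AI flagged it, a human judged it harmful) and that the human label is treated as ground truth; the function name and sample data are hypothetical.

```python
from typing import Dict, List, Tuple

# Each pair is (ai_flagged, human_judged_harmful) for one randomly sampled item.
# Treating the human label as ground truth is itself an assumption.
Sample = List[Tuple[bool, bool]]


def error_rates(sample: Sample) -> Dict[str, float]:
    tp = sum(1 for ai, human in sample if ai and human)
    fp = sum(1 for ai, human in sample if ai and not human)   # wrongful flags
    fn = sum(1 for ai, human in sample if not ai and human)   # missed harm
    tn = sum(1 for ai, human in sample if not ai and not human)
    return {
        # Of everything the AI flagged, how much was really harmful?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of everything harmful, how much did the AI catch?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        # Of acceptable posts, how many were wrongly flagged?
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }


# Hypothetical audit of 100 items.
sample = [(True, True)] * 8 + [(True, False)] * 2 + [(False, True)] * 2 + [(False, False)] * 88
print({k: round(v, 3) for k, v in error_rates(sample).items()})
# {'precision': 0.8, 'recall': 0.8, 'false_positive_rate': 0.022}
```

Tracking these numbers separately per language or content type shows where the nuance problems in the table are hurting you most.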

Human moderators play a vital role in UGC moderation. You rely on them to handle complex, nuanced, or context-dependent cases that AI cannot resolve. Human review ensures fairness, accuracy, and cultural sensitivity when you moderate user-generated content.

You should invest in training and supporting your moderation team. Provide mental health resources to help them cope with the psychological toll of reviewing harmful content. Regular training keeps your team updated on new risks and best practices. You also need clear guidelines so human moderators can make consistent decisions.

Note: Human review is essential for appeals and for correcting mistakes made by automated moderation. This helps you build a fair and inclusive community.

Real-time filtering tools help you block harmful UGC before it reaches your community. These tools use AI and machine learning to scan content instantly, flagging or removing posts that break your rules. Real-time filtering is a key part of proactive content moderation, letting you stop threats before they spread.

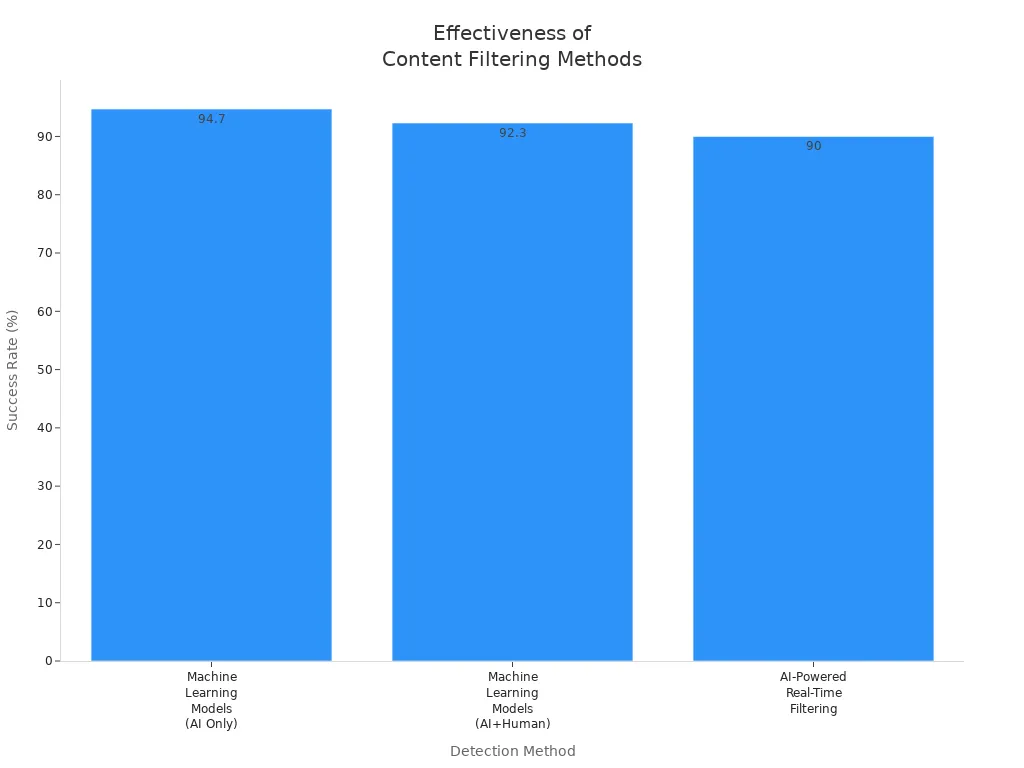

| Detection Method | Description | Human Involvement | Success Rate / Effectiveness |
|---|---|---|---|
| Hash Matching | Compares digital fingerprints of content against a database of known harmful material. | Human review for uncertain cases | Blocks matched content automatically. |
| Keyword Filtering | Flags text using keywords linked to harmful content. | Human review for flagged content | Reduces moderator workload. |
| Machine Learning Models | Classifies content as valid or harmful using labeled data. | Humans review uncertain cases | 94.7% hate speech detected; 25% human moderation increases valid detection to 92.3%. |
| AI-Powered Real-Time Filtering | Analyzes content before posting using pattern recognition and NLP. | Humans handle borderline cases | Blocks about 90% of harmful content instantly. |

Effectiveness of Content Filtering Methods
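The first two rows of the table can be combined into a simple pre-publication check: hash matches are blocked outright, while keyword hits go to a human. A minimal sketch, assuming a set of known-harmful file digests and a keyword blocklist (both hypothetical placeholders). It uses SHA-256, which only matches byte-identical copies; production systems typically use perceptual hashes such as PhotoDNA or PDQ, which still match after resizing or re-encoding.

```python
import hashlib
import re
from typing import Optional

# Hypothetical database of SHA-256 digests of files already confirmed harmful.
KNOWN_HARMFUL_HASHES = {hashlib.sha256(b"known-bad-file-bytes").hexdigest()}

# Hypothetical blocklist; real lists are larger and maintained per language.
BLOCKED_TERMS = ["scamlink", "buy followers"]
KEYWORD_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(term) for term in BLOCKED_TERMS) + r")\b",
    re.IGNORECASE,
)


def screen(text: str, attachment: Optional[bytes] = None) -> str:
    """Return 'block', 'review', or 'allow' for one submission."""
    # 1. Hash matching: compare the attachment's fingerprint with known material.
    if attachment is not None:
        if hashlib.sha256(attachment).hexdigest() in KNOWN_HARMFUL_HASHES:
            return "block"  # confirmed match: block automatically
    # 2. Keyword filtering: a hit only queues the post for a human, because
    #    keywords alone miss context (quotes, news reporting, reclaimed slang).
    if KEYWORD_PATTERN.search(text):
        return "review"
    return "allow"


print(screen("Great product, fast shipping!"))         # allow
print(screen("Click my SCAMLINK now"))                  # review
print(screen("Look at this", b"known-bad-file-bytes"))  # block
```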

You should look for real-time filtering tools that offer malware detection, as harmful files can spread quickly through UGC. Choose solutions that support multiple languages and can adapt to your platform’s size. Scalability matters, so pick tools that grow with your user base and handle traffic spikes without slowing down.

Tip: Test real-time filtering tools with your own UGC before full deployment. This helps you spot gaps and adjust settings for better accuracy.

You need a balanced approach to moderating user-generated content. Combine AI and automation for speed and scale, but always include human review for fairness and context. Choose tools that offer malware detection, multilingual support, and scalability to protect your platform and community.

You need strong reporting features to keep your platform safe. When you give users easy ways to report harmful or inappropriate content, you empower your community to help with moderation. Most platforms use clear buttons or links next to posts, comments, or messages. Keep the reporting process simple and quick so that users never feel confused or overwhelmed.

Tip: Regularly review your reporting system. Ask users for feedback to improve the process and address new risks.

A good reporting system helps you spot problems early. It also builds trust because users see that you care about their safety.
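Behind the report button, you need a way to collapse duplicate reports and decide what moderators see first. A minimal sketch, assuming each report carries a reason code; the severity weights, reason names, and `ReportQueue` class are hypothetical.

```python
import heapq
from collections import defaultdict
from typing import Dict, List, Set

# Hypothetical severity weights per report reason; set these from your own policy.
SEVERITY = {"child_safety": 100, "violence": 50, "hate": 30, "spam": 5}
DEFAULT_SEVERITY = 1  # unknown reason codes still get reviewed, just later


class ReportQueue:
    """Collapses duplicate reports per item and orders review by priority."""

    def __init__(self) -> None:
        self.reporters: Dict[str, Set[str]] = defaultdict(set)
        self.worst_reason: Dict[str, str] = {}

    @staticmethod
    def _severity(reason: str) -> int:
        return SEVERITY.get(reason, DEFAULT_SEVERITY)

    def add(self, content_id: str, reporter_id: str, reason: str) -> None:
        # A set means the same user reporting twice only counts once.
        self.reporters[content_id].add(reporter_id)
        current = self.worst_reason.get(content_id)
        if current is None or self._severity(reason) > self._severity(current):
            self.worst_reason[content_id] = reason

    def priority(self, content_id: str) -> int:
        # Severity dominates; each extra unique reporter adds a little urgency.
        return self._severity(self.worst_reason[content_id]) + len(self.reporters[content_id])

    def next_batch(self, n: int) -> List[str]:
        return heapq.nlargest(n, self.reporters, key=self.priority)


queue = ReportQueue()
queue.add("post1", "u1", "spam")
queue.add("post1", "u2", "spam")
queue.add("post1", "u2", "spam")      # duplicate from the same user, ignored
queue.add("post2", "u3", "violence")
queue.add("post3", "u4", "spam")
print(queue.next_batch(2))  # ['post2', 'post1']
```

Keeping severity dominant stops a coordinated flood of low-severity reports from pushing a single serious report to the back of the queue.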

You must offer a fair appeals process for users who disagree with moderation decisions. Appeals give users a voice and help you correct mistakes. A strong appeals process shows your commitment to fairness and transparency.

Note: A transparent appeals process not only meets legal standards but also strengthens your community’s trust in your platform.
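An appeals process is easier to keep fair and auditable when each appeal moves through a fixed set of states and every change is logged. A minimal sketch, assuming four hypothetical states and a rule that someone other than the original moderator decides the appeal; both are one reasonable design, not requirements stated here.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Allowed status changes; anything else is rejected.
TRANSITIONS = {
    "submitted": {"in_review"},
    "in_review": {"upheld", "overturned"},
    "upheld": set(),      # original decision stands (final)
    "overturned": set(),  # content restored (final)
}


@dataclass
class Appeal:
    content_id: str
    original_moderator: str
    status: str = "submitted"
    history: List[Tuple[str, str, str, str]] = field(default_factory=list)

    def move(self, new_status: str, reviewer: str, reason: str) -> None:
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot go from {self.status} to {new_status}")
        # Assumption: someone other than the original moderator decides the appeal.
        if reviewer == self.original_moderator:
            raise PermissionError("appeal must be reviewed by a different moderator")
        # Keep an audit trail: from, to, who, and the reason shown to the user.
        self.history.append((self.status, new_status, reviewer, reason))
        self.status = new_status


appeal = Appeal("post42", original_moderator="mod_a")
appeal.move("in_review", reviewer="mod_b", reason="picked up")
appeal.move("overturned", reviewer="mod_b", reason="satire, not harassment")
print(appeal.status)  # overturned
```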

You need regular moderation audits to keep your platform safe and fair. Audits help you check if your content moderation matches your policies and supports trust and safety. A strong audit uses a clear process:

When you follow these steps, you make your moderation stronger and show your commitment to safety.

Regular audits help you spot gaps in your system and protect your community from harm.
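A practical core for such an audit is re-reviewing a random sample of past decisions and measuring how often a second reviewer agrees with the first. A minimal sketch, assuming decisions are stored as records with an action label; the helper names and sample log are hypothetical, and uniform sampling is one choice (sampling separately within each content category is an alternative).

```python
import random
from typing import Dict, List, Optional


def audit_sample(decisions: List[Dict], k: int, seed: Optional[int] = None) -> List[Dict]:
    """Draw k past decisions uniformly at random for a second, independent review."""
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible for auditors
    return rng.sample(decisions, k)


def agreement_rate(original: List[str], second_review: List[str]) -> float:
    """Share of audited items where the auditor reached the same action."""
    if len(original) != len(second_review) or not original:
        raise ValueError("need two non-empty lists of equal length")
    matches = sum(1 for a, b in zip(original, second_review) if a == b)
    return matches / len(original)


# Hypothetical log of 30 past decisions.
decisions = [{"id": i, "action": "remove" if i % 3 == 0 else "keep"} for i in range(30)]
batch = audit_sample(decisions, 4, seed=7)
original_actions = [d["action"] for d in batch]
# Suppose the auditor disagreed on exactly one item (illustrative only).
flipped = "keep" if original_actions[-1] == "remove" else "remove"
auditor_actions = original_actions[:-1] + [flipped]
print(agreement_rate(original_actions, auditor_actions))  # 0.75
```

A falling agreement rate is an early signal that guidelines are ambiguous or that training has drifted.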

You build trust and safety by sharing clear transparency reports with your users. These reports show your actions and help users understand your moderation process.

When you publish transparency reports, you invite your community to join you in keeping the platform safe. This openness builds trust and safety for everyone.
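A transparency report is, at its core, an aggregation over your moderation log. A minimal sketch, assuming each logged action records a policy category, the action taken, and whether a human or an automated system decided; the field names and log entries are hypothetical, and any legally mandated report (for example under the DSA) defines its own required fields.

```python
from collections import Counter
from typing import Dict, List


def summarize(log: List[Dict[str, str]]) -> Dict[str, Counter]:
    """Aggregate a moderation log into counts a transparency report can publish."""
    return {
        # How many actions of each kind, per policy category.
        "by_category_and_action": Counter((e["category"], e["action"]) for e in log),
        # Whether a person or an automated system made the call.
        "by_decision_source": Counter(e["source"] for e in log),
    }


# Hypothetical log entries; a real log would also carry dates and regions.
log = [
    {"category": "spam", "action": "removed", "source": "automated"},
    {"category": "spam", "action": "removed", "source": "automated"},
    {"category": "hate", "action": "removed", "source": "human"},
    {"category": "hate", "action": "restored_on_appeal", "source": "human"},
]
summary = summarize(log)
for (category, action), count in sorted(summary["by_category_and_action"].items()):
    print(f"{category:<6} {action:<20} {count}")
```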

You face unique challenges as a content moderator. The constant flow of user-generated content can feel overwhelming. Burnout often results from several factors:

You can reduce burnout by setting clear boundaries and taking regular breaks. Automated tools and chatbots help by filtering out simple cases, so you can focus on complex decisions. Monitoring software can track stress levels and alert managers when you need support. You should encourage open communication with your team and seek help when you feel overwhelmed.

Tip: Normalize conversations about mental health in your workplace. Regular check-ins and team bonding activities help you feel connected and supported.
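One concrete way to limit the psychological toll is to cap how much graphic content any one moderator receives per shift when work is assigned. A minimal sketch, assuming items are already labeled graphic or not by an upstream classifier; the `Moderator` type, `assign` function, and default cap of 20 are hypothetical, and the right cap is a decision for your wellbeing and operations leads.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Moderator:
    name: str
    graphic_seen: int = 0  # graphic items handled this shift
    total_seen: int = 0    # all items handled this shift


def assign(item_is_graphic: bool, team: List[Moderator], cap: int = 20) -> Optional[Moderator]:
    """Give the item to the least-loaded moderator still under the graphic-content cap."""
    eligible = [m for m in team if not item_is_graphic or m.graphic_seen < cap]
    if not eligible:
        return None  # nobody can take more graphic content: escalate or defer
    if item_is_graphic:
        # Spread graphic items evenly first, then balance overall volume.
        chosen = min(eligible, key=lambda m: (m.graphic_seen, m.total_seen))
    else:
        chosen = min(eligible, key=lambda m: m.total_seen)
    chosen.total_seen += 1
    if item_is_graphic:
        chosen.graphic_seen += 1
    return chosen


team = [Moderator("ana"), Moderator("ben")]
picks = [assign(True, team, cap=1) for _ in range(3)]
print([p.name if p else None for p in picks])  # ['ana', 'ben', None]
```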

You need strong training and support programs to thrive as a moderator. Resilience training builds your self-awareness and adaptability. Workshops, role-playing, and stress-reduction exercises help you manage stress. Mindfulness practices, such as meditation and muscle relaxation, can lower anxiety and improve your emotional well-being.

Comprehensive training should cover:

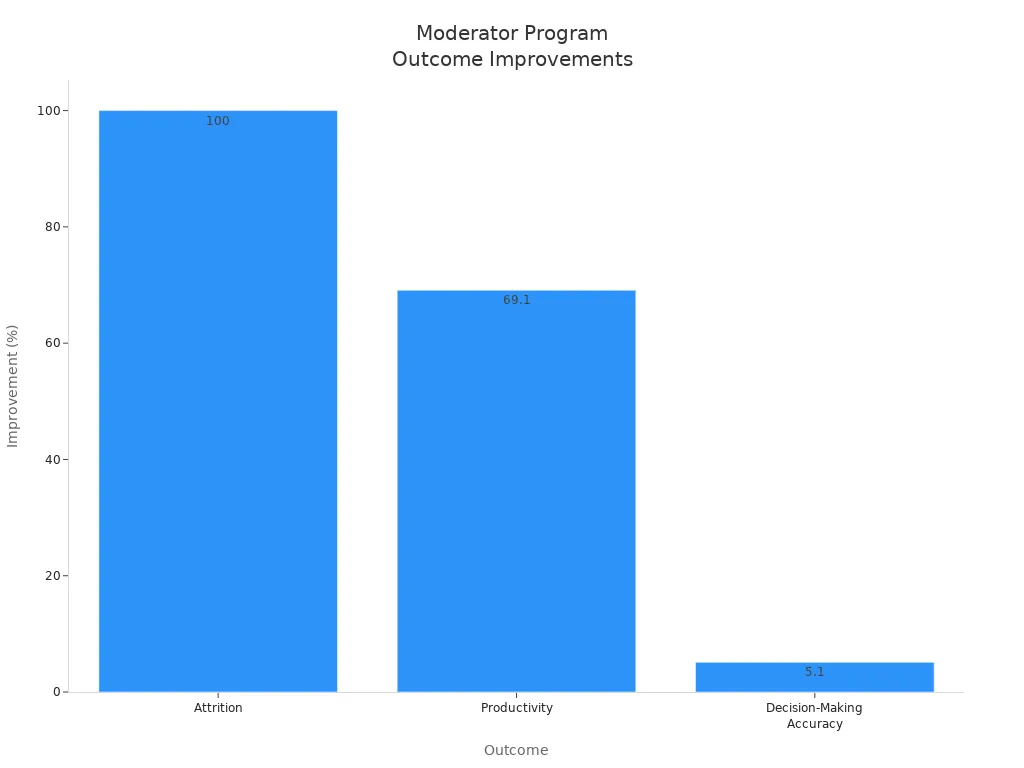

| Program Component | Description |
|---|---|
| Mental Health | Recurring surveys and extra breaks for those exposed to explicit content. |
| Training & Upskilling | Trauma-informed activities delivered daily, weekly, and monthly. |
| Leadership Support | Leaders foster a culture of care and encourage participation without stigma. |

You benefit from a supportive environment. Programs that combine mental health support, training, and leadership involvement reduce attrition, boost productivity, and improve decision-making accuracy.

Moderator Program Outcome Improvements

Note: Investing in your well-being leads to a safer, more effective moderation team and a healthier online community.

You face a fast-changing world of UGC. Every day, your platform receives thousands of pieces of user-generated content. AI tools help you scan and flag content quickly. These systems analyze huge amounts of data and spot patterns that humans might miss. You can use AI to filter out spam, detect hate speech, and block harmful images in real time. This speed keeps your community safe and helps you manage the growing volume of UGC.

However, AI cannot understand every situation. It struggles with sarcasm, cultural references, and sensitive topics. You need human moderators to review flagged content and make final decisions. Human reviewers bring empathy and context. They can judge intent and handle appeals when users disagree with a decision. This teamwork between AI and people forms the core of a strong user-generated content strategy.

You benefit from tools that show both the original and AI-processed content side by side. These tools give you policy snippets and explainability overlays. You see confidence scores that help you decide whether a piece of content breaks the rules. When you feed human decisions back into the system, your AI models get smarter and adapt to new trends in user-generated content. You also protect your moderators by setting time limits, blurring graphic images, and offering support. This approach keeps your team healthy and your decisions fair.
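Confidence scores become useful once you decide what each score range triggers and where human verdicts are stored. A minimal sketch, assuming your classifier returns a harm probability between 0 and 1; the threshold values, `route`, and `record_human_decision` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical thresholds; tune them against labeled data from your own platform.
AUTO_REMOVE_AT = 0.95
AUTO_ALLOW_BELOW = 0.20

# (content_id, model_score, human_says_harmful) rows collected for retraining.
feedback_log: List[Tuple[str, float, bool]] = []


def route(score: float) -> str:
    """Map a classifier's harm probability (0 to 1) to a next step."""
    if score >= AUTO_REMOVE_AT:
        return "auto_remove"
    if score < AUTO_ALLOW_BELOW:
        return "auto_allow"
    return "human_review"  # the uncertain middle band always goes to a person


def record_human_decision(content_id: str, score: float, is_harmful: bool) -> None:
    """Keep the moderator's verdict so the model can later be retrained or recalibrated."""
    feedback_log.append((content_id, score, is_harmful))


for cid, score in [("p1", 0.99), ("p2", 0.55), ("p3", 0.05)]:
    print(cid, route(score))
# p1 auto_remove
# p2 human_review
# p3 auto_allow
record_human_decision("p2", 0.55, is_harmful=False)
```

Widening the middle band sends more items to people (fewer automated mistakes, more workload); narrowing it does the opposite.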

Tip: Use AI for routine UGC moderation, but always let humans handle complex or sensitive cases. This balance leads to faster, more accurate, and fairer content moderation.

You build trust when your users see that both technology and people work together to keep the platform safe. This dynamic duo helps you maintain platform integrity and user trust, even as UGC evolves.

You want every user to feel treated fairly when you moderate user-generated content. Consistency builds trust and keeps your community engaged. When you apply the same rules to everyone, users know what to expect. They see your platform as a safe place to share UGC and connect with others.

Research shows that users accept moderation decisions more when you explain your actions clearly. If you remove content, tell users why. Give examples and show how the decision fits your guidelines. This transparency helps users understand your process and builds trust in your platform.
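Explanations stay consistent when they are generated from the same rule text moderators enforce, so every user gets the rule, the specific passage that triggered it, and a route to appeal. A minimal sketch; the rule IDs, wording, and appeal URL are hypothetical placeholders.

```python
from typing import Dict, Tuple

# Hypothetical excerpt of community guidelines, keyed by rule ID.
GUIDELINES: Dict[str, Tuple[str, str]] = {
    "H1": ("Harassment", "Do not target individuals with insults or threats."),
    "S2": ("Spam", "Do not post repetitive promotional links."),
}


def removal_notice(username: str, rule_id: str, excerpt: str, appeal_url: str) -> str:
    """Build a user-facing explanation that ties a removal to one specific rule."""
    title, rule_text = GUIDELINES[rule_id]
    return (
        f"Hi {username}, we removed your post because it broke our {title} rule ({rule_id}).\n"
        f'Rule: "{rule_text}"\n'
        f'The part that applied: "{excerpt}"\n'
        f"If you think we got this wrong, you can appeal: {appeal_url}"
    )


print(removal_notice("sam", "S2", "Cheap followers here!", "https://example.com/appeals/123"))
```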

To ensure fairness, you need to:

You must also recognize that different cultures see content in different ways. A meme or joke may be fine in one country but offensive in another. Train your moderators to spot these differences and use good judgment. Sometimes, you may need to work with teams who know global cultures well. This helps you make decisions that are fair and respectful.

Note: When you explain your moderation actions and apply your rules the same way every time, you build trust and encourage users to follow your guidelines.

A strong user-generated content moderation strategy supports safety, fairness, and community growth. You create a space where users feel safe to share, which leads to higher engagement and more conversions. When you moderate user-generated content with care and consistency, you protect your brand and help your platform thrive.

You can transform your marketing strategy by curating and moderating user-generated content. When you launch a user-generated content campaign, you invite your community to share authentic experiences. This approach helps you boost engagement and build trust. For example, Coca-Cola’s “Share a Coke” campaign encouraged customers to post photos of personalized bottles. Apple’s #ShotoniPhone campaign showcased real photos from users, highlighting product quality. Starbucks’s #WhiteCupContest and Glossier’s hashtag campaigns inspired creativity and fostered a sense of belonging. National Geographic’s #WanderlustContest brought together travel enthusiasts, creating a vibrant storytelling community.

You can also look at brands like SHEIN, which displays real customer photo reviews to create a community hub. HelloFresh combines branded and user-generated content in email and social media, using contests and influencer partnerships to boost conversions. These strategies help you drive purchasing decisions and achieve higher engagement. When you feature authentic UGC, you empower creators and encourage others to participate, which leads to more conversions and a stronger brand community.

Tip: Incentivize participation with rewards or recognition. This raises engagement, lifts conversions, and keeps contributions coming.

You must protect your brand while running user-generated content marketing campaigns. Start by setting clear guidelines so all UGC aligns with your brand’s voice and values. Use robust moderation to filter out harmful or off-brand content. Always get explicit permission from creators before sharing their content. Credit original creators to show respect and encourage more sharing.
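The permission and credit rules are easy to enforce in code before anything reaches a campaign page. A minimal sketch, assuming you record each creator's explicit consent alongside the submission; the `Submission` fields and the credit format are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Submission:
    creator_handle: str
    permission_granted: bool  # explicit opt-in recorded from the creator
    passed_moderation: bool   # cleared your normal moderation checks
    caption: str


def campaign_caption(sub: Submission) -> Optional[str]:
    """Return a credited caption, or None if the post must not be reused."""
    if not (sub.permission_granted and sub.passed_moderation):
        return None
    # Credit the original creator every time the content is shown.
    return f"{sub.caption} (Photo: @{sub.creator_handle})"


print(campaign_caption(Submission("jane_doe", True, True, "Morning coffee")))
# Morning coffee (Photo: @jane_doe)
print(campaign_caption(Submission("alex", False, True, "Beach day")))
# None
```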

Follow these steps to safeguard your reputation:

A strong user-generated content marketing strategy helps you boost conversions, achieve higher engagement, and drive purchasing decisions. When you combine compliance, moderation, and community involvement, you create campaigns that deliver real results.

You need to choose the right moderation tools to protect your platform and users. In 2025, you face new risks like AI-generated deepfakes and child grooming. Laws such as the U.S. REPORT Act expand what platforms must report to NCMEC’s CyberTipline once they become aware of it, adding child sex trafficking and online enticement to child sexual abuse material (CSAM), and extend how long reported content must be preserved. The best solutions use hash matching for known CSAM and AI classifiers for new or AI-generated threats. You must also look for tools that adapt to changing content trends and community norms.

When you evaluate content moderation solutions, focus on these key criteria:

| Evaluation Criterion | Description |
|---|---|
| Accuracy and Flexibility | Can the tool adapt to new content types and policy changes? |
| Real-Time Moderation | Does it process content fast enough to keep users safe? |
| Support for Multiple Formats | Can it handle text, images, video, audio, live streams, and many languages? |
| Ease of Integration | Are the APIs and dashboards easy for your team to use? |
| Compliance and Transparency | Does it help you meet legal standards and provide audit logs? |
| Scalability and Cost Efficiency | Will it work for your business size and budget? |
| Moderator Experience | Does it protect moderator well-being and reduce exposure to harmful content? |

Tip: Always check if the solution supports proactive detection and reporting to meet legal requirements.
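To turn the criteria table into a decision, you can rate each shortlisted tool per criterion and weight the criteria by what matters most to your platform. A minimal sketch of a weighted scoring matrix; the criterion keys, weights, 1-to-5 scale, and vendor ratings are hypothetical choices.

```python
from typing import Dict

# Hypothetical weights (they sum to 1.0) for the criteria in the table above.
WEIGHTS: Dict[str, float] = {
    "accuracy_flexibility": 0.25,
    "real_time": 0.15,
    "formats": 0.15,
    "integration": 0.10,
    "compliance": 0.15,
    "scalability_cost": 0.10,
    "moderator_experience": 0.10,
}


def weighted_score(ratings: Dict[str, int]) -> float:
    """Combine 1-5 ratings per criterion into one weighted score."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"unrated criteria: {sorted(missing)}")
    return round(sum(WEIGHTS[c] * ratings[c] for c in WEIGHTS), 2)


vendor_a = dict.fromkeys(WEIGHTS, 4)                                 # solid everywhere
vendor_b = {**dict.fromkeys(WEIGHTS, 3), "accuracy_flexibility": 5}  # strong accuracy only
print(weighted_score(vendor_a), weighted_score(vendor_b))  # 4.0 3.5
```

Rejecting incomplete ratings forces every vendor to be judged on the same criteria, including moderator experience, which is easy to skip in a demo.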

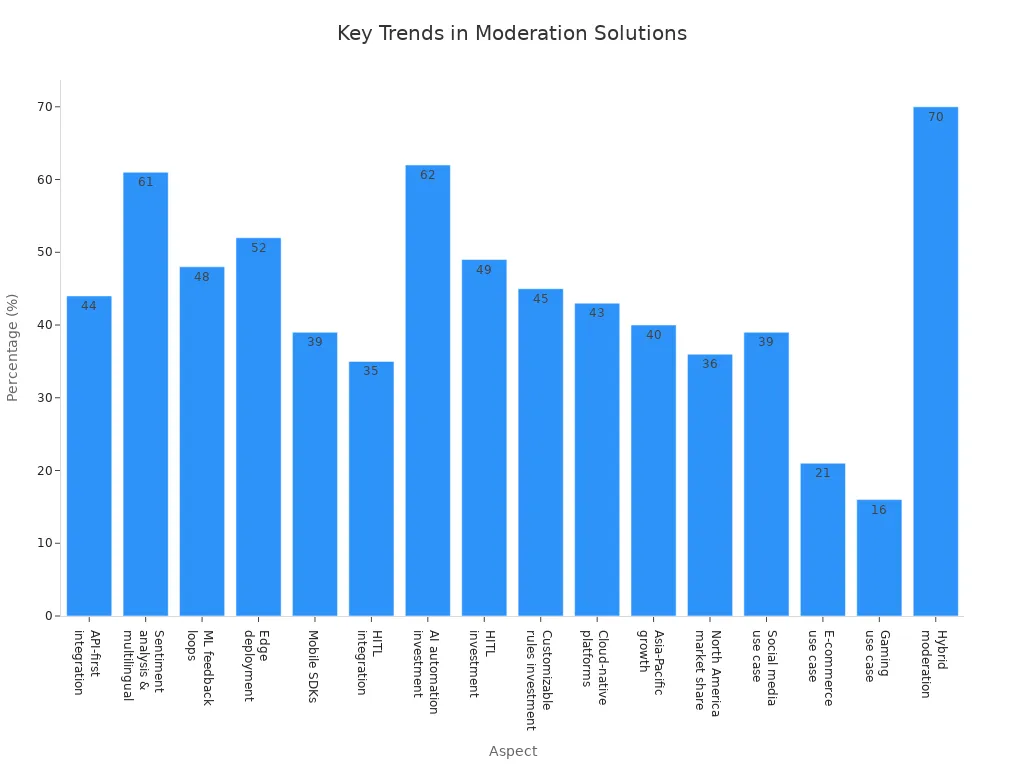

You want a solution that fits smoothly into your existing systems. Leading platforms use API-first, cloud-native tools that work across social media, e-commerce, and gaming. These tools use AI for natural language processing, image and video recognition, and sentiment analysis. This helps you scan and flag content in real time.

Hybrid models combine AI automation with human review. This approach gives you both speed and accuracy. Cloud infrastructure lets you scale up as your user base grows. You can handle large volumes of content in many languages and meet regional rules with customizable engines.

Key Trends in Moderation Solutions

You see more platforms using edge deployment and mobile SDKs for live streaming and mobile apps. Over 70% of companies now invest in hybrid moderation models. This trend helps you keep up with new risks and user demands. As digital content grows, you need solutions that scale and adapt without slowing down your platform.

Note: Choose a solution that grows with you and supports both automation and human oversight. This keeps your community safe and your platform ready for the future.

You must stay alert to new threats and trends in user-generated content moderation. The digital world changes quickly. New risks like deepfakes, misinformation, and evolving hate speech appear every year. You can protect your platform by following a set of best practices:

Tip: Combine AI-driven automation with human review to handle large volumes of content while keeping context and fairness.

You need to improve your moderation process all the time. The internet never stands still, so your policies and tools must evolve. Regular reviews help you address new threats and keep your community safe.

Note: Continuous improvement keeps your platform safe, fair, and ready for whatever comes next.

You play a key role in shaping safe, engaging communities through strong user-generated content moderation. Platforms like Wikipedia, YouTube, and Twitch show that ongoing adaptation is essential for success:

| Platform | Key Lesson on Adaptation |
|---|---|
| Wikipedia | Human judgment enables quick responses |
| YouTube | Over-reliance on automation causes issues |
| Twitch | Hybrid models balance control and nuance |

Effective moderation protects your community from harmful content, builds trust, and supports growth. Regularly review your strategies and tools to keep pace with new risks. Stay proactive and help create a safer online world for everyone.

You review and manage content that users post on your platform. This process helps you remove harmful, illegal, or inappropriate material. Moderation protects your community and keeps your platform safe.

AI tools scan large amounts of content quickly. Human moderators handle complex or sensitive cases. You get speed and accuracy when you use both together.

You check each report carefully. Automated systems filter obvious mistakes. Human moderators review unclear cases. This approach helps you avoid unfair removals.

You set clear rules for what users can and cannot post. Use simple language and real examples. Update your guidelines often to address new risks.

You provide mental health resources and regular training. Encourage breaks and open communication. Support helps your team stay healthy and effective.